Navigating the ML deployment interface

When you open an ML deployment, you can manage and monitor it, and use it to create predictions on datasets.

Open an ML deployment from the catalog. There are navigation options for the following:

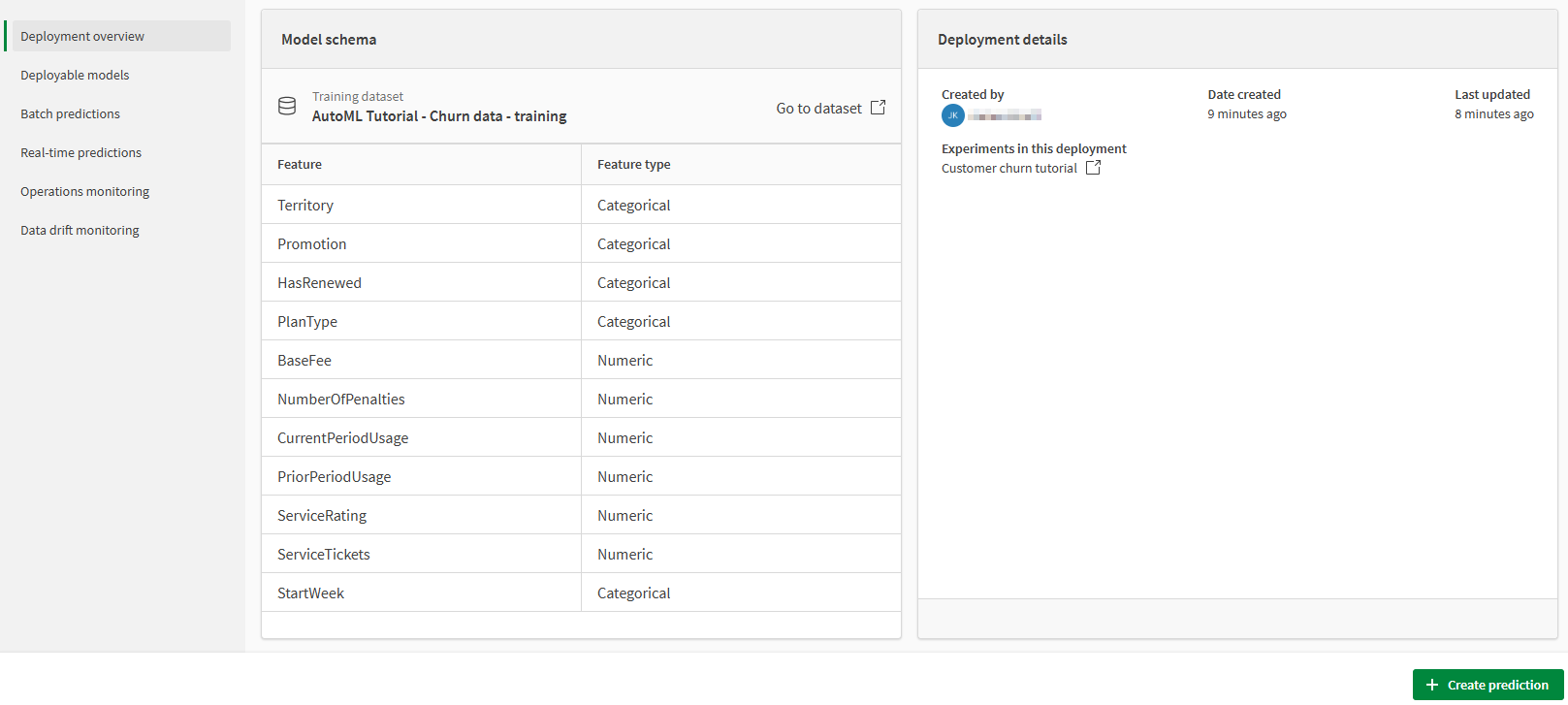

Deployment overview

The Deployment overview shows the features used in the model training and details for the deployment.

Overview of the ML deployment

If the default model in the deployment is inactive, you are notified in a banner at the top of the screen. If you have the correct permissions, you can activate the model by clicking Activate model. For more information, see:

Deployable models

The Deployable models pane is where you can manage model aliases and configure which models are used for predictions.

For more information, see Using multiple models in your ML deployment.

Deployable models pane in Qlik Predict

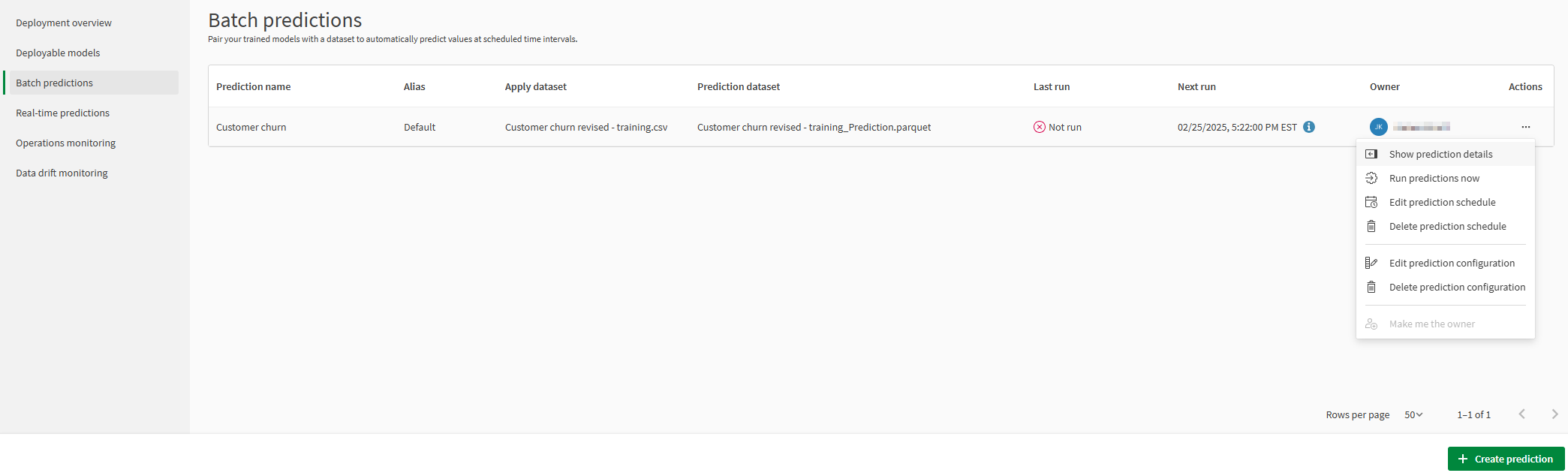

Batch predictions

In Batch predictions, you can manage and run batch predictions using the ML deployment. Click Create prediction to create a prediction configuration, from which you run batch predictions. You can have several prediction configurations for an ML deployment.

You can use the Actions menu in the table to:

- View and edit details for prediction configurations
- Run predictions from existing configurations
- Edit and delete configurations
- Create, edit, and delete prediction schedules for an existing configuration

Batch predictions with an overview and Actions menu expanded

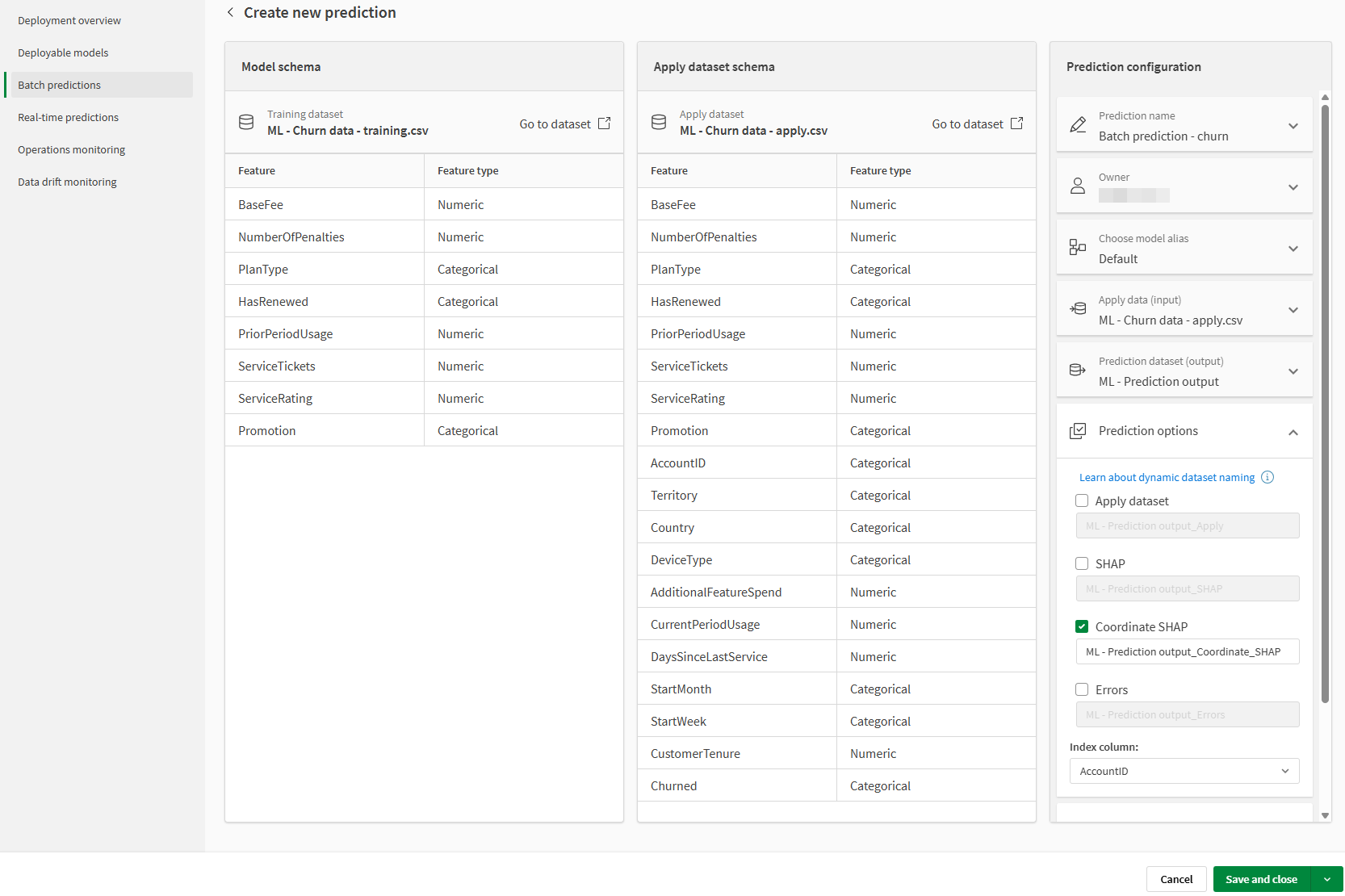

If you select Edit prediction configuration, the Prediction configuration pane opens. You can compare the model schema and the apply dataset schema side by side as you edit the prediction.
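The side-by-side comparison is done in the interface, but the idea behind it can be illustrated with a minimal sketch. The example below assumes that each schema can be represented as a mapping of column name to feature type. The names `SchemaDiff`, `compare_schemas`, and the sample columns are hypothetical and are not part of Qlik Predict.

```python
from dataclasses import dataclass, field


@dataclass
class SchemaDiff:
    """Differences between a model schema and an apply dataset schema."""
    missing_from_dataset: list[str] = field(default_factory=list)
    extra_in_dataset: list[str] = field(default_factory=list)
    # column name -> (type expected by the model, type found in the dataset)
    type_mismatches: dict[str, tuple[str, str]] = field(default_factory=dict)

    @property
    def compatible(self) -> bool:
        # Assumption for this sketch: extra dataset columns are harmless,
        # while missing columns or type mismatches are not.
        return not self.missing_from_dataset and not self.type_mismatches


def compare_schemas(model_schema: dict[str, str],
                    dataset_schema: dict[str, str]) -> SchemaDiff:
    """Compare the columns the model expects with the columns in the dataset."""
    diff = SchemaDiff()
    for name, expected_type in model_schema.items():
        if name not in dataset_schema:
            diff.missing_from_dataset.append(name)
        elif dataset_schema[name] != expected_type:
            diff.type_mismatches[name] = (expected_type, dataset_schema[name])
    diff.extra_in_dataset = [n for n in dataset_schema if n not in model_schema]
    return diff


# Hypothetical schemas, for illustration only.
model_schema = {"age": "numeric", "plan": "categorical", "tenure_months": "numeric"}
apply_schema = {"age": "numeric", "plan": "numeric", "customer_id": "categorical"}

diff = compare_schemas(model_schema, apply_schema)
print(diff)
```

In this sketch, a non-empty `missing_from_dataset` or `type_mismatches` signals that the apply dataset would need to be adjusted before running the prediction.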

If your deployment contains a time series model, you can also view important details about your defined time series problem, such as the forecast window, time step, and apply window.

Batch predictions with side pane for prediction configuration

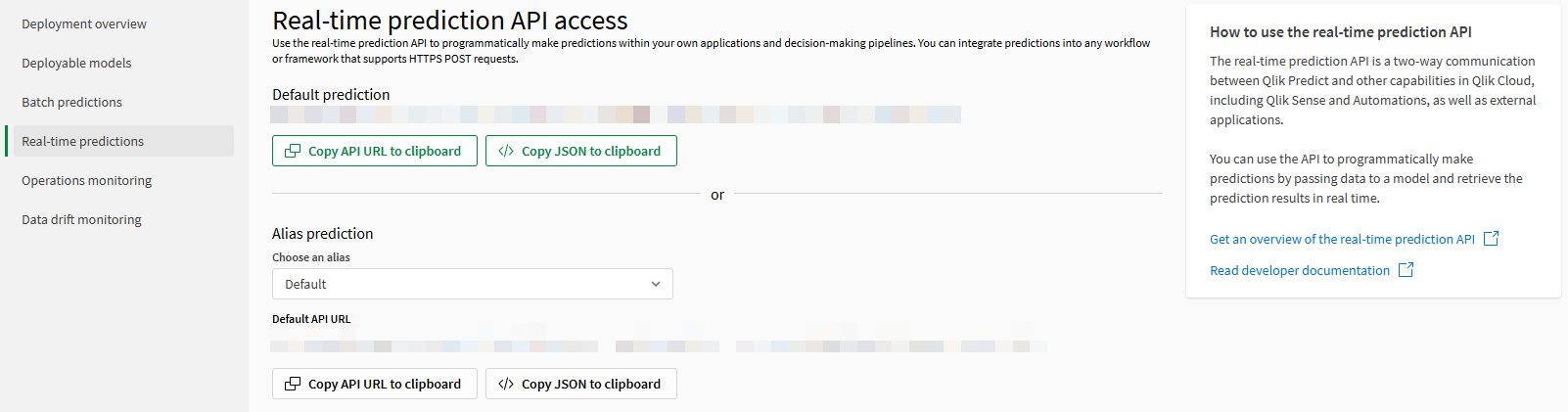

Real-time predictions

The Real-time predictions pane gives you access to the real-time prediction endpoint in the Machine Learning API. If the default model in the ML deployment is activated for making predictions, this pane is visible.

For information about creating real-time predictions, see Creating real-time predictions.

The real-time predictions API is deprecated and replaced by the real-time prediction endpoint in the Machine Learning API. The functionality itself is not being deprecated. For future real-time predictions, use the real-time prediction endpoint in the Machine Learning API. For help with migrating from the real-time predictions API to the Machine Learning API, refer to the migration guide on the Qlik Cloud developer portal.

Real-time predictions pane

Operations monitoring

In Operations monitoring, you can monitor usage information for the ML deployment. You can view details about how the ML deployment is being used, such as how many prediction events succeed or fail, and how prediction events are typically triggered.
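As a rough illustration of the kind of summary this pane presents, the sketch below counts prediction events by outcome and by trigger. The event records, their field names (`status`, `trigger`), and the function `summarize_events` are assumptions made for this example. They do not reflect the actual data model used by Operations monitoring.

```python
from collections import Counter


def summarize_events(events: list[dict]) -> dict:
    """Count prediction events by outcome and by how they were triggered."""
    status_counts = Counter(e["status"] for e in events)
    trigger_counts = Counter(e["trigger"] for e in events)
    total = len(events)
    return {
        "total": total,
        "succeeded": status_counts["succeeded"],
        "failed": status_counts["failed"],
        # Guard against division by zero when there are no events.
        "failure_rate": status_counts["failed"] / total if total else 0.0,
        "by_trigger": dict(trigger_counts),
    }


# Hypothetical event log, for illustration only.
events = [
    {"status": "succeeded", "trigger": "schedule"},
    {"status": "succeeded", "trigger": "manual"},
    {"status": "failed", "trigger": "schedule"},
    {"status": "succeeded", "trigger": "api"},
]

summary = summarize_events(events)
print(summary)
```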

For more information, see Monitoring deployed model operations.

Operations monitoring pane in ML deployment

Data drift monitoring

In Data drift monitoring, you can monitor data drift for the ML deployment.

With data drift monitoring, you can assess changes in the distribution of features in the source model. When significant drift is observed, it is recommended that you retrain or reconfigure your model to account for the latest data, because the drift may indicate new patterns in the data.
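To make "changes in the distribution of features" concrete, the sketch below computes a population stability index (PSI) for one numeric feature. PSI is one common drift measure. It is used here as an illustrative assumption and is not necessarily the exact calculation performed in the Data drift monitoring pane. The function name `population_stability_index`, the equal-width binning, and the sample data are hypothetical.

```python
import math


def population_stability_index(expected, actual, bins=10, eps=1e-6):
    """PSI between a training-time sample (expected) and a recent sample (actual).

    Bins are equal-width over the range of the expected sample. Values in the
    actual sample that fall outside that range are counted in the edge bins.
    """
    if not expected or not actual:
        raise ValueError("both samples must be non-empty")
    lo, hi = min(expected), max(expected)
    if hi == lo:
        raise ValueError("expected sample has no spread")
    width = (hi - lo) / bins

    def proportions(values):
        counts = [0] * bins
        for v in values:
            idx = int((v - lo) / width)
            idx = min(max(idx, 0), bins - 1)  # clamp out-of-range values
            counts[idx] += 1
        # Replace empty bins with a small value to keep the logarithm finite.
        return [max(c / len(values), eps) for c in counts]

    p = proportions(expected)
    q = proportions(actual)
    return sum((qi - pi) * math.log(qi / pi) for pi, qi in zip(p, q))


# Hypothetical feature values: the recent data is shifted upward by 3.
training = [i / 100 for i in range(1000)]
recent = [v + 3 for v in training]

print(population_stability_index(training, training))  # identical samples
print(population_stability_index(training, recent))    # shifted sample
```

A frequently cited rule of thumb treats a PSI above roughly 0.25 as significant drift, although suitable thresholds depend on the feature and the use case.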

For more information, see Monitoring data drift in deployed models.

Data drift monitoring pane in ML deployment