FTP and SFTP

This page covers reading from different file types using the FTP and SFTP connectors.

Text files and CSV files

The FTP and SFTP connectors allow reading data from text files (e.g. CSV) and writing to text files. The connector supports the following modes:

- Raw mode: lines in the file are handled as strings (one string per line).

- CSV mode (comma separated values): lines in the file are delimited with e.g. a comma or semi colon, and are converted to an array (list) when reading from the file.

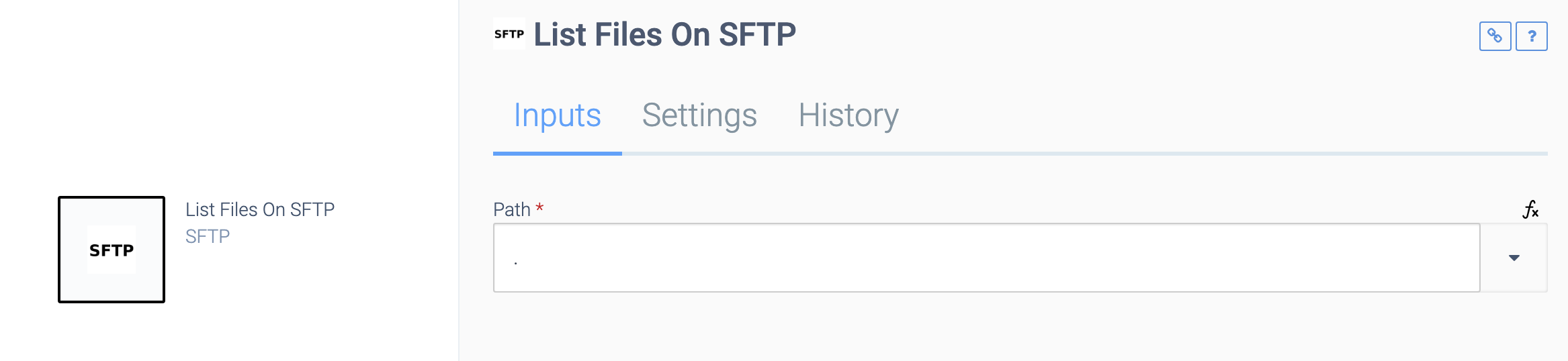

Listing files

Use .

for the Path to list the files in the default folder (as configured on the SFTP server for the current user) or /

for the root folder:

A List Files On SFTP block.

Example path for listing the files in a folder under the default folder:

./folder

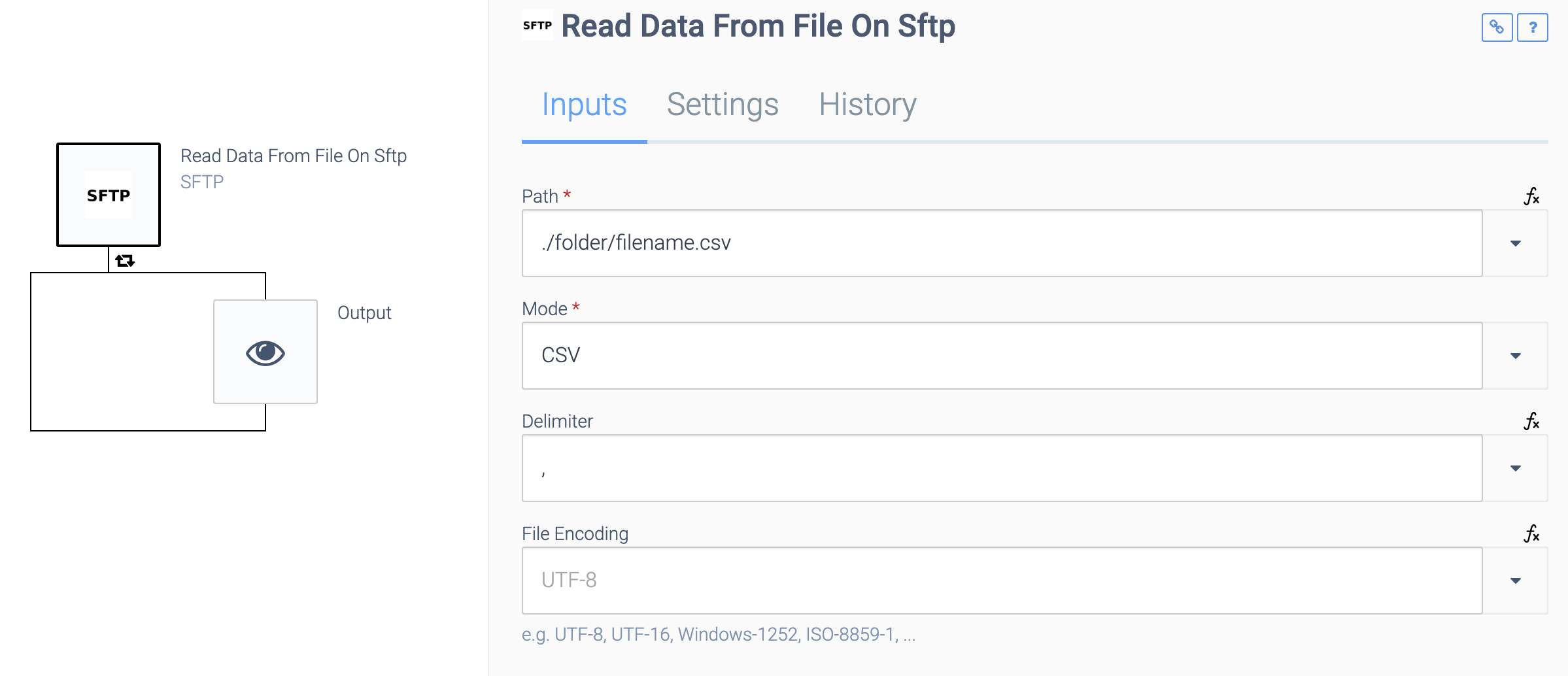

Reading data from files

Example of reading data from a CSV file:

an automation that reads and outputs data as an array.

The data from the file will be a list (array), where each item in the list represents one line.

For Raw mode files, each line will be a string. Example:

[

"first line of file",

"second line of file"

]

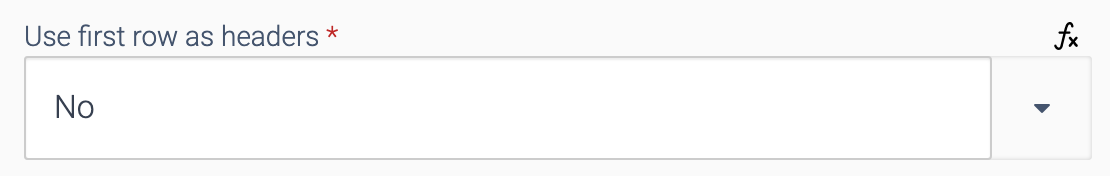

For CSV files, the structure of each line will depend on the setting Use the first row as headers

:

The Use the first row as headers

setting.

If Use first row as headers

is set to Yes: each line will be an object with the values from the CSV line, and the header line is used for keys. Example:

[

{

"first row header 1": "second row value 1",

"first row header 2": "second row value 2"

},

{

"first row header 1": "third row value 1",

"first row header 2": "third row value 2"

}

]

If Use first row as headers

is set to No: each line will be a list (array) with the values from the CSV line. Example:

[

[

"first row value 1",

"first row value 2"

],

[

"second row value 1",

"second row value 2"

]

]

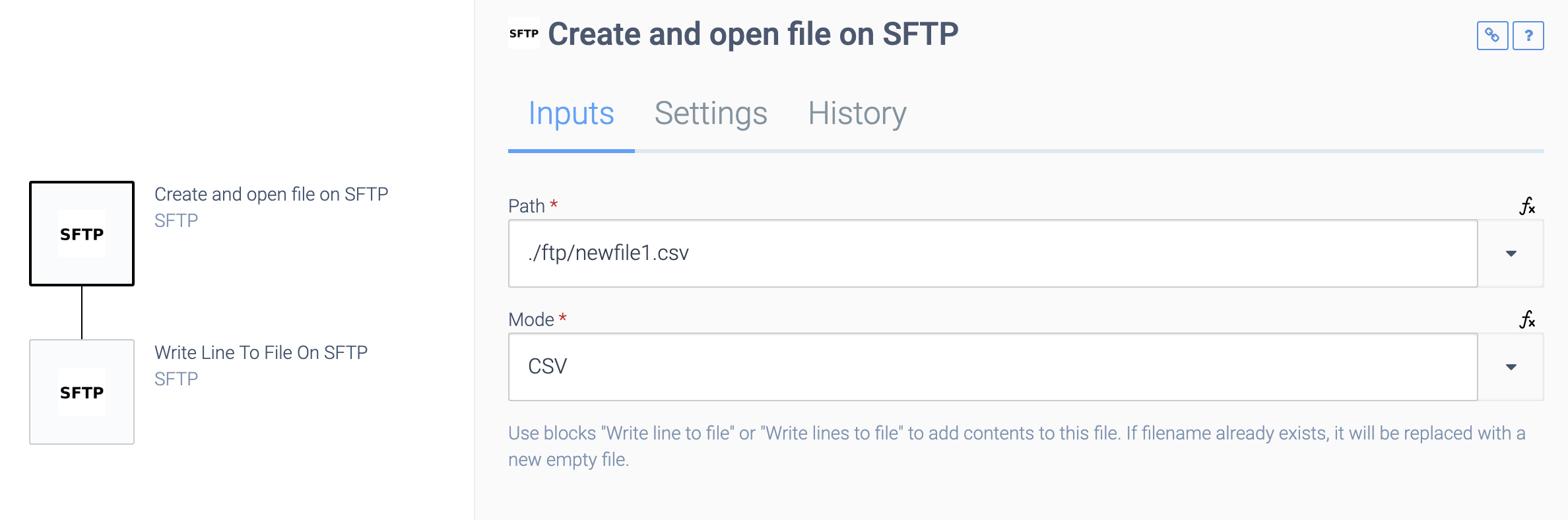

Writing data to a file

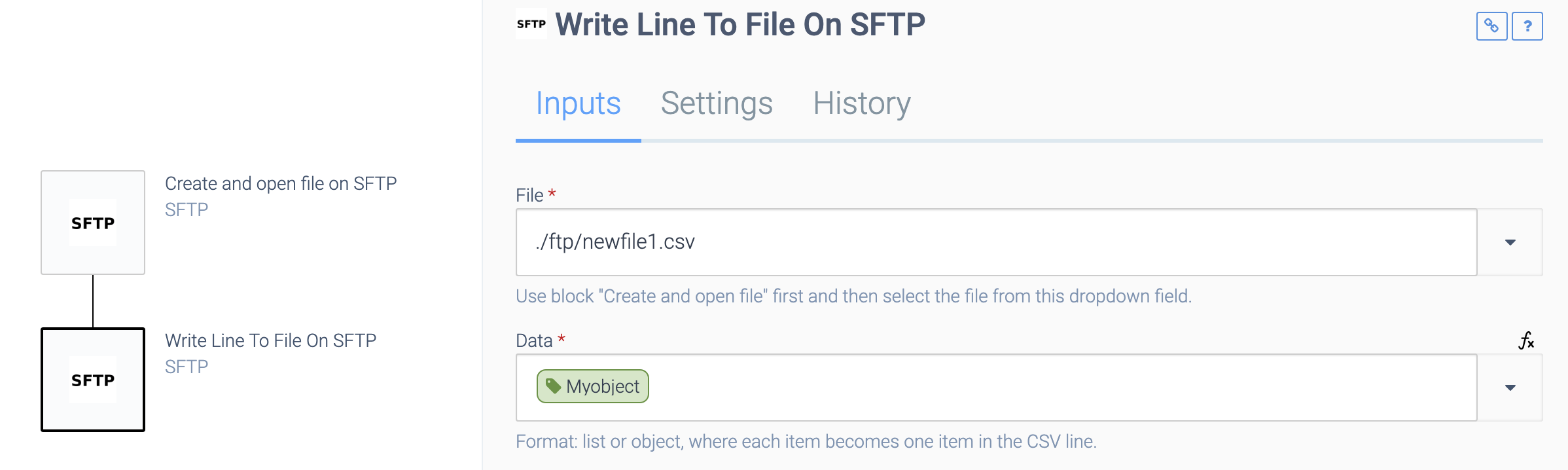

Create a new file using the block Create and open file

. Next, use the block Add line to file

or Add lines to file

, to add data to the newly opened file:

an automation that writes data to a file.

When adding one line to the file, the data should be a string for Raw mode, and it should be an object or list for CSV mode:

an automation that writes data to a file.

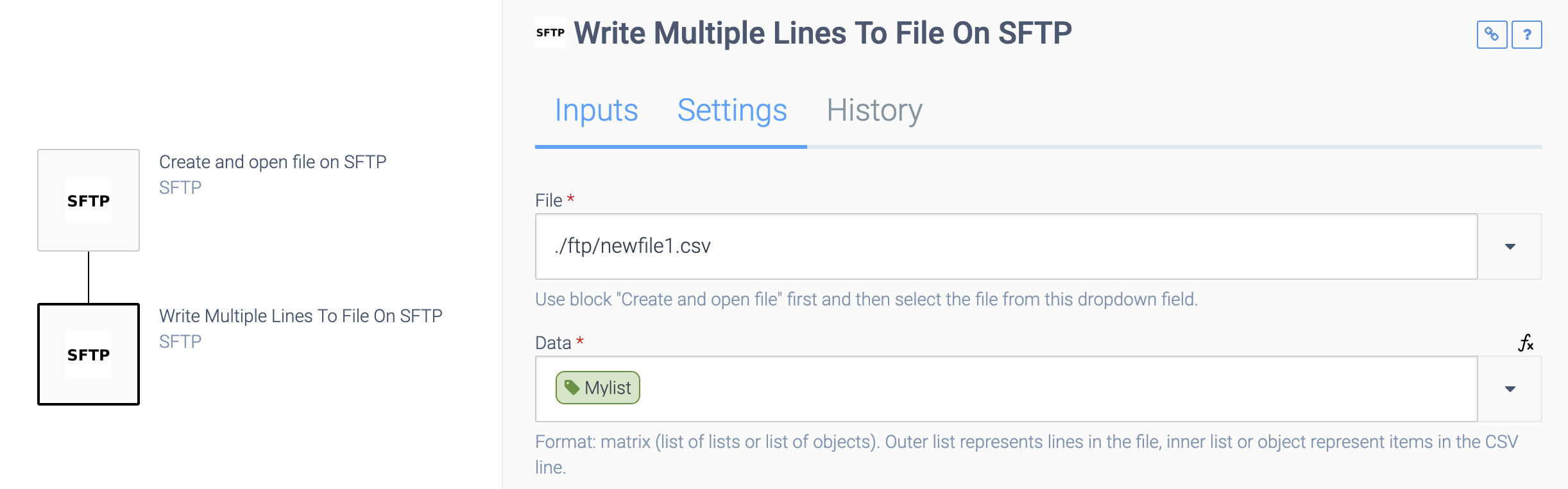

When adding multiple lines at once to a file, the data should be a list (array) where each item represents one line. The item should be a string for Raw mode and it should be an object or list for CSV mode:

Adding multiple objects at once.

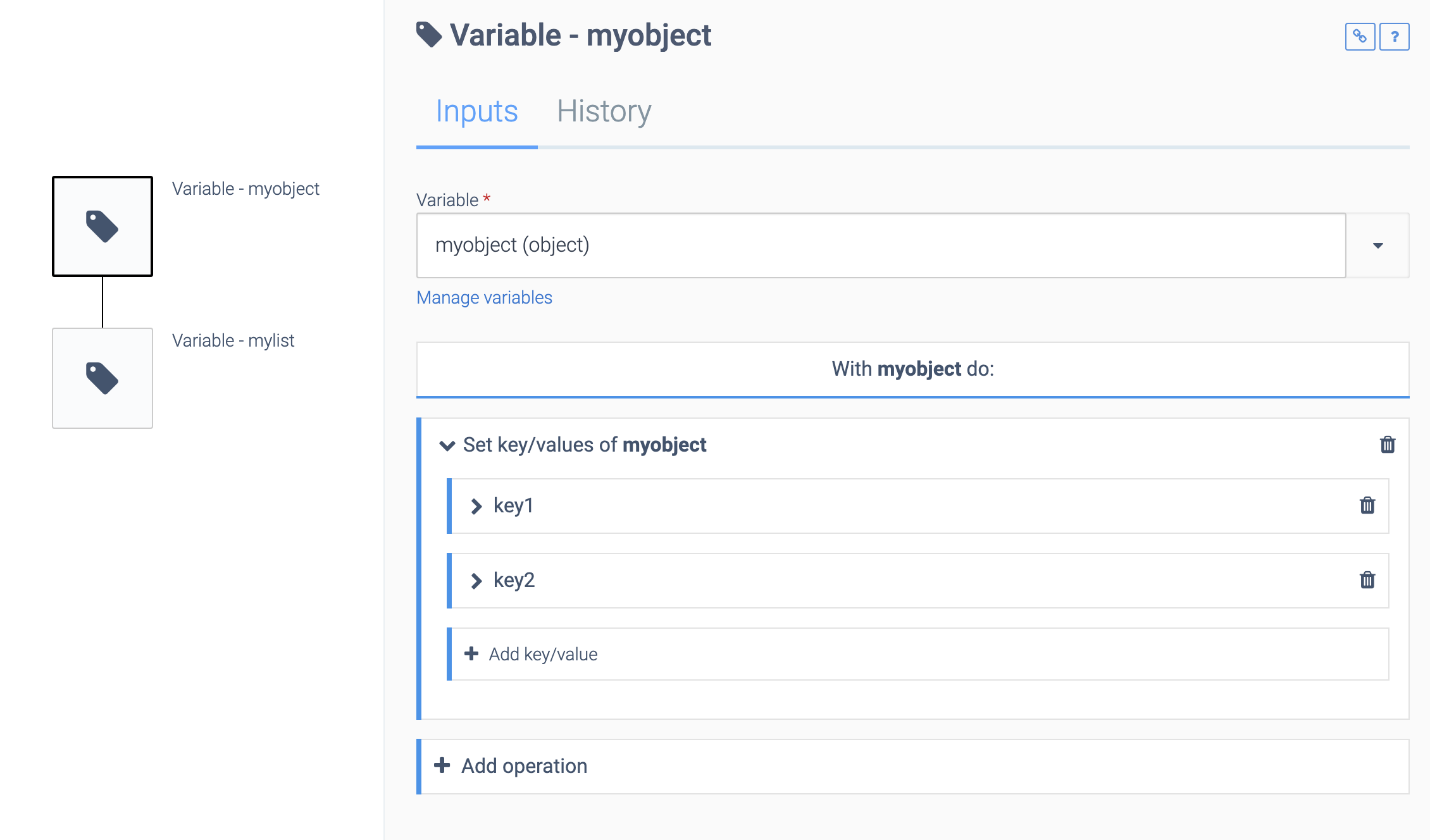

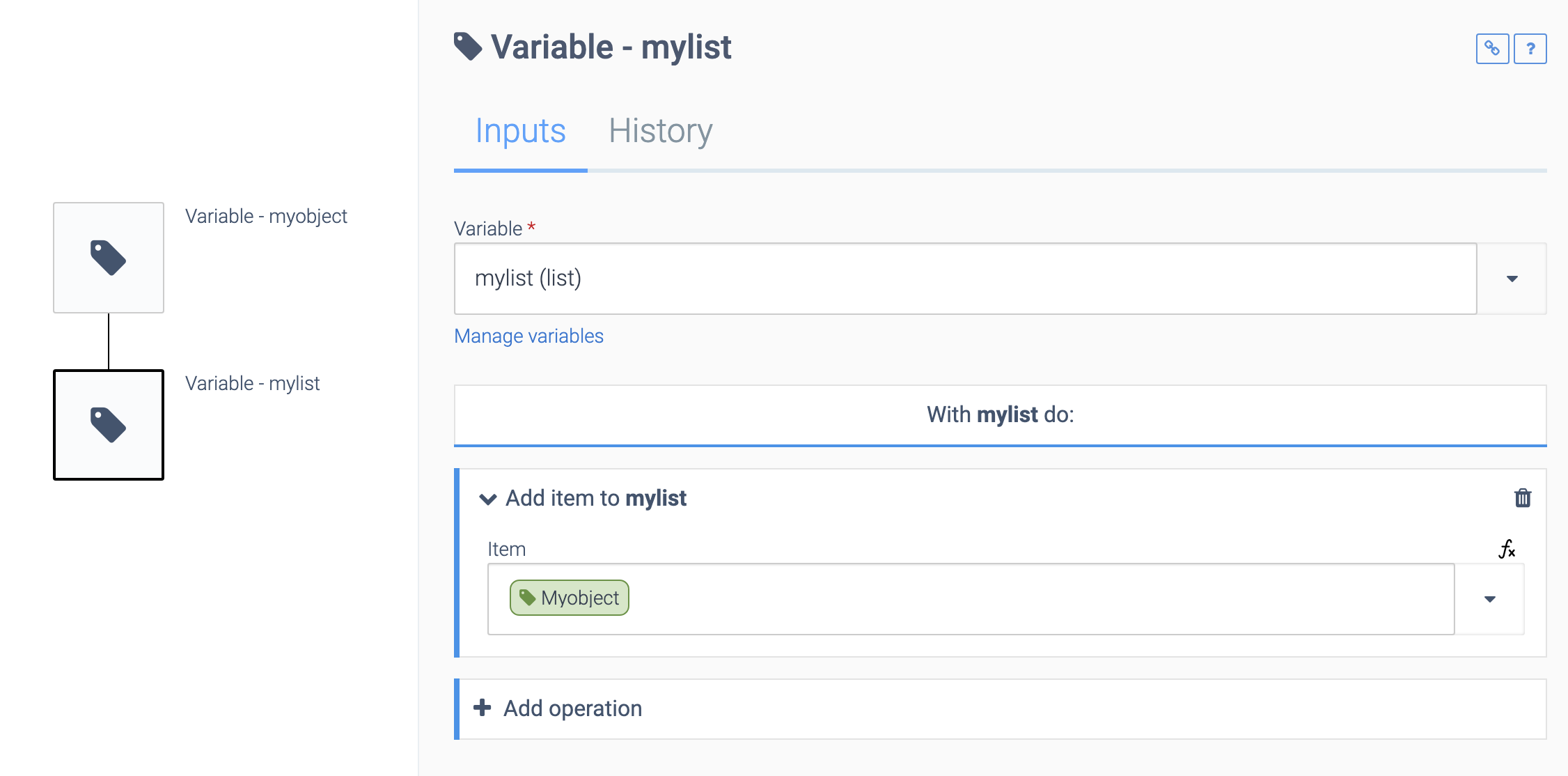

Here's how the variable MyList was created for the above example:

Formatting an object.

Formatting a list.

Appending data to a file

Adding data to an existing file is currently not supported. Instead, create an automation that performs the following steps:

- Open the existing file

- Create a new file

- Read all the data from the existing (old) file and add it to the new file

- Add the new data to the new file

- Delete the existing (old) file

Handling large amounts of data

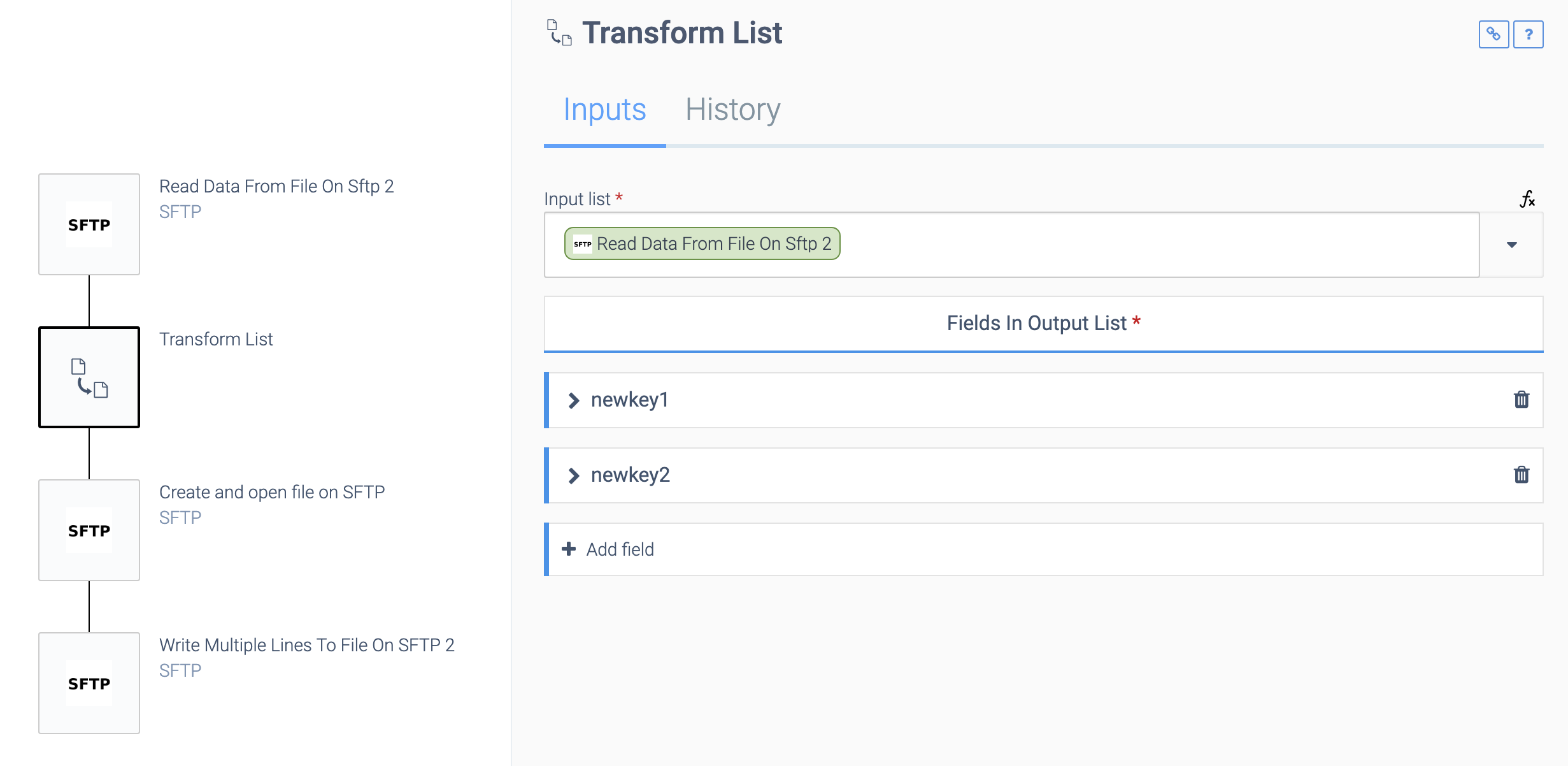

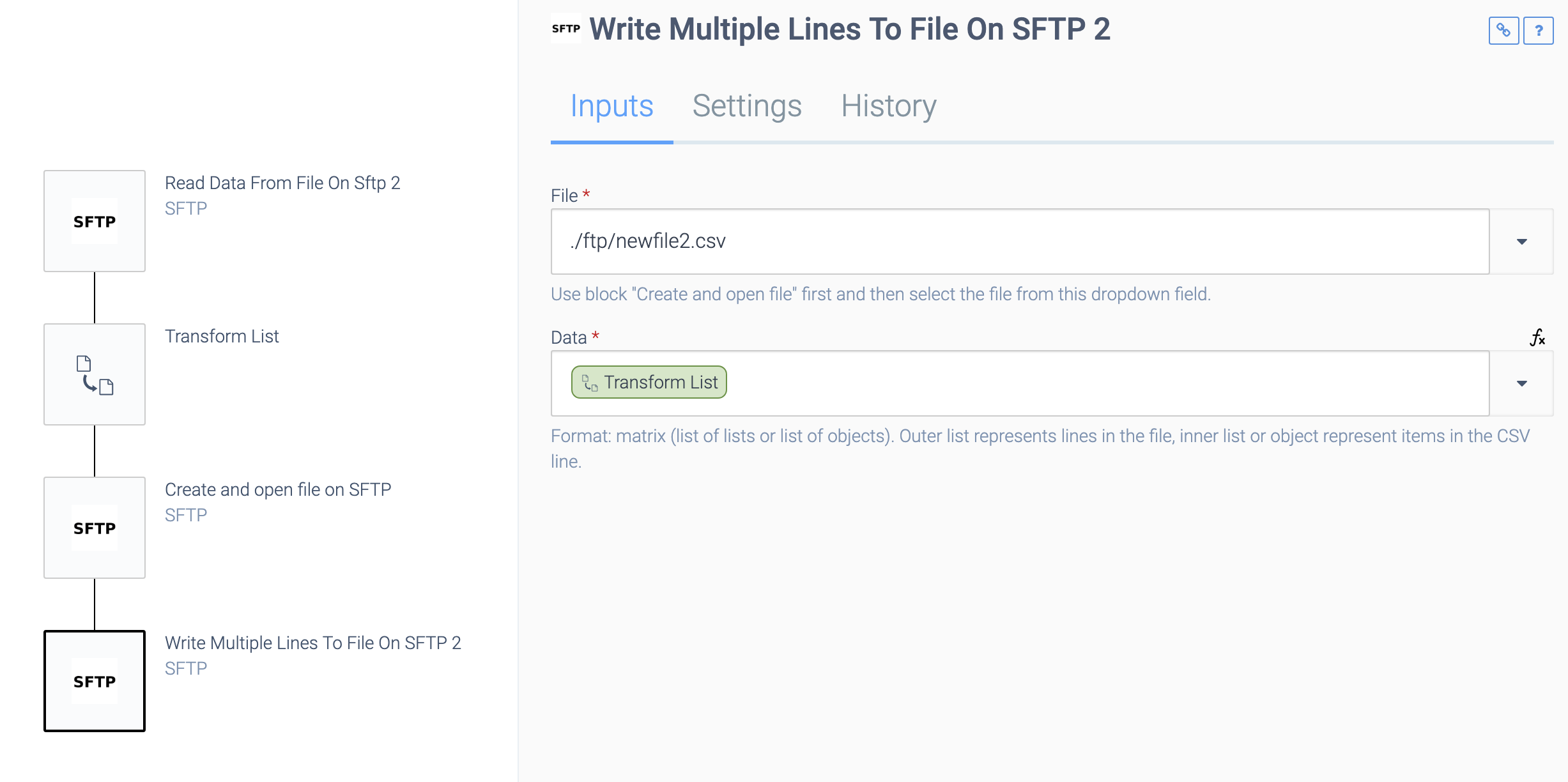

Here's an example automation that processes all the data from a CSV file at once, and writes it to a new file:

Processing all data at once.

Writing multiple lines at once.

This will work for large amounts of data since the blocks Read data from file

, Transform list

and Write to file

all use an iterator. This means that the full list will not be read into memory at once. Instead the input list (from the CSV file) will be iterated using an iterator, and the output list will not be created until the data is required.

In the above example automation, this means that the transformed list will be created as the data is written to the destination CSV file, using an iterator.

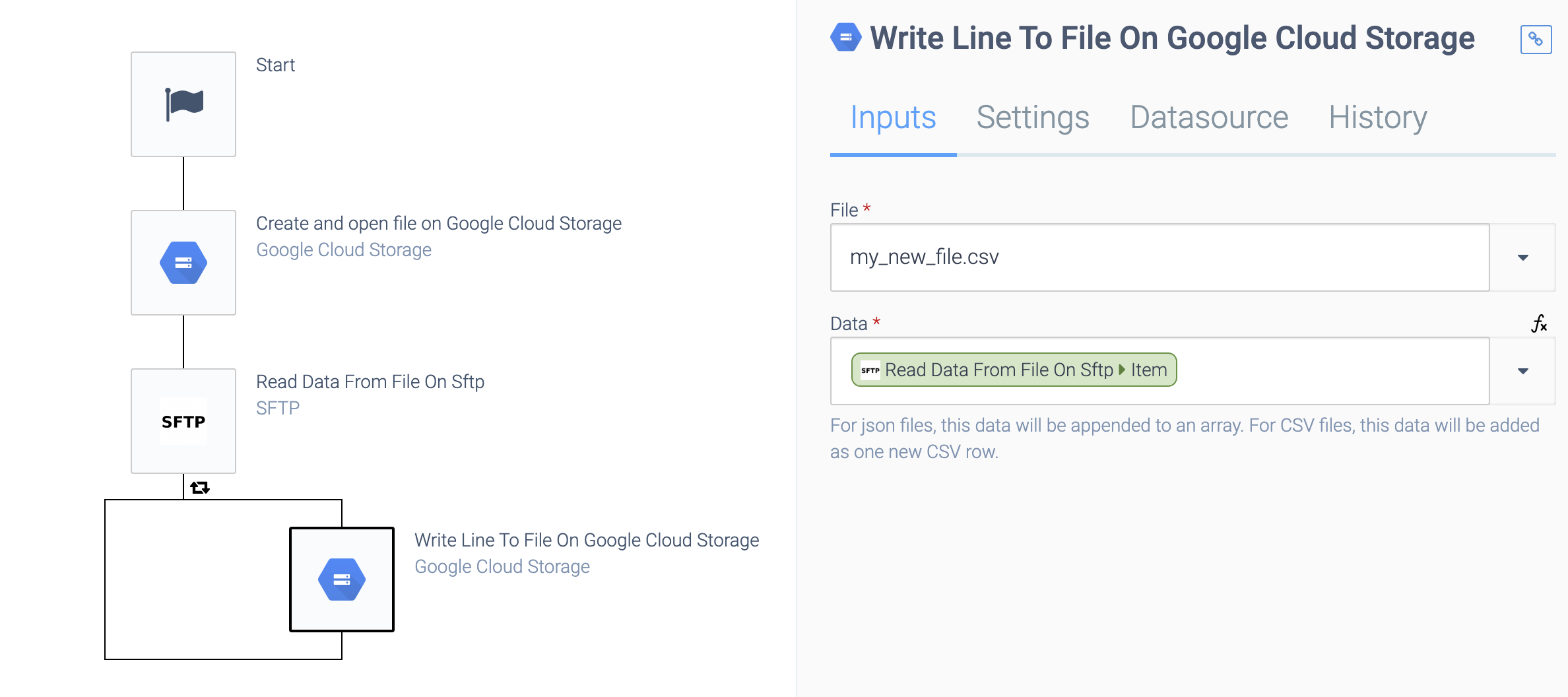

Here's another example that will also use an iterator, meaning it will work for (very) large files. A file is read from SFTP and created on Google Cloud Storage:

Writing from a file using an iterator.

Note that the block Write line to file

for Google Cloud Storage will use an internal buffer and write the data in larger chunks, so the above automation will work efficiently for large files.

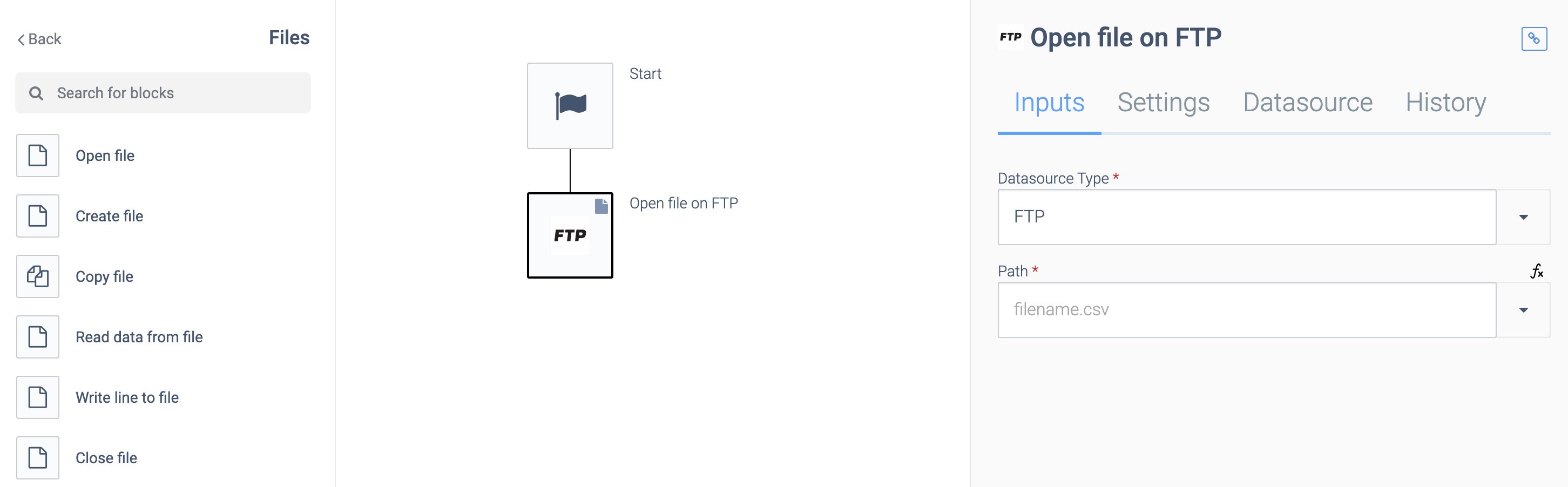

Reading and writing from binary files

Binary files are handled through the generic File blocks, not the (S)FTP connectors. Click on Files in the top left corner of the automation editor and select the Open file

block. Select FTP or SFTP as the datasource:

Reading from a binary file.

The generic file connector can handle both text files and binary files (e.g. PDF or PNG files). You can use this connector to stream very large files from a source to a destination, e.g. you could copy a file of 1TB from FTP to Amazon S3, Dropbox or other support cloud storage services.

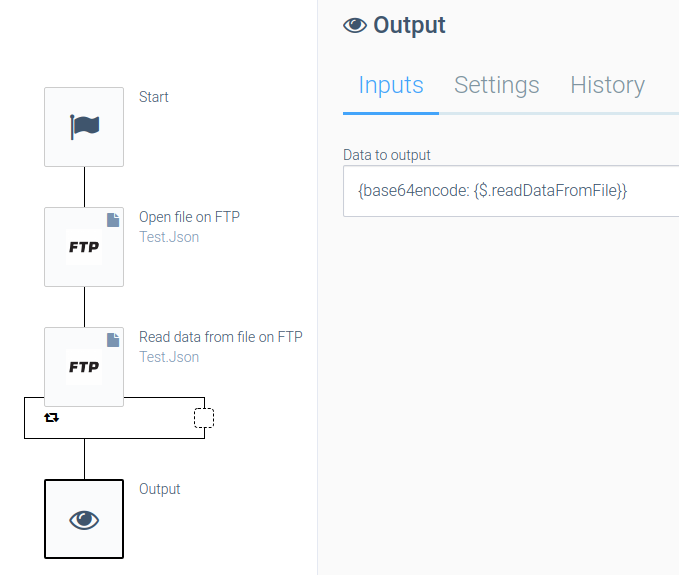

Base64 encoding

Use the formula base64encode

to convert the binary content to a base64 string representation:

Using base64encode.

This is useful if the file is e.g. a PDF that needs to be uploaded to a REST API. A common scenario is reading PDF invoices from FTP and uploading to an accounting platform.

Did this page help you?

If you find any issues with this page or its content – a typo, a missing step, or a technical error – let us know how we can improve!