Example – Training models with automated machine learning

In this example, you will train machine learning models using intelligent model optimization. With intelligent model optimization, AutoML handles the process of iterating and refining your models for you.

For more information about intelligent model optimization, see Intelligent model optimization.

What you will learn

In this example, you will learn:

- How to create and configure an ML experiment

- How intelligent optimization can provide automatic model refinement

- How to view and analyze training results

Other considerations

Intelligent model optimization is most effective at refining models when it is given a well-prepared dataset. To ensure that your models are of high quality in real-world use cases, it is essential that you first follow the structured framework and prepare a training dataset with relevant features and data.

Intelligent model optimization can optionally be turned off for each experiment version. When you turn this setting off, you optimize your models manually. Manual optimization can be helpful if you want to make specific adjustments to the experiment configuration. For example, you could run one version with intelligent model optimization, then turn it off in a later version to make small manual tweaks while still benefiting from the automatic refinement that the earlier version provided.

This example covers experiment training with intelligent optimization. For a full tutorial showing how to use manual optimization, see Tutorial – Generating and visualizing prediction data. The tutorial also provides end-to-end guidance in deploying models, making predictions, and visualizing prediction data with interactive Qlik Sense apps.

Who should complete this example

You should complete this example to learn how to use intelligent model optimization to refine your machine learning models.

To complete this example, you need the following:

- Professional or Full User entitlement

- Automl Experiment Contributor security role in the tenant

- If you are working in a collaborative space, the required space roles in the spaces where you will be working. See Managing permissions in shared spaces.

If you are not able to view or create ML resources, this likely means that you do not have the required roles, entitlements, or permissions. Contact your tenant admin for further information.

For more information, see Who can work with Qlik AutoML.

What you need to do before you start

Download this package and unzip it on your desktop:

AutoML example - Intelligent model optimization

The package contains the training dataset you will use to train models. The dataset contains information about customers whose renewal deadline has passed and who have decided either to churn or to remain subscribed to the service.
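Before uploading, it can be useful to check locally how the target values are distributed. The following is a minimal Python sketch and is not part of Qlik AutoML. It assumes the target column is named Churned, as in this example. The sample rows, the PlanType column, and the yes/no values are invented stand-ins for the real file.

```python
import csv
import io
from collections import Counter


def target_distribution(csv_file, target="Churned"):
    """Count how often each value of the target column occurs."""
    reader = csv.DictReader(csv_file)
    if reader.fieldnames is None or target not in reader.fieldnames:
        raise ValueError(f"column {target!r} not found")
    return Counter(row[target] for row in reader)


# Tiny in-memory stand-in for the real file; rows and values are invented.
sample = io.StringIO(
    "AccountID,PlanType,Churned\n"
    "a1,Basic,yes\n"
    "a2,Plus,no\n"
    "a3,Basic,no\n"
)
counts = target_distribution(sample)
print(counts)
```

To run the same check on the downloaded file, open it with `open("AutoML Example - Churn data - training.csv", newline="")` and pass the file object to `target_distribution`.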

Do the following:

- Open the Analytics activity center.

- Go to the Create page, select Dataset, and then select Upload data file.

- Drag the AutoML Example - Churn data - training.csv file to the upload dialog.

- Select a space. It can be your personal space or a shared space if you want other users to be able to access this data.

- Click Upload.

Now that the dataset is uploaded, you can proceed to creating an experiment.

Part 1: Create an experiment

Do the following:

- Go to the Create page of the Analytics activity center and select ML experiment.

- Enter a name for your experiment, for example, Intelligent optimization example.

- Optionally, add a description and tags.

- Choose a space for your experiment. It can be your personal space or a shared space.

- Click Create.

- Select the AutoML Example - Churn data - training.csv file.

Part 2: Configure the experiment

Next, we can configure the experiment.

Intelligent model optimization requires less initial configuration than manual optimization. In this case, we will select a target and use all features that are included by default.

Selecting the target

We want our machine learning model to predict customer churn, so we select Churned, the final column in the dataset, as our target.

In the experiment, the Data tab should be the only tab shown. You can select a target in multiple ways, but here we use the Schema view, which has opened by default.

Do the following:

- In the schema, hover over Churned and click the target icon that appears.

Selecting the target in Schema view

Confirming feature selection

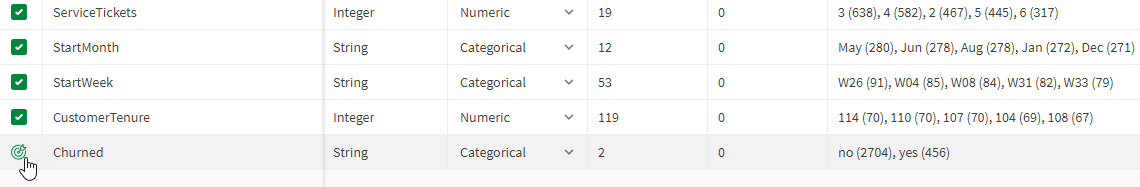

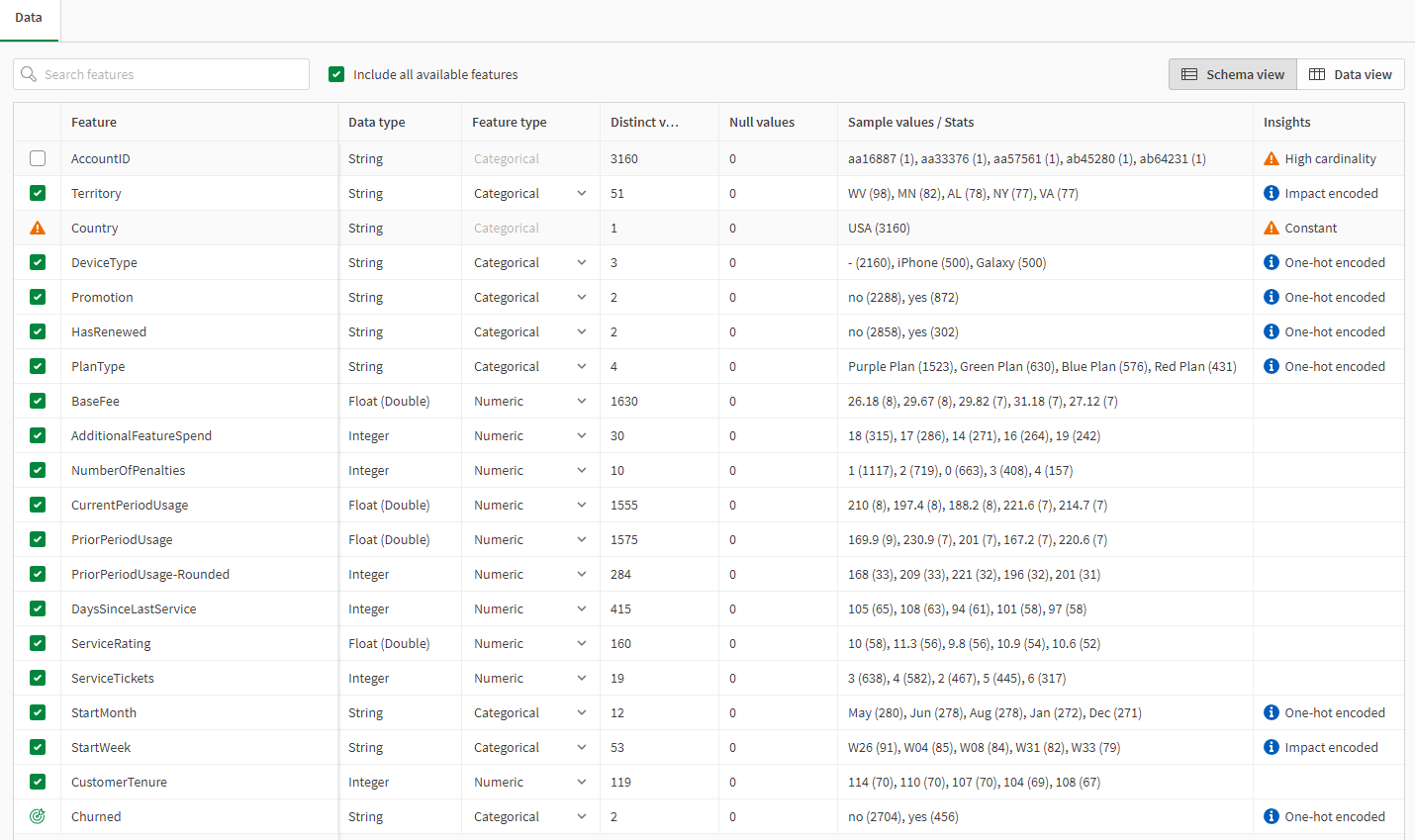

After you select a target, all available and recommended features are included by default. In Schema view, confirm that all but two features are included. There should be filled check boxes next to each included feature. Country is not available for use. AccountID is not recommended for use due to high cardinality, so we leave it unselected.

Schema showing default feature selection

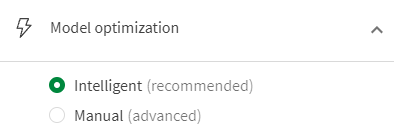
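To see why an identifier such as AccountID is a poor feature, consider how many distinct values a column has relative to its row count. The sketch below is illustrative only. The 0.9 threshold, the PlanType column, and the sample values are assumptions made for this example and do not describe the rule that Qlik AutoML applies.

```python
def cardinality_ratio(values):
    """Return distinct values divided by total values (1.0 means every row is unique)."""
    values = list(values)
    if not values:
        return 0.0
    return len(set(values)) / len(values)


def flag_high_cardinality(columns, threshold=0.9):
    """Return names of columns whose cardinality ratio meets the assumed threshold."""
    return [name for name, values in columns.items()
            if cardinality_ratio(values) >= threshold]


# Invented sample: AccountID is unique per row, PlanType repeats.
columns = {
    "AccountID": ["a1", "a2", "a3", "a4", "a5"],
    "PlanType": ["Basic", "Plus", "Basic", "Basic", "Plus"],
}
flagged = flag_high_cardinality(columns)
print(flagged)
```

A column where nearly every row has its own value gives a model nothing to generalize from, which is why we leave AccountID unselected.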

Confirming intelligent optimization

A panel should be open on the right side of the page to configure additional settings. We want to check that intelligent model optimization is turned on.

Do the following:

- If the experiment configuration panel is not open, click View configuration to open it.

- In the panel, expand Model optimization.

- Confirm that the selected optimization option is Intelligent.

Confirming the experiment version is running with the Intelligent optimization option

Run the training

Click Run experiment in the bottom right corner of the page to begin the model training.

Part 3: View the results

After training is completed, the Models tab appears and opens. This is where you can view what optimizations were performed during training. The top model, marked with an icon, is automatically selected. Let's analyze this model.

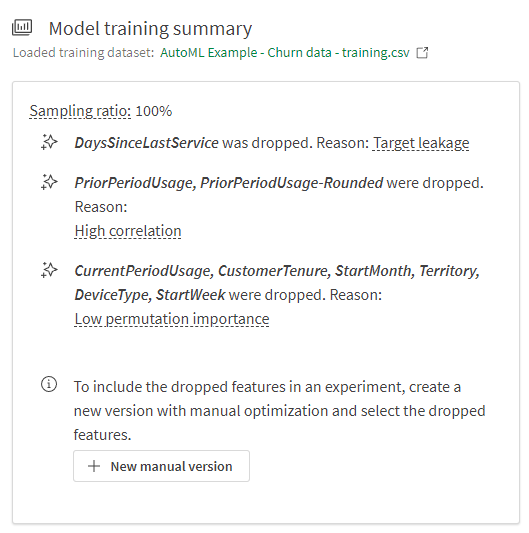

Look at the Model training summary. This shows you the results of the intelligent optimization for this model. In this case, we can see that the following features were dropped, and the reason for their removal is provided:

- DaysSinceLastService was dropped due to suspected target leakage. In this case, the column contained data with improper logic. The days since the last service ticket were still actively being counted for customers who had canceled their service (in some cases, years ago). This feature needed to be removed because it would have given the model false performance scores and would have caused the model to perform very poorly if deployed. See Data leakage.

- PriorPeriodUsage and PriorPeriodUsage-Rounded were dropped because they were too highly correlated with another feature. The feature with which they are correlated was still included in the training. See Correlation.

- CurrentPeriodUsage, CustomerTenure, StartMonth, Territory, DeviceType, and StartWeek were all dropped due to low permutation importance. Features that have low impact on the model are seen as statistical noise and can be removed to improve performance. See Understanding permutation importance.

Model training summary chart showing the features that were dropped with intelligent optimization
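To make the correlation reason more concrete, the sketch below computes a Pearson correlation coefficient between a numeric column and a rounded copy of it. It is a plain-Python illustration with invented numbers. It does not show how Qlik AutoML measures correlation, which features it compares, or which threshold it uses.

```python
import math


def pearson(xs, ys):
    """Pearson correlation coefficient of two equal-length numeric sequences."""
    if len(xs) != len(ys) or len(xs) < 2:
        raise ValueError("need two sequences of equal length >= 2")
    mean_x = sum(xs) / len(xs)
    mean_y = sum(ys) / len(ys)
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    if var_x == 0 or var_y == 0:
        raise ValueError("correlation is undefined for a constant column")
    return cov / math.sqrt(var_x * var_y)


# Invented usage figures; the second column is just round() of the first.
usage = [12.4, 48.9, 30.2, 75.6, 5.1, 61.3]
usage_rounded = [round(u) for u in usage]
r = pearson(usage, usage_rounded)
print(f"r = {r:.4f}")
```

A coefficient this close to 1 means the second column carries almost no information beyond the first, so keeping both adds redundancy rather than predictive value.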

Now that these features are removed, we can see visualizations showing the most influential features, as well as some indicators of the model's predictive performance. What you see in these charts can help you assess if something is missing in the feature set, or if the results are skewed.

For more information about analyzing models with these visualizations, see Performing quick model analysis.
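The idea behind permutation importance, which was the reason several features were dropped, can also be sketched in a few lines: shuffle one column at a time and measure how much the model's score falls. The example below is a simplified illustration, not Qlik AutoML's implementation. The toy model, the data, the accuracy metric, and the number of repeats are all hypothetical.

```python
import random


def accuracy(predict, rows, labels):
    """Fraction of rows for which predict(row) matches the label."""
    hits = sum(1 for row, label in zip(rows, labels) if predict(row) == label)
    return hits / len(rows)


def permutation_importance(predict, rows, labels, n_repeats=20, seed=0):
    """Mean drop in accuracy when each column is shuffled, one column at a time."""
    rng = random.Random(seed)
    baseline = accuracy(predict, rows, labels)
    importances = []
    for col in range(len(rows[0])):
        drops = []
        for _ in range(n_repeats):
            shuffled = [row[col] for row in rows]
            rng.shuffle(shuffled)
            permuted = [row[:col] + [value] + row[col + 1:]
                        for row, value in zip(rows, shuffled)]
            drops.append(baseline - accuracy(predict, permuted, labels))
        importances.append(sum(drops) / n_repeats)
    return importances


# Hypothetical stand-in model: predicts churn (1) when column 0 is below 3.
# Column 1 is ignored by the model, so shuffling it cannot change any prediction.
def toy_model(row):
    return 1 if row[0] < 3 else 0


rows = [[1, 7], [2, 3], [5, 9], [6, 1], [0, 4], [8, 8], [2, 2], [7, 5]]
labels = [toy_model(row) for row in rows]  # labels the toy model fits perfectly
scores = permutation_importance(toy_model, rows, labels)
print(scores)
```

The column that the model ignores scores exactly zero, while shuffling the column it depends on lowers accuracy. Features with scores near zero are the ones treated as statistical noise.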

Dive deeper into analysis

If you want to further explore the model metrics, switch to the Compare and Analyze tabs in the experiment. These tabs give you a more granular, interactive view of the metrics.

For more information, see Comparing models and Performing detailed model analysis.

Next steps

With a high-quality dataset, intelligent optimization creates ready-to-deploy models with little to no further iteration required. From this point, it is recommended that you deploy the top-performing model. Otherwise, you can continue refining the models manually, or update the training data and run intelligent model optimization again.

Thank you!

You have completed this example. We hope that you have learned how you can use intelligent model optimization to train ready-to-deploy machine learning models with ease.

Further reading and resources

Qlik offers a wide variety of resources when you want to learn more.

- Qlik online help is available.

- Training, including free online courses, is available on Qlik Learning.

- Discussion forums, blogs, and more can be found in Qlik Community.
