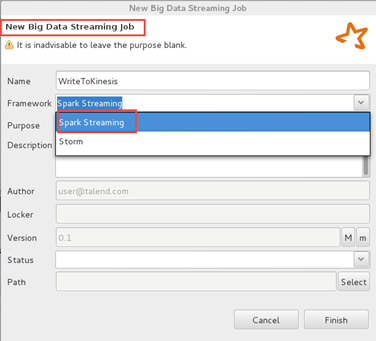

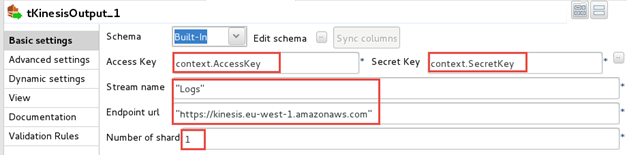

Writing data to an Amazon Kinesis Stream

Before you begin

This section assumes that you have an Amazon EMR cluster up and running and that you have created the corresponding cluster connection metadata in the Repository. It also assumes that you have already created an Amazon Kinesis stream.
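Before running the Job, you may want to confirm that the Kinesis stream exists and is ready to accept records. The following is a minimal sketch that sits outside the Studio workflow itself. It assumes Python with the AWS SDK for Python (boto3) installed and AWS credentials already configured. It polls the Kinesis `describe_stream_summary` call until the stream reports the `ACTIVE` status. The function name `wait_for_stream_active`, the example stream name, and the default region are hypothetical placeholders.

```python
import time


def wait_for_stream_active(stream_name, region_name="us-east-1", client=None,
                           timeout_s=300, poll_s=5):
    """Poll a Kinesis stream until it is ACTIVE.

    Returns True once the stream reports ACTIVE, or False if timeout_s elapses
    first. A ResourceNotFoundException from boto3 (stream missing) is not
    caught here and propagates to the caller.
    """
    if client is None:
        # boto3 is imported lazily so that a pre-built client can be injected.
        import boto3
        client = boto3.client("kinesis", region_name=region_name)

    deadline = time.monotonic() + timeout_s
    while True:
        summary = client.describe_stream_summary(StreamName=stream_name)
        status = summary["StreamDescriptionSummary"]["StreamStatus"]
        if status == "ACTIVE":
            return True
        # Other possible states: CREATING, UPDATING, DELETING.
        if time.monotonic() >= deadline:
            return False
        time.sleep(poll_s)
```

For example, `wait_for_stream_active("my-kinesis-stream", region_name="eu-west-1")` returns `True` once the (hypothetical) stream is active. boto3 also ships a built-in waiter, `client.get_waiter("stream_exists")`, which serves a similar purpose if you prefer not to write the polling loop yourself.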