New features

Application integration

| Feature | Description |

|---|---|

| Support for OAuth connection to Kafka Registry in cKafka component | Talend Studio

now supports the OAuth 2.0 authentication to connect to Kafka Registry in the

cKafka component.

|

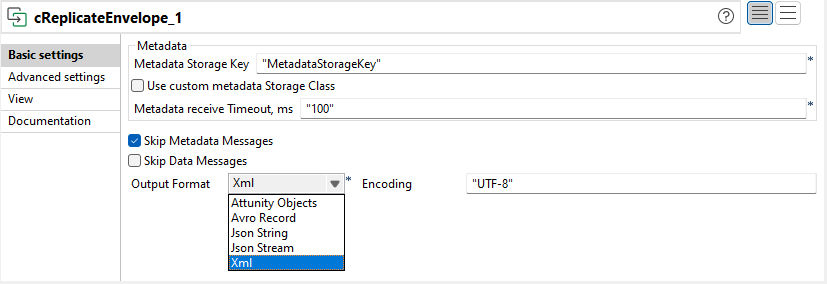

| Support for inline selection of Avro decoder and output format choice in cReplicateEnvelope component | The cReplicateEnvelope component is enhanced with:

|

| Support for Jetty server and access log configuration for Microservices | New parameters are added in the properties file of Microservice to configure Jetty server and access logs. For more information, see Running a Microservice. |

Big Data

| Feature | Description |

|---|---|

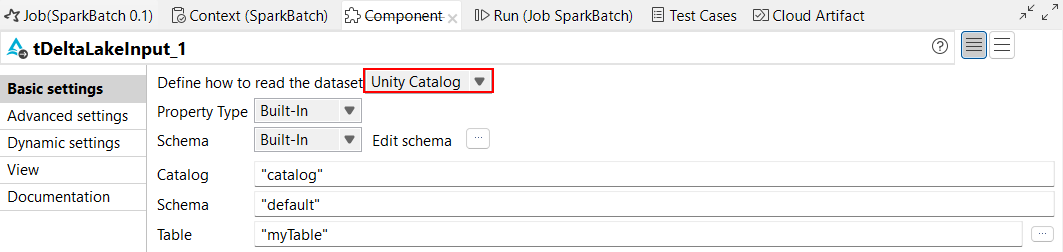

| Support for Delta Lake managed tables with Unity Catalog | You can now use Unity Catalog to work with managed tables in tDeltaLakeInput

and tDeltaLakeOutput components. With Unity Catalog, you can also analyze your data using data lineage. Note that data lineage is only supported for components using DS and not RDD. For more information, see Working with Databricks managed tables using Unity Catalog.

|

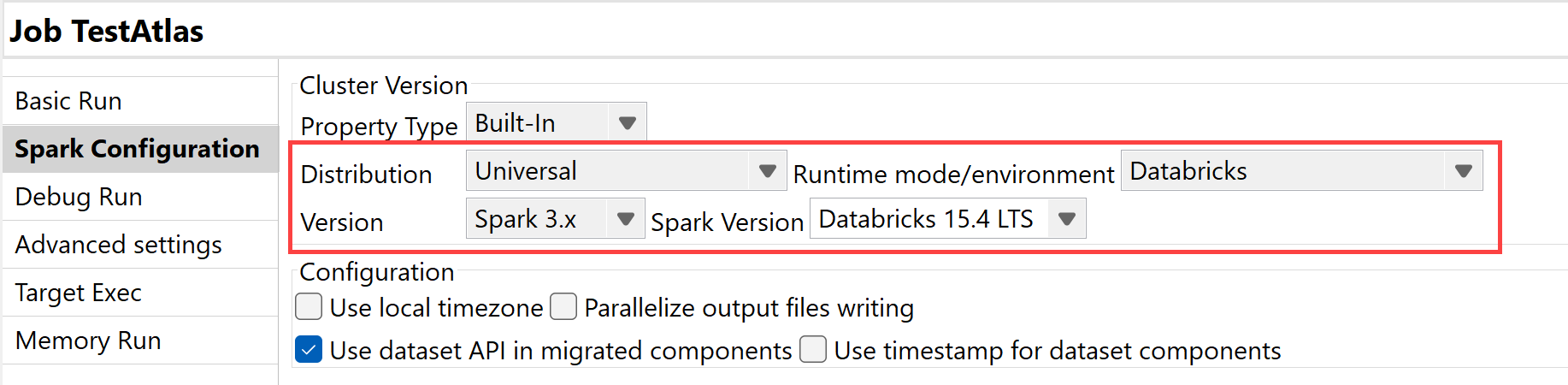

| Support for Databricks 15.4 LTS with Spark Universal 3.x |

You can now run your Spark Batch and Streaming Jobs on job and all-purpose Databricks clusters on Google Cloud Platform (GCP), AWS, and Azure using Spark Universal with Spark 3.x. You can configure it either in the Spark Configuration view of your Spark Jobs or in the Hadoop Cluster Connection metadata wizard. When you select this mode, Talend Studio is compatible with Databricks 15.4 LTS version.

|

Data Integration

| Feature | Description |
|---|---|
| New tClaudeAIClient component to use Claude models in Standard Jobs | The new tClaudeAIClient component is available in Standard Jobs and allows you to leverage Claude models and their capabilities. |
| Support for vector search capabilities for Snowflake components in Standard Jobs | The Use vectors option has been added to the tSnowflakeOutput, tSnowflakeOutputBulkExec, and tSnowflakeBulkExec components, allowing you to use vector search capabilities. |

Data Mapper

| Feature | Description |
|---|---|
| Support for array items and map entries in the SET clause in DSQL maps | In DSQL maps, you can now declare a SET variable with an array item. You can also declare a SET variable with a map entry. |