Evaluating the classification model

After you have created a classification model, you can evaluate how good it is.

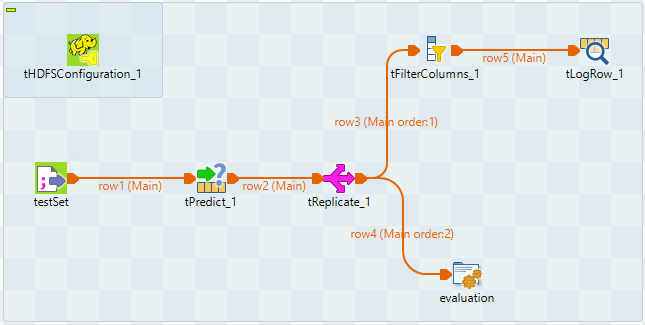

Linking the components

Procedure

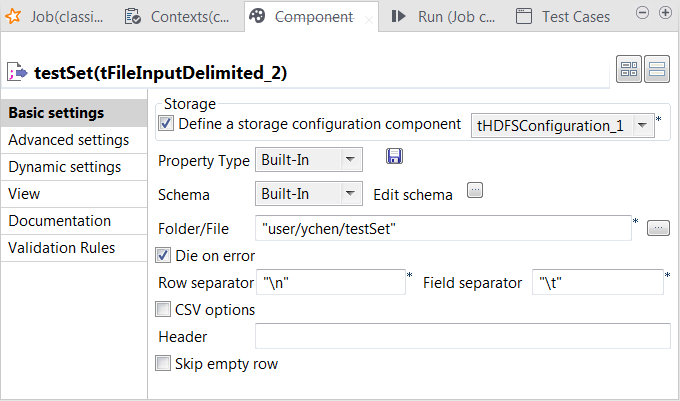

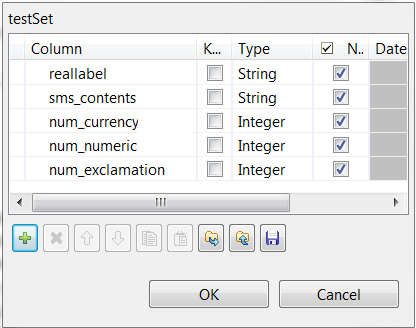

Loading the test set into the Job

Procedure

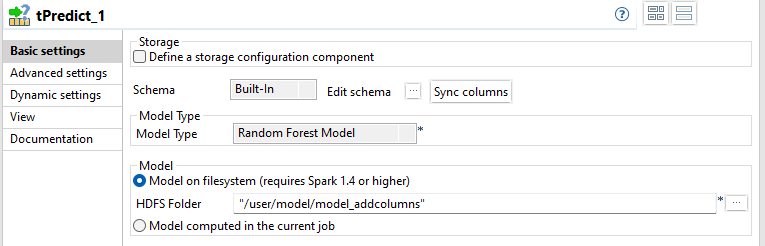

Applying the classification model

Procedure

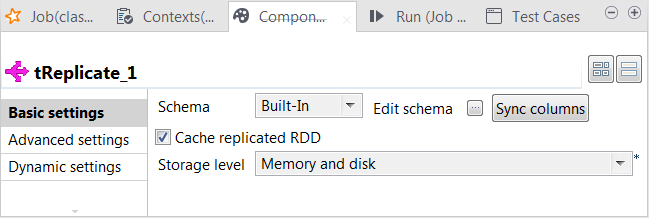

Replicating the classification result

Procedure

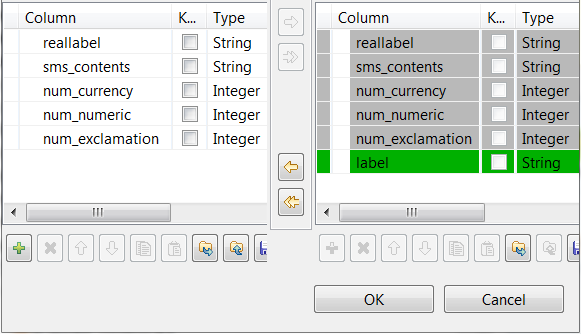

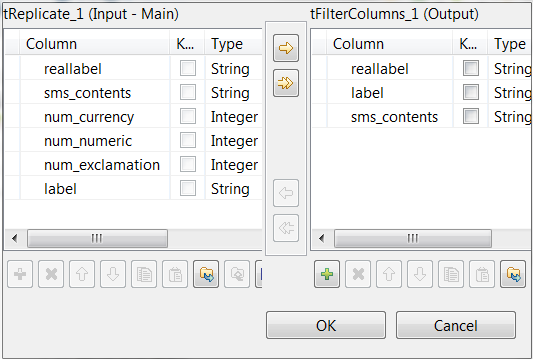

Filtering the classification result

Procedure

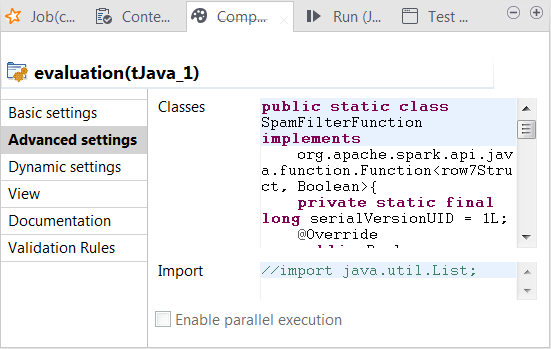

Writing the evaluation program in tJava

Procedure
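As a rough illustration of what an evaluation program of this kind might compute, the following sketch compares predicted labels with actual labels and derives accuracy, precision, recall and F1. Everything in it is an assumption made for illustration: the class name `EvaluationSketch`, the method `evaluate`, the `yes`/`no` labels, and the choice of scores are hypothetical and are not taken from the Job itself. It is written as a standalone class so that it can be run on its own; in a tJava component, only the body logic would apply, adapted to the Job's own variables.

```java
public class EvaluationSketch {

    /**
     * Hypothetical helper: returns {accuracy, precision, recall, f1}
     * for a single positive label, given parallel arrays of labels.
     */
    static double[] evaluate(String[] actual, String[] predicted, String positiveLabel) {
        if (actual.length != predicted.length) {
            throw new IllegalArgumentException("actual and predicted must have the same length");
        }

        // Confusion-matrix counters for the chosen positive label.
        int tp = 0, fp = 0, tn = 0, fn = 0;
        for (int i = 0; i < actual.length; i++) {
            boolean isPositive = positiveLabel.equals(actual[i]);
            boolean predictedPositive = positiveLabel.equals(predicted[i]);
            if (isPositive && predictedPositive) {
                tp++;
            } else if (!isPositive && predictedPositive) {
                fp++;
            } else if (isPositive) {
                fn++;
            } else {
                tn++;
            }
        }

        // Guard every ratio against a zero denominator.
        int total = tp + fp + tn + fn;
        double accuracy = total == 0 ? 0.0 : (double) (tp + tn) / total;
        double precision = (tp + fp) == 0 ? 0.0 : (double) tp / (tp + fp);
        double recall = (tp + fn) == 0 ? 0.0 : (double) tp / (tp + fn);
        double f1 = (precision + recall) == 0.0
                ? 0.0
                : 2 * precision * recall / (precision + recall);

        return new double[] {accuracy, precision, recall, f1};
    }

    public static void main(String[] args) {
        // Made-up sample data, for illustration only.
        String[] actual = {"yes", "no", "yes", "no"};
        String[] predicted = {"yes", "no", "no", "no"};

        double[] scores = evaluate(actual, predicted, "yes");
        System.out.println("accuracy  = " + scores[0]);
        System.out.println("precision = " + scores[1]);
        System.out.println("recall    = " + scores[2]);
        System.out.println("f1        = " + scores[3]);
    }
}
```

Counting the four confusion-matrix cells first keeps each score a one-line ratio, which makes it easy to add or drop a score without touching the counting loop.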

Configuring Spark connection

About this task

Repeat the operations described above. See Selecting the Spark mode.

Executing the Job

Procedure

Results

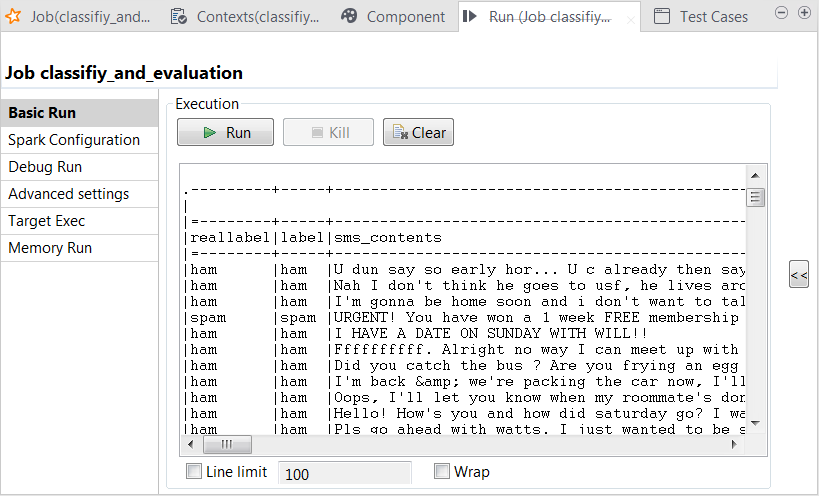

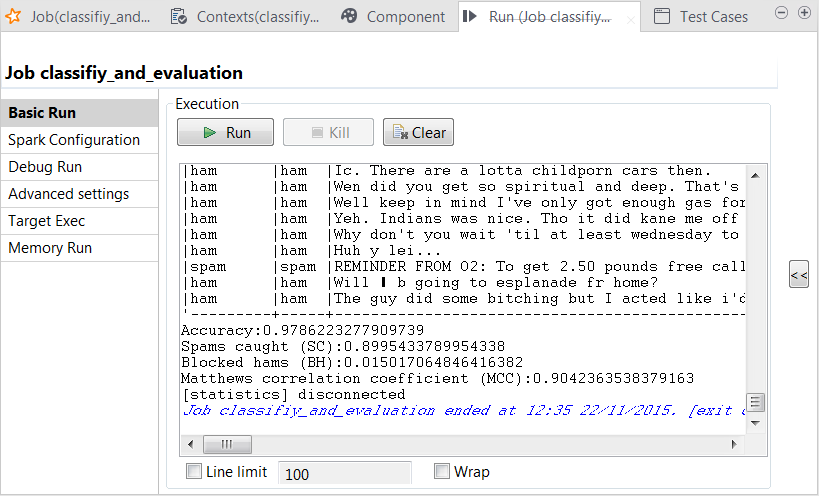

In the console of the Run view, you can read the classification result along with the actual labels:

You can also read the computed scores in the same console:

The scores indicate that the model is of good quality. You can still improve the model by continuing to tune the parameters used in tRandomForestModel and rerunning the model-creation Job with the new parameters to obtain, and then evaluate, new versions of the model.