New features

Application integration

| Feature | Description |

|---|---|

| Support for Prometheus endpoint activation through Microservice configuration | You can now use the management.endpoint.prometheus.enabled=true/false parameter in an additional properties file to activate or deactivate the Prometheus endpoint for a Microservice. For more information, see Running a Microservice. |

| Support for Batch Consumer and partitioning in cKafkaCommit component | cKafkaCommit now supports Batch Consumer and use of partitions. |

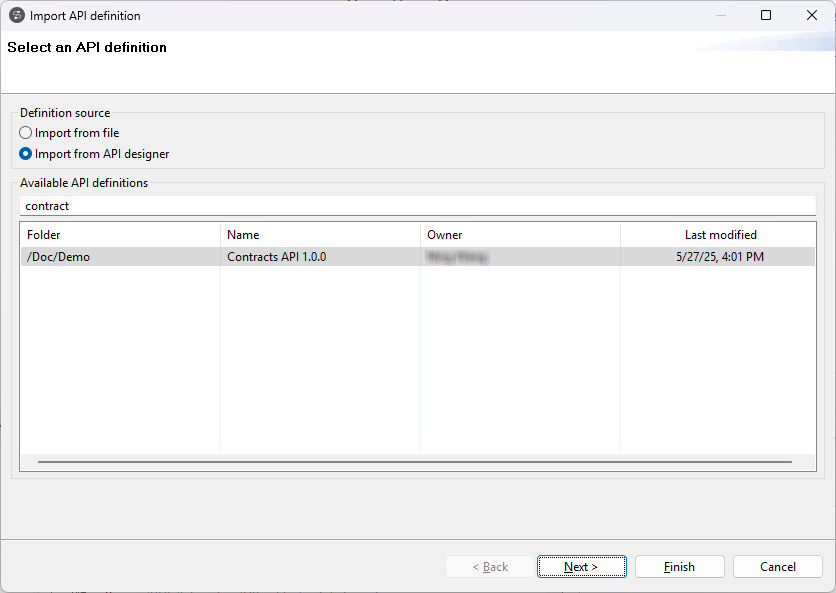

| Display the folder structure of API definitions in API import wizard | When importing an API definition from Talend Cloud API Designer, the API import wizard now reflects the folder structure of Talend Cloud API Designer. For more information, see Creating a new REST API metadata from API

Designer.

|
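As a minimal sketch of the Prometheus setting described above, the fragment below shows the single line you would add to the additional properties file. The parameter name comes from the feature description. Where that file lives and how it is passed to the Microservice is covered in Running a Microservice and is not assumed here.

```properties
# Activate the Prometheus endpoint for this Microservice.
# Set the value to false to deactivate it.
management.endpoint.prometheus.enabled=true
```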

Big Data

| Feature | Description |

|---|---|

| Support for CDP Private Cloud Base 7.1.7 with Spark Universal 3.3.x | Talend Studio now supports CDP Private Cloud Base 7.1.7 (Spark 3.2) SP1 with JDK 11 with Spark Universal 3.3.x in Spark Batch and Standard Jobs. |

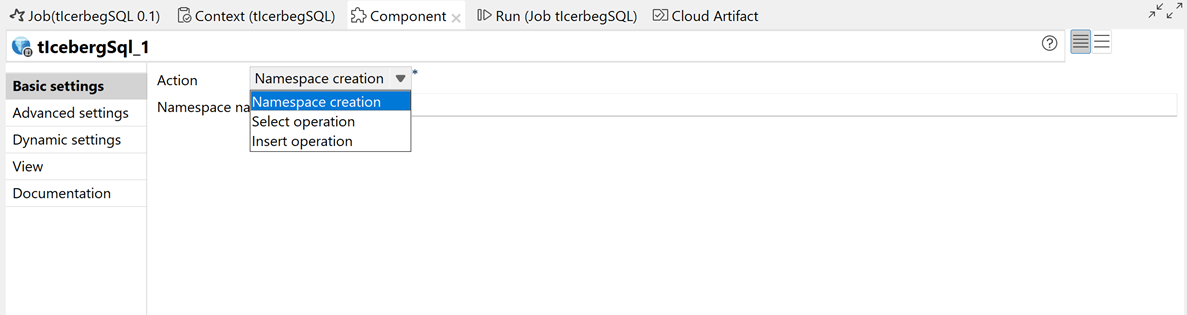

| New tIcebergSQL component in Spark Batch Jobs |

The new tIcebergSQL component is now available in Beta version in Spark Batch Jobs allowing you to execute SQL queries for Polaris and Snowflake Open Catalog managed Iceberg tables.

|

| Support for Hive 3 in Impala components | Talend Studio now supports Hive 3 in Impala components in Standard Jobs. |

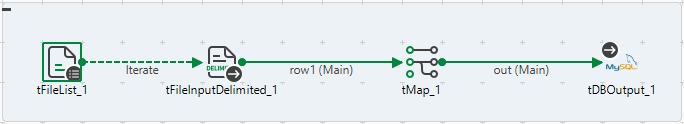

Data Integration

| Feature | Description |
|---|---|
| New tQVDOutput component in Standard Jobs | The new tQVDOutput component is now available in Standard Jobs and allows you to create QVD files. |
| New partition options in tKafkaInput in Standard Jobs | The Use offset partition option has been added to tKafkaInput, allowing you to specify the partition to read from and define the starting offset for message retrieval. |
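To illustrate what reading from a chosen partition at a chosen starting offset means, here is a toy in-memory model in Python. It is only a sketch of the concept: the `read_from` function and the `topic` dictionary are hypothetical names, and the code uses neither the Kafka client API nor the tKafkaInput implementation.

```python
def read_from(partitions, partition, start_offset):
    """Return (offset, message) pairs from one partition, beginning at start_offset."""
    log = partitions[partition]
    # Offsets are simply positions in the partition's ordered message log.
    return [(offset, log[offset]) for offset in range(start_offset, len(log))]


# Hypothetical topic with two partitions, each an ordered list of messages.
topic = {
    0: ["a0", "a1", "a2"],
    1: ["b0", "b1", "b2", "b3"],
}

# Read partition 1 only, skipping the messages at offsets 0 and 1.
print(read_from(topic, partition=1, start_offset=2))  # [(2, 'b2'), (3, 'b3')]
```

Messages in the other partition, and messages before the starting offset, are never returned.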

Data Mapper

| Feature | Description |

|---|---|

| Support for EXISTS operator in DSQL maps | The EXISTS operator is now available in DSQL maps allowing you to check if an argument is not empty nor null. The argument can be an array, a record, a primitive, a choice or a map. |

| Support for arithmetic operation between string operands representing numbers | Arithmetic operations like '2' + '1' are now calculated and supported as numeric string operands. |

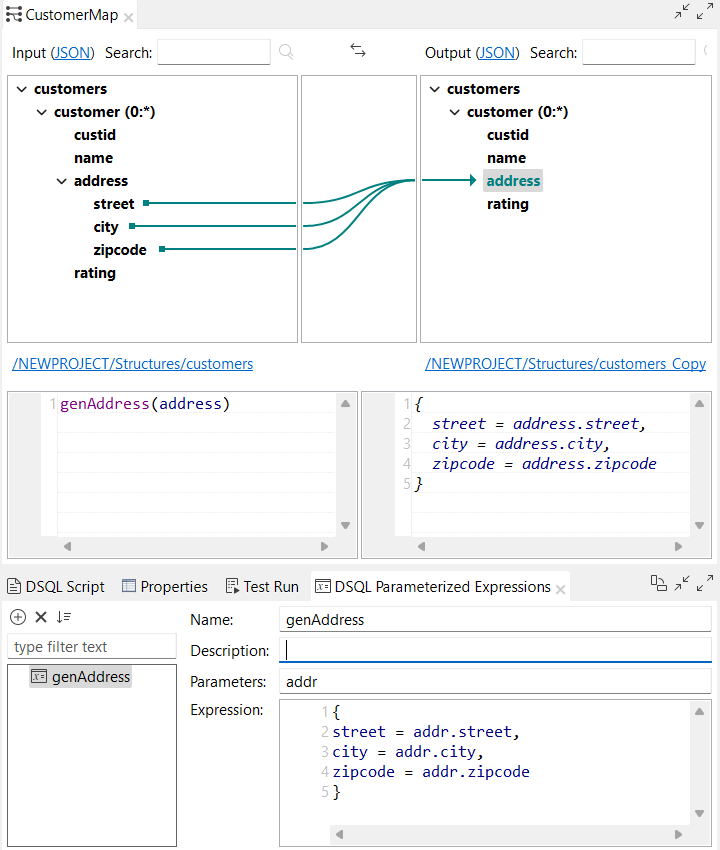

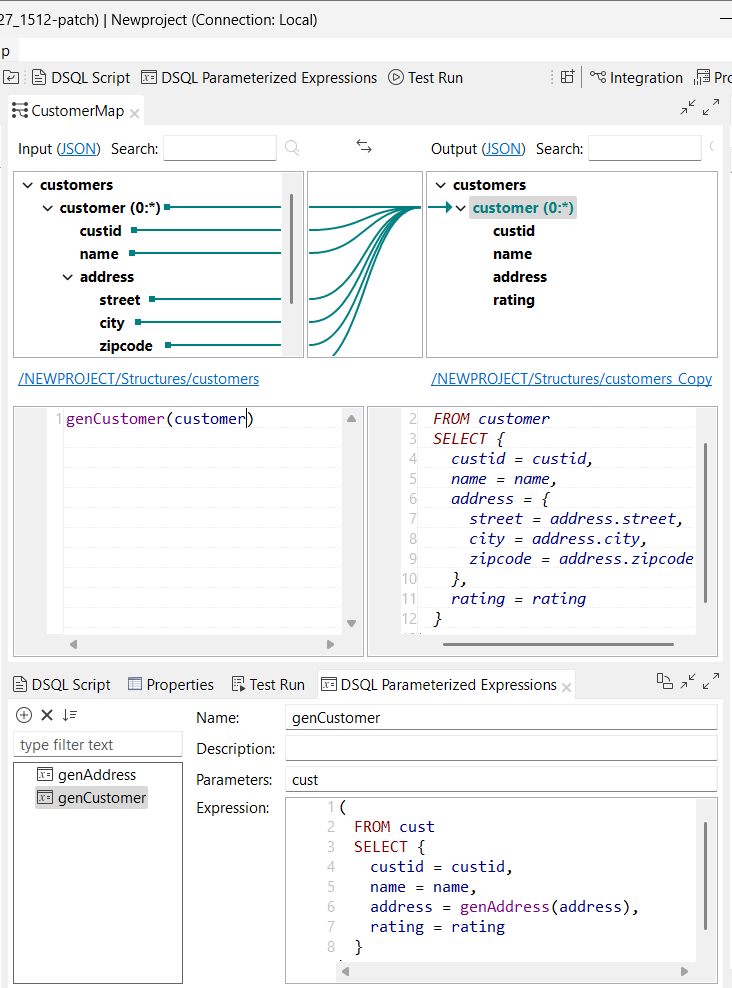

| Support for complex parameterized expressions in DSQL maps | Talend Studio

now supports complex parameterized expressions in DSQL map editor. You can now use

a block in a parameterized expression:

And you can also use a query in a parameterized expression:

|

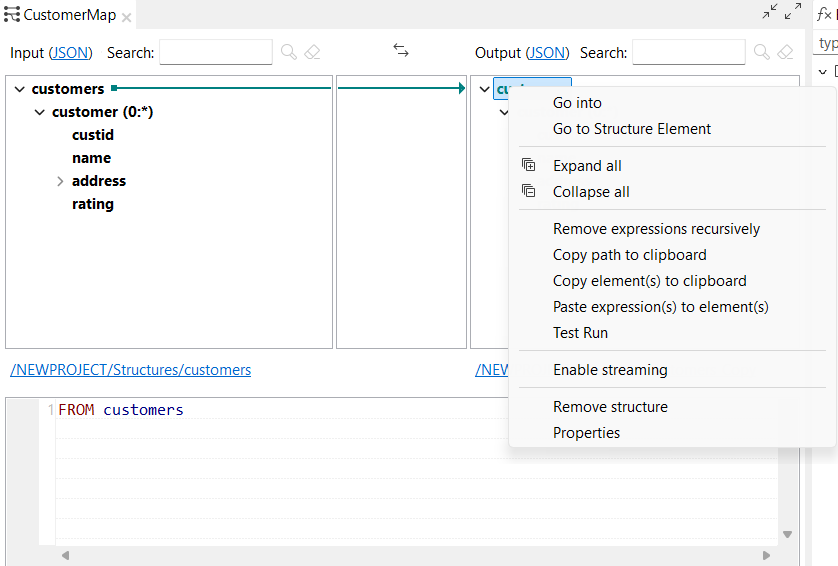

| New options to copy and paste expressions in DSQL maps | You now have the possibility to copy and paste expressions when you

right-click an output element in the DSQL map editor.

|

| Support for custom start index value in queries in DSQL maps | You can now use a custom start index value in your queries in DSQL maps by

defining an expression after the INDEX keyword. For example, you

can define your index to start at

1: |

| Support for the NOT operator in front of conditional expressions in DSQL maps | The NOT and ! operators can now be

followed by a conditional expression: |
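The EXISTS check and the custom start index can be pictured with a short Python analogy. This is not DSQL syntax and not how Talend Studio implements either feature. The `exists` and `with_index` helpers are hypothetical, and the treatment of non-null numbers and booleans as existing is an assumption, since the description does not say how such primitives are handled.

```python
from collections.abc import Sized


def exists(value):
    """Return True when value is neither null (None) nor empty."""
    if value is None:
        return False
    # Arrays, records or maps, and strings have a length; empty ones do not exist.
    if isinstance(value, Sized):
        return len(value) > 0
    # Assumption: any other non-null primitive (number, boolean) counts as existing.
    return True


def with_index(items, start):
    """Pair each item with an index that begins at the caller-chosen start value."""
    return list(enumerate(items, start=start))


print(exists([]))                        # False
print(exists({"id": 7}))                 # True
print(with_index(["a", "b"], start=1))   # [(1, 'a'), (2, 'b')]
```

The last call mirrors the example in the table, where the index is defined to start at 1.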

Data Quality

| Feature | Description |
|---|---|
| Support for new authentication methods for Snowflake connection | When connecting to Snowflake through a database connection in the repository, you can now use the key-pair and OAuth 2.0 authentication methods. |