Processing large lookup tables

This article presents a specific use case and discusses one way to handle it in Talend Studio.

In this scenario, you have a source table with hundreds of millions of records. Each source record is used to look up data in a second table that also has hundreds of millions of records and resides in a different database. The source data, combined with the lookup data, is then inserted or updated into a target table.

Assumptions

- The source table and the lookup table have a common column that can be used in the join condition.

- The source and lookup tables are in different relational database management systems (RDBMSs).

Problem description

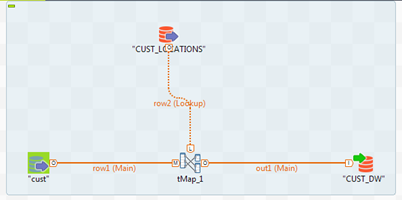

The simple Job above reads source data from the cust table and, in a tMap component, looks up the CUST_LOCATIONS table on the location_id column.
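To make the memory behavior concrete, here is a minimal Java sketch of an in-memory hash lookup of this kind. It is not Talend's generated code. The row types (Customer, Location, Joined) and the city column are hypothetical; only the location_id join column comes from the article. The whole lookup table is loaded into a HashMap before any source row is processed, so heap use grows with the size of the lookup table.

```java
import java.util.ArrayList;
import java.util.HashMap;
import java.util.List;
import java.util.Map;
import java.util.function.Consumer;

public class InMemoryLookupSketch {
    // Hypothetical row shapes: only location_id comes from the article.
    public record Customer(long custId, long locationId) {}
    public record Location(long locationId, String city) {}
    public record Joined(long custId, long locationId, String city) {}

    /**
     * Loads the whole lookup into a HashMap keyed on location_id, then streams
     * the source rows past it. Unmatched source rows are kept with a null city
     * (left outer join semantics).
     */
    public static void join(Iterable<Customer> source,
                            Iterable<Location> lookup,
                            Consumer<Joined> sink) {
        // Phase 1: every lookup row must fit in the heap at the same time.
        Map<Long, Location> byLocationId = new HashMap<>();
        for (Location loc : lookup) {
            byLocationId.put(loc.locationId(), loc); // duplicate keys: last row wins
        }
        // Phase 2: source rows are handled one at a time, so the source size
        // does not drive memory use; only the lookup map does.
        for (Customer c : source) {
            Location loc = byLocationId.get(c.locationId());
            sink.accept(new Joined(c.custId(), c.locationId(),
                                   loc == null ? null : loc.city()));
        }
    }

    public static void main(String[] args) {
        List<Customer> cust = List.of(new Customer(1, 10), new Customer(2, 99));
        List<Location> locations = List.of(new Location(10, "Paris"));
        List<Joined> out = new ArrayList<>();
        join(cust, locations, out::add);
        out.forEach(System.out::println);
    }
}
```

Because the source side is streamed, it is the lookup table, not the source table, that has to fit in memory.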

When you run this Job, it runs out of memory while loading the entire lookup data set (70 million rows) into memory. This design works for small loads, but with hundreds of millions of records in both the source and lookup tables it is very inefficient.
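A rough estimate shows why 70 million lookup rows can exhaust a typical heap. The sketch below is plain arithmetic in Java. The figure of 100 bytes per row is a hypothetical assumption standing in for the column data plus Java object and HashMap entry overhead; the real figure depends on the schema and the JVM.

```java
public class LookupMemoryEstimate {
    // Row count taken from the article; the per-row footprint is hypothetical.
    static final long LOOKUP_ROWS = 70_000_000L;
    static final long ASSUMED_BYTES_PER_ROW = 100L;

    /** Estimated heap bytes needed to hold every lookup row in memory at once. */
    static long estimateBytes(long rows, long bytesPerRow) {
        return Math.multiplyExact(rows, bytesPerRow); // throws on overflow
    }

    /** Converts bytes to gibibytes (1 GiB = 1024^3 bytes). */
    static double toGiB(long bytes) {
        return bytes / (1024.0 * 1024.0 * 1024.0);
    }

    public static void main(String[] args) {
        long bytes = estimateBytes(LOOKUP_ROWS, ASSUMED_BYTES_PER_ROW);
        System.out.printf("%,d bytes, about %.1f GiB%n", bytes, toGiB(bytes));
    }
}
```

Under that assumption, the lookup alone needs 7,000,000,000 bytes (about 6.5 GiB) of heap, and the requirement grows linearly as the lookup table approaches hundreds of millions of rows.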

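For large lookups, it is recommended to set the Store temp data option to true in the tMap settings of the lookup, so that tMap writes lookup data to temporary files on disk instead of holding all of it in memory.

In this scenario, however, even this setting is not enough.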