Batch processing large lookup tables

This article explains how to use batch processing to handle large lookup tables.

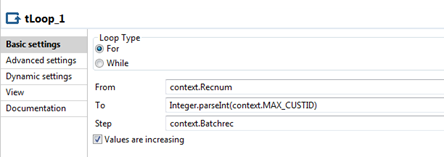

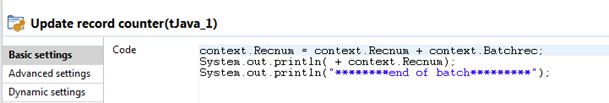

One way to handle a lookup table that is too large to hold in memory is batching. Batching processes a fixed-size subset of the records in a single run, then repeats the run iteratively on further batches until all the records have been processed.

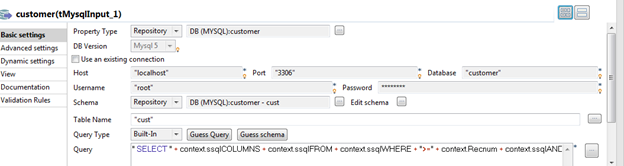

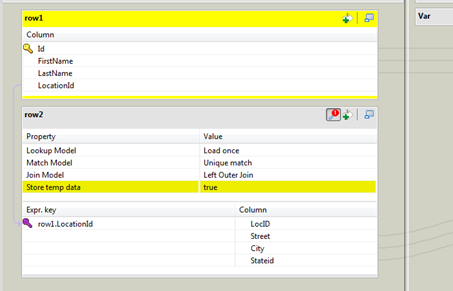

Each iteration extracts a fixed number of records from the source and lookup tables, joins them, and loads the result into the target table.
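The iteration described above can be sketched in plain Java. This is a minimal illustration, not the Job itself: the class name `BatchedJoinSketch`, the method `joinInBatches`, and the sample data are hypothetical, and the sketch assumes that batches are defined as ranges of the join key and that the join is an inner join, neither of which the article states.

```java
import java.util.ArrayList;
import java.util.HashMap;
import java.util.List;
import java.util.Map;

// Hypothetical illustration; names and data are not taken from the original Job.
class BatchedJoinSketch {

    // Joins source ids to lookup values one key range at a time, so only the
    // lookup rows of the current range are held in the in-memory map.
    static List<String> joinInBatches(List<Long> sourceIds,
                                      Map<Long, String> lookupTable,
                                      long minId, long maxId, long batchSize) {
        List<String> target = new ArrayList<>();
        for (long start = minId; start <= maxId; start += batchSize) {
            long end = Math.min(start + batchSize - 1, maxId);

            // "Extract" only the lookup rows whose key falls in this range.
            Map<Long, String> lookupBatch = new HashMap<>();
            for (Map.Entry<Long, String> e : lookupTable.entrySet()) {
                if (e.getKey() >= start && e.getKey() <= end) {
                    lookupBatch.put(e.getKey(), e.getValue());
                }
            }

            // Join the source rows of the same range and "load" the target.
            for (long id : sourceIds) {
                if (id >= start && id <= end && lookupBatch.containsKey(id)) {
                    target.add(id + "," + lookupBatch.get(id));
                }
            }
        }
        return target;
    }

    public static void main(String[] args) {
        List<Long> source = List.of(1L, 2L, 3L, 4L, 5L, 6L);
        Map<Long, String> lookup = Map.of(1L, "Paris", 3L, "Lyon", 4L, "Nice", 6L, "Lille");
        // Three batches of two keys each: [1,2], [3,4], [5,6].
        System.out.println(joinInBatches(source, lookup, 1L, 6L, 2L));
    }
}
```

In a real Job the two "extract" steps would be range-filtered queries against the source and lookup tables rather than scans over in-memory collections; the point of the sketch is only that the map built per iteration never holds more than one batch of lookup rows.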

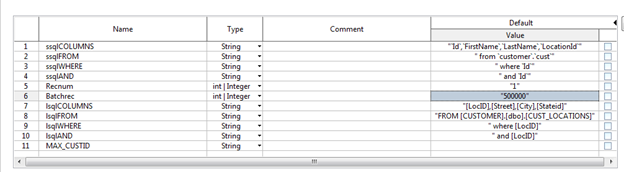

This way, the batchrec variable controls the number of records that the process holds in memory at any one time.
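As a rough guide to how batchrec translates into loop iterations, the following sketch computes the number of batches and the row range each one covers. The class `BatchPlanSketch` and its methods are hypothetical, and the sketch assumes the loop is driven by the source row count; the figures in `main` are the ones quoted for the sample run in the Results section.

```java
// Hypothetical helper; not part of the original Job.
class BatchPlanSketch {

    // Number of iterations needed to cover totalRows in batches of batchrec rows.
    static long batchCount(long totalRows, long batchrec) {
        if (batchrec <= 0) {
            throw new IllegalArgumentException("batchrec must be positive");
        }
        return (totalRows + batchrec - 1) / batchrec; // ceiling division
    }

    // Inclusive, 1-based [first, last] row positions for a 1-based iteration number.
    static long[] batchBounds(long iteration, long totalRows, long batchrec) {
        long first = (iteration - 1) * batchrec + 1;
        long last = Math.min(iteration * batchrec, totalRows);
        return new long[] {first, last};
    }

    public static void main(String[] args) {
        long totalRows = 100_000_000L; // source row count from the sample run
        long batchrec = 10_000_000L;   // batch size from the sample run
        long iterations = batchCount(totalRows, batchrec);
        for (long i = 1; i <= iterations; i++) {
            long[] bounds = batchBounds(i, totalRows, batchrec);
            System.out.println("iteration " + i + ": rows " + bounds[0] + " to " + bounds[1]);
        }
    }
}
```

A smaller batchrec lowers peak memory use at the cost of more iterations, and therefore more round trips to the source and lookup tables.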

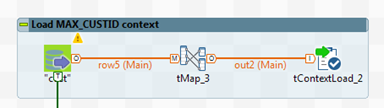

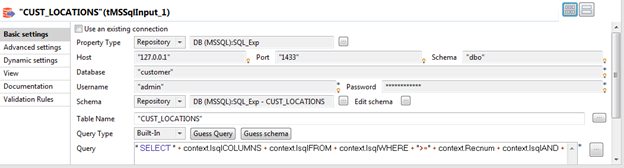

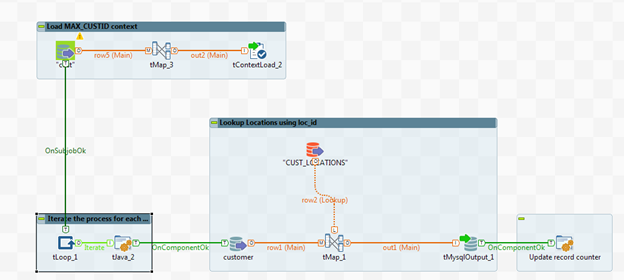

To do this, you can use context variables together with the tLoop, tContextLoad, tMap, and tJava components.

Procedure

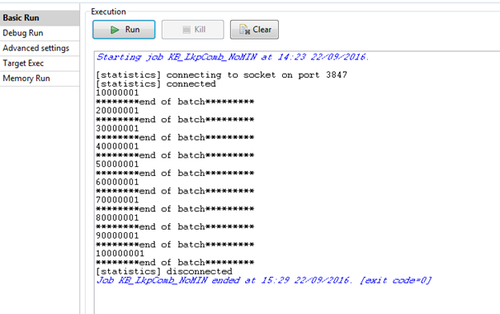

Results

Here is the log from a sample run where the source table (cust) has 100 million rows, the lookup table (CUST_LOCATIONS) has 70 million rows, and batchrec is set to "10000000".

The Job took 66 minutes to run and stayed within the memory available on the execution server, without any out-of-memory exceptions.