Data transformation

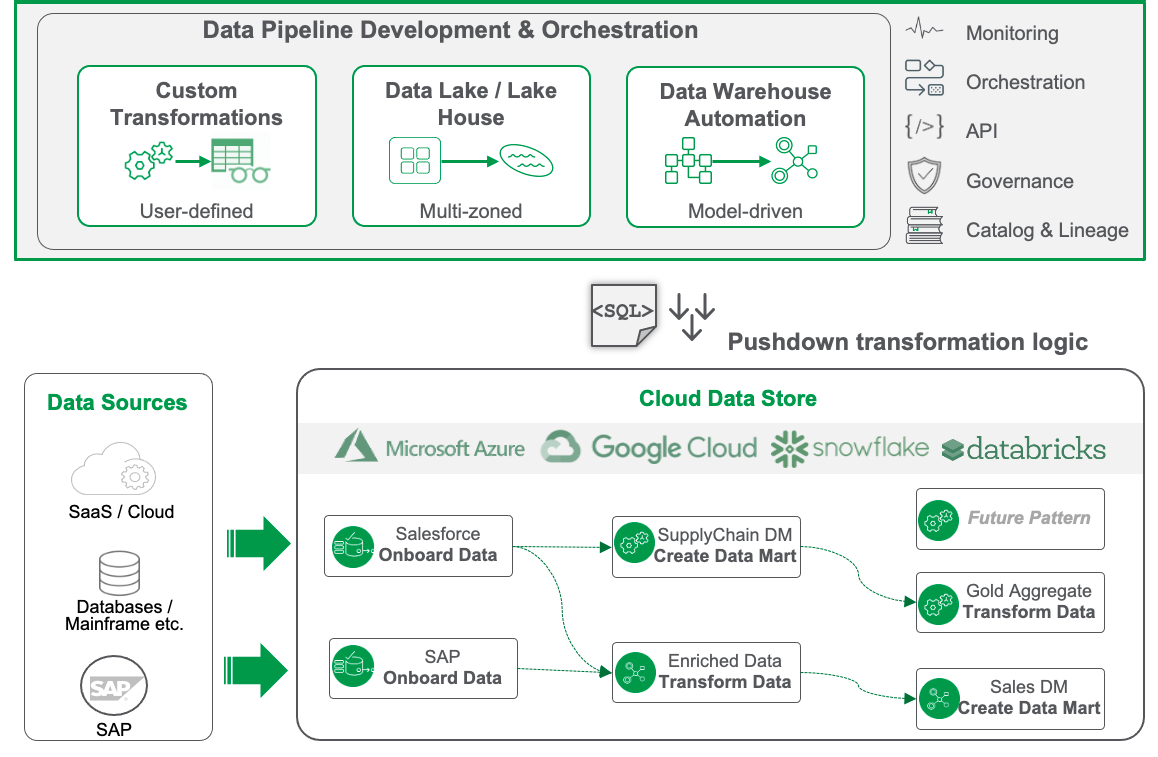

The data transformation service in Qlik Talend Data Integration provides ELT capabilities to cloud data warehouses and data lakes. It is a key part of Qlik's vision to provide our customers with a raw-to-ready data pipeline.

The data transformation service is:

Cloud-based: Customers design, deploy, and monitor data pipelines in Qlik Cloud with transformations executed via SQL in popular cloud targets.

Flexible, template-driven approach: Customers can define reusable transformations, set policies, and create custom design templates to simplify and accelerate the development of their data projects. Schema evolution is supported to remove the need to recreate pipelines and tasks as your source systems evolve.

Automated: Automation of common methodologies and DataOps best practices allows customers to operationalize their transformation workloads quickly and reliably.

Integrated: Pipeline tasks are integrated with the data movement service to transform data into analytics-ready form in your target cloud platform in near real time.

The data transformation service contains the following functionality:

- Creation of flexible, fit-for-purpose data pipelines

- Rule-based, row-level transformations

- Creation of new derived objects based on:
  - Source-to-target mappings
  - Visual transformation designer
  - AI-assisted transformations
  - Custom SQL for more complex logic

- Automated generation of star schema data marts

- Defined logical relationships between data sets

- Choice to materialize data sets as tables or create them as views

- Change data capture (CDC) support for low-latency, incremental data movement

- Push-down SQL execution to cloud data warehouse platforms (Snowflake, Azure Synapse, Google BigQuery, Microsoft SQL Server)

- Version control integration
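The CDC item in the list above refers to moving only the rows that changed rather than reloading whole tables. As a rough illustration of the general technique (not Qlik's implementation), the sketch below applies an ordered stream of change records to a target keyed by primary key. The `(operation, key, row)` record shape and the `apply_changes` name are hypothetical.

```python
from typing import Dict, Iterable, Optional, Tuple

# Hypothetical change record: (operation, primary_key, row_or_None)
Change = Tuple[str, int, Optional[dict]]

def apply_changes(target: Dict[int, dict], changes: Iterable[Change]) -> Dict[int, dict]:
    """Apply insert/update/delete change records, in order, to a keyed target."""
    for op, key, row in changes:
        if op in ("insert", "update"):
            # Upsert: an update for a missing key behaves like an insert.
            target[key] = dict(row or {})
        elif op == "delete":
            # Deleting a key that is already absent is a no-op.
            target.pop(key, None)
        else:
            raise ValueError(f"unknown operation: {op!r}")
    return target

target = {1: {"name": "Alice", "city": "Leeds"}, 2: {"name": "Bob", "city": "York"}}
changes = [
    ("update", 1, {"name": "Alice", "city": "Paris"}),
    ("delete", 2, None),
    ("insert", 3, {"name": "Chen", "city": "Oslo"}),
]
apply_changes(target, changes)
print(sorted(target))  # [1, 3]
```

Only three records are processed here regardless of how large the target is, which is why incremental change application keeps latency low compared with a full reload.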

Visual transformations with transformation flows

The transformation flow designer allows you to create a transformation flow visually, using sources, processors, and targets to define complex or simple transformations. Processors allow you to perform common ELT operations in a visual no-code environment.

Flow processors handle common transformation tasks such as joins, unions, aggregations, and filtering, among many others.
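To make the source, processor, and target idea concrete, here is a minimal Python sketch in which each processor is a function from rows to rows and a flow simply runs the processors in order. The helper names (`filter_rows`, `join_rows`, `aggregate_sum`, `run_flow`) and the sample data are hypothetical; in the product these steps are configured visually and executed as SQL in the target, not in Python.

```python
from collections import defaultdict
from typing import Any, Callable, Dict, List

Row = Dict[str, Any]
Processor = Callable[[List[Row]], List[Row]]

def filter_rows(predicate: Callable[[Row], bool]) -> Processor:
    """Keep only rows for which the predicate returns True."""
    return lambda rows: [r for r in rows if predicate(r)]

def join_rows(right: List[Row], key: str) -> Processor:
    """Inner-join incoming rows with `right` on a shared key column."""
    index: Dict[Any, List[Row]] = defaultdict(list)
    for r in right:
        index[r[key]].append(r)
    return lambda rows: [{**left, **match} for left in rows for match in index.get(left[key], [])]

def aggregate_sum(group_by: str, column: str) -> Processor:
    """Sum `column` for each distinct value of `group_by`."""
    def run(rows: List[Row]) -> List[Row]:
        totals: Dict[Any, float] = defaultdict(float)
        for r in rows:
            totals[r[group_by]] += r[column]
        return [{group_by: k, column: v} for k, v in totals.items()]
    return run

def run_flow(source: List[Row], processors: List[Processor]) -> List[Row]:
    """Pass the source rows through each processor in order; the result is the target."""
    rows = source
    for step in processors:
        rows = step(rows)
    return rows

orders = [
    {"order_id": 1, "customer_id": 10, "amount": 50.0},
    {"order_id": 2, "customer_id": 11, "amount": 5.0},
    {"order_id": 3, "customer_id": 10, "amount": 20.0},
]
customers = [{"customer_id": 10, "region": "EMEA"}, {"customer_id": 11, "region": "APAC"}]

result = run_flow(orders, [
    filter_rows(lambda r: r["amount"] >= 10),
    join_rows(customers, "customer_id"),
    aggregate_sum("region", "amount"),
])
print(result)  # [{'region': 'EMEA', 'amount': 70.0}]
```

Because every processor has the same input and output shape, steps can be added, removed, or reordered freely, which mirrors how processors are chained in a visual flow.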

Transformation flows also offer advanced data cleansing, allowing businesses to identify and correct data quality issues such as missing values, duplicate entries, and inconsistencies. This helps keep their data accurate and reliable, leading to better insights and decision-making.
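A minimal sketch of the kinds of cleansing rules described above, written over plain Python dictionaries: trimming whitespace, treating blanks as missing, filling defaults, normalizing casing, and dropping duplicate keys. The `cleanse` function and its parameters are hypothetical illustrations, not product settings.

```python
from typing import Dict, Iterable, List, Optional

Row = Dict[str, Optional[str]]

def cleanse(rows: Iterable[Row], key: str, defaults: Dict[str, str],
            upper_cols: Iterable[str] = ()) -> List[Row]:
    """Trim text, fill missing values, normalize casing, and drop duplicate keys."""
    upper = set(upper_cols)
    seen = set()
    cleaned: List[Row] = []
    for row in rows:
        fixed: Row = {}
        for col, value in row.items():
            if isinstance(value, str):
                value = value.strip() or None  # trim; blank strings become missing
            if value is None:
                value = defaults.get(col)      # fill missing values from defaults
            if isinstance(value, str) and col in upper:
                value = value.upper()          # normalize inconsistent casing
            fixed[col] = value
        if fixed.get(key) in seen:
            continue                           # duplicate entry: keep the first occurrence
        seen.add(fixed.get(key))
        cleaned.append(fixed)
    return cleaned

raw = [
    {"id": "1", "name": " Alice ", "country": "uk"},
    {"id": "2", "name": "Bob", "country": ""},
    {"id": "1", "name": "Alice", "country": "UK"},
]
cleaned_rows = cleanse(raw, key="id", defaults={"country": "N/A"}, upper_cols={"country"})
print(cleaned_rows)
# [{'id': '1', 'name': 'Alice', 'country': 'UK'}, {'id': '2', 'name': 'Bob', 'country': 'N/A'}]
```

Keeping the first occurrence of a duplicate is only one possible rule; "latest record wins" or merging fields can be equally valid depending on the data.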

If a single visual transformation cannot do what you need, you can use one transformation as the source of another, layering more advanced logic without hand-coding all of it.
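The layering idea can be sketched with Python's built-in `sqlite3` module standing in for a cloud target (an assumption for illustration; the table and view names are hypothetical). The first transformation is created as a view, and a second transformation uses it as its source and is materialized as a table.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
    CREATE TABLE orders (order_id INTEGER, customer_id INTEGER, amount REAL, status TEXT);
    INSERT INTO orders VALUES (1, 10, 50.0, 'shipped'), (2, 11, 5.0, 'cancelled'),
                              (3, 10, 20.0, 'shipped'), (4, 12, 30.0, 'shipped');

    -- First transformation: drop cancelled orders, created as a view.
    CREATE VIEW valid_orders AS
        SELECT order_id, customer_id, amount FROM orders WHERE status <> 'cancelled';

    -- Second transformation: uses the first as its source, materialized as a table.
    CREATE TABLE customer_totals AS
        SELECT customer_id, SUM(amount) AS total_amount
        FROM valid_orders
        GROUP BY customer_id;
""")
rows = conn.execute(
    "SELECT customer_id, total_amount FROM customer_totals ORDER BY customer_id"
).fetchall()
print(rows)  # [(10, 70.0), (12, 30.0)]
```

A view re-runs its query each time it is read, while a table stores the result and must be refreshed when its source changes; this is the same trade-off behind the option to create data sets as tables or views.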

SQL transformations

For operations that visual transformations do not support, you can use SQL transformations, which use SELECT statements to shape the data into the required output. Because the SQL dialect is determined by the target platform, the exact functionality available varies by platform.
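As a sketch of what a SQL transformation looks like, the example below uses `sqlite3` as a stand-in target with a hypothetical `customers` table: the transformation is a single SELECT whose result set is the output data set.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
    CREATE TABLE customers (id INTEGER, first_name TEXT, last_name TEXT, lifetime_value REAL);
    INSERT INTO customers VALUES (1, 'Ada', 'Lovelace', 1200.0), (2, 'Alan', 'Turing', 300.0);
""")

# The transformation itself is one SELECT; its result set is the output data set.
transform_sql = """
    SELECT id,
           first_name || ' ' || last_name AS full_name,  -- ANSI/SQLite string concatenation
           CASE WHEN lifetime_value >= 1000 THEN 'gold' ELSE 'standard' END AS tier
    FROM customers
    ORDER BY id
"""
output = conn.execute(transform_sql).fetchall()
print(output)  # [(1, 'Ada Lovelace', 'gold'), (2, 'Alan Turing', 'standard')]
```

The `||` operator works in SQLite and Snowflake, whereas SQL Server has traditionally used `+` or `CONCAT()` for the same job; differences like this are why the available SQL depends on the target platform.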

AI-assisted SQL transformations

AI-assisted transformations combine the metadata from your data set with your plain-English request to generate a SQL transformation using generative AI (Gen-AI). Because they are aware of the underlying target, they generate SQL syntax specific to that target. You can then use the generated transformation as-is or modify it; either way, review the generated SQL to make sure Gen-AI has correctly interpreted your request. This feature is disabled by default and depends on the availability of Gen-AI features in your chosen region.

Third-party data transformation

Third-party data transformation in Qlik Talend Data Integration refers to registering existing data that external tools (including Qlik Replicate and Talend Studio) have already landed in the chosen cloud platform.

This means customers can build workflows on top of existing data and incorporate it into data pipelines without duplicating existing processes or consuming the data twice. This includes creating transformation tasks, cleansing data, and automating the data warehouse.

Use cases for third-party transformation include:

A temporary process during migration from legacy tools to Qlik Talend Data Integration

Leveraging an existing cloud data warehouse or data lake for a new requirement

Allowing Qlik Talend Data Integration to work with a proprietary solution where connectivity is not available

Third-party transformation supports key principles of Qlik: leave the data where it is, register it, understand it, make it qualitative, and start delivering it.

Integrating your data projects with Version Control

Qlik Talend Data Integration supports integration between your data projects and Git for version control. This lets you manage data project life cycles the same way you manage other development assets. For example, the production data project could live on the "Main" branch while enhancements are developed in a Dev version of the project on the "Dev" branch. Data connections are not stored in the Git project; instead, they are mapped to the project through bindings, which ensures each project picks up the right connection (for example, Dev, Test, or Production).
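The binding idea can be sketched as follows, assuming a project that refers only to logical connection names while a separate, per-environment mapping supplies the real connections. All names, hosts, and the `resolve` helper are hypothetical; this is not the format Qlik uses to store bindings.

```python
from dataclasses import dataclass
from typing import Dict

@dataclass(frozen=True)
class Connection:
    name: str
    host: str

# Hypothetical: the project stored in Git refers only to logical connection names.
PROJECT_CONNECTIONS = ["crm_source", "warehouse_target"]

# Hypothetical bindings, kept outside Git, map each logical name to a real connection.
BINDINGS: Dict[str, Dict[str, Connection]] = {
    "Dev": {
        "crm_source": Connection("crm-dev", "crm-dev.example.com"),
        "warehouse_target": Connection("dw-dev", "dw-dev.example.com"),
    },
    "Production": {
        "crm_source": Connection("crm-prod", "crm.example.com"),
        "warehouse_target": Connection("dw-prod", "dw.example.com"),
    },
}

def resolve(environment: str) -> Dict[str, Connection]:
    """Return the concrete connection for each logical name in the given environment."""
    bindings = BINDINGS[environment]
    missing = [n for n in PROJECT_CONNECTIONS if n not in bindings]
    if missing:
        raise KeyError(f"unbound connections in {environment}: {missing}")
    return {n: bindings[n] for n in PROJECT_CONNECTIONS}

print(resolve("Dev")["crm_source"].host)  # crm-dev.example.com
```

Because the project definition never contains hosts or credentials, the same branch content can be promoted from Dev to Production and only the binding lookup changes.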

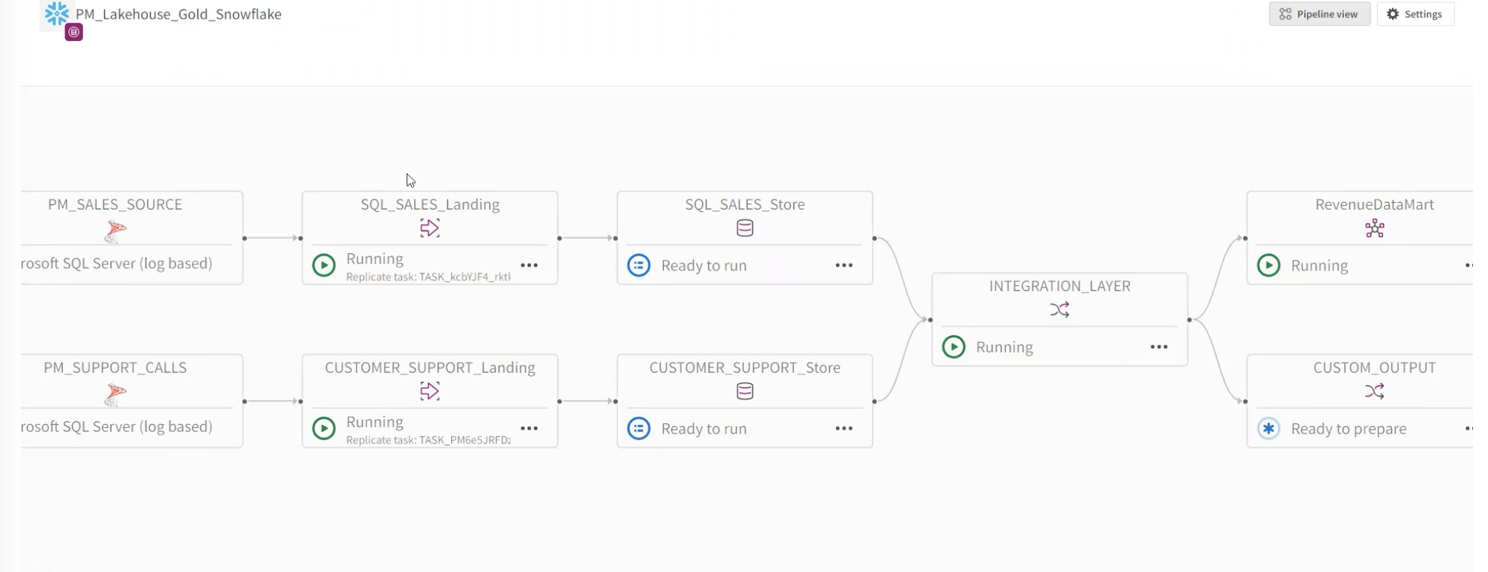

Orchestrating your Data Projects

While Qlik Talend Data Integration supports basic scheduling of most data tasks, customers sometimes need to integrate their data projects into their own workflows. To support this, Qlik has released a set of REST APIs for Data Integration Projects that enable integration with external workflows.

These APIs provide full control of Data Projects and their associated tasks, and support many customer use cases such as running CDC only at certain times or disabling tasks during maintenance on sources and targets. You can also create, import, and delete projects, or change a project's configuration. Qlik will continue to expand API support for Qlik Talend Data Integration to cover all customer use cases.
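A minimal sketch of the logic an external scheduler might run for the "run CDC only at certain times" and "disable during maintenance" use cases. The `start_task` and `stop_task` callbacks are hypothetical placeholders for calls to the Qlik Talend Data Integration REST APIs; endpoint paths and authentication are deliberately omitted and should be taken from Qlik's API reference.

```python
from datetime import time
from typing import Callable

def cdc_should_run(now: time, window_start: time, window_end: time, in_maintenance: bool) -> bool:
    """True if `now` is inside the allowed CDC window and no maintenance is in progress.
    Handles windows that cross midnight (for example 22:00 to 06:00)."""
    if in_maintenance:
        return False
    if window_start <= window_end:
        return window_start <= now < window_end
    return now >= window_start or now < window_end

def reconcile(running: bool, should_run: bool,
              start_task: Callable[[], None], stop_task: Callable[[], None]) -> str:
    """Invoke the hypothetical start/stop callbacks only when the state must change."""
    if should_run and not running:
        start_task()
        return "started"
    if not should_run and running:
        stop_task()
        return "stopped"
    return "unchanged"

# Usage: a task that is currently stopped, checked at 23:30 against a 22:00-06:00 window.
calls = []
action = reconcile(
    running=False,
    should_run=cdc_should_run(time(23, 30), time(22, 0), time(6, 0), in_maintenance=False),
    start_task=lambda: calls.append("start"),
    stop_task=lambda: calls.append("stop"),
)
print(action, calls)  # started ['start']
```

Separating the decision (`cdc_should_run`) from the side effect (`reconcile`) keeps the scheduling rules testable without calling any external API.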

Cross-project pipelines

Sometimes the same logic applies to multiple data sets. For example, an organization with multiple regional deployments of its systems may wish to reuse a pipeline to transform the data into an enterprise data lake or warehouse. To support these use cases, Qlik has introduced cross-project pipelines. To use a cross-project pipeline, you must have Can Consume or greater access to the pipeline, and the pipeline must be for the same data platform.

Knowledge Marts

Traditionally, data transformation as a discipline has focused on two key use cases:

- Transforming data into a format suitable to be used in downstream operational systems

- Transforming data into a format suitable for Analytics & reporting

However, the emergence of generative AI has created demand for a new type of transformation: converting data into formats suitable for storing in knowledge bases consumed by Gen-AI systems. This requires design and curation, along with the ability to ingest different types of data (unstructured and structured) into the same vector store. Qlik has delivered new capabilities to meet these requirements, which we call Knowledge Marts.

A Knowledge Mart is a collection of curated (designed, processed) documents that are:

- Domain-Specific – Focused on a particular subject, industry, or use case

- Curated & Structured – Organizes knowledge to optimize search and retrieval.

- Vectorized & Indexed – Stores embeddings in a vector database for semantic search.

- AI-Optimized – Enables LLMs to retrieve relevant, contextualized data for better responses.

- Incremental & Updatable – Refreshed incrementally with new knowledge.
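To illustrate the "Vectorized & Indexed" and "Incremental & Updatable" properties, here is a toy in-memory vector store that ranks documents by cosine similarity and supports incremental upserts. It is a sketch under loud assumptions: the three-dimensional vectors are hand-written stand-ins for real embeddings produced by a model, and `ToyVectorStore` is hypothetical, not a Qlik or vector-database API.

```python
import math
from typing import Dict, List, Tuple

Vector = List[float]

def cosine(a: Vector, b: Vector) -> float:
    """Cosine similarity between two equal-length vectors (0.0 if either is all zeros)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0

class ToyVectorStore:
    """In-memory stand-in for a vector database: doc_id -> (embedding, text)."""

    def __init__(self) -> None:
        self._docs: Dict[str, Tuple[Vector, str]] = {}

    def __len__(self) -> int:
        return len(self._docs)

    def upsert(self, doc_id: str, embedding: Vector, text: str) -> None:
        # Incremental update: new ids are added, existing ids are replaced in place.
        self._docs[doc_id] = (embedding, text)

    def delete(self, doc_id: str) -> None:
        self._docs.pop(doc_id, None)

    def search(self, query: Vector, k: int = 2) -> List[Tuple[str, float]]:
        # Brute-force ranking of every document by similarity to the query, highest first.
        scored = [(doc_id, cosine(query, emb)) for doc_id, (emb, _) in self._docs.items()]
        return sorted(scored, key=lambda s: s[1], reverse=True)[:k]

store = ToyVectorStore()
# Hand-written 3-dimensional "embeddings", purely for illustration.
store.upsert("returns-policy", [0.9, 0.1, 0.0], "Items can be returned within 30 days.")
store.upsert("shipping-times", [0.1, 0.9, 0.0], "Standard shipping takes 3-5 days.")
store.upsert("returns-policy", [0.8, 0.2, 0.1], "Items can be returned within 60 days.")  # refreshed
print(store.search([1.0, 0.0, 0.0], k=1)[0][0])  # returns-policy
```

Real vector databases replace the brute-force scan with an approximate nearest-neighbour index, but the upsert-then-search contract is the same idea.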

By building Knowledge Mart functionality into our Data Integration solutions, customers can apply the skills and experience they already have from traditional data transformation use cases to Generative AI use cases.