Profiling an HDFS file

From the Profiling perspective of Talend Studio, you can generate a column analysis with simple statistics indicators on an HDFS file via a Hive connection.

Creating a profiling analysis on an HDFS file involves the following steps:

- Create a connection to a Hadoop cluster.

- Create a connection to a Hive server.

This step is optional: you are prompted to create the Hive connection when you create the connection to the HDFS file.

-

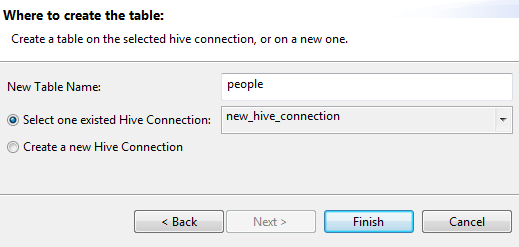

Create a connection to an HDFS file.

This step guides you through creating a Hive external table, which leaves the data in the file and creates only a table definition in the Hive metastore. Talend Studio can then run SQL queries on the data in the file through the Hive connection.

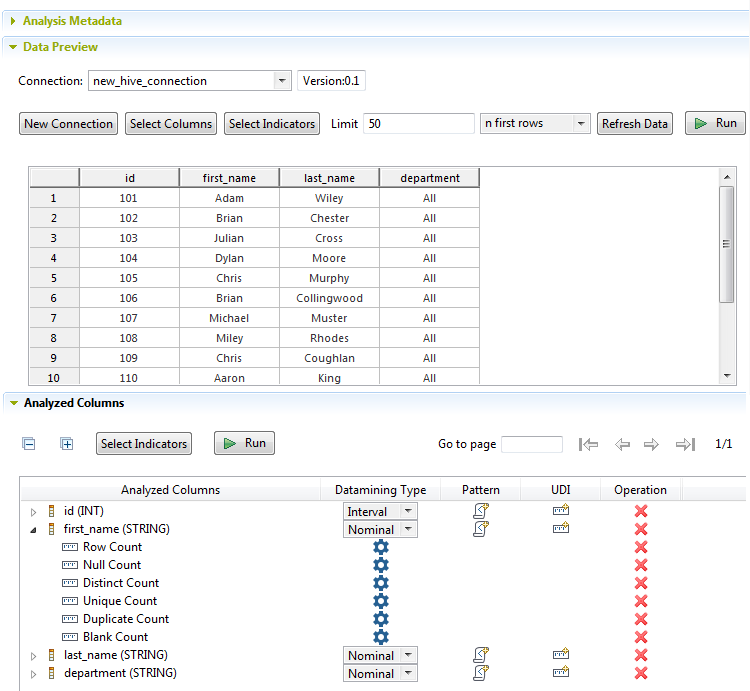

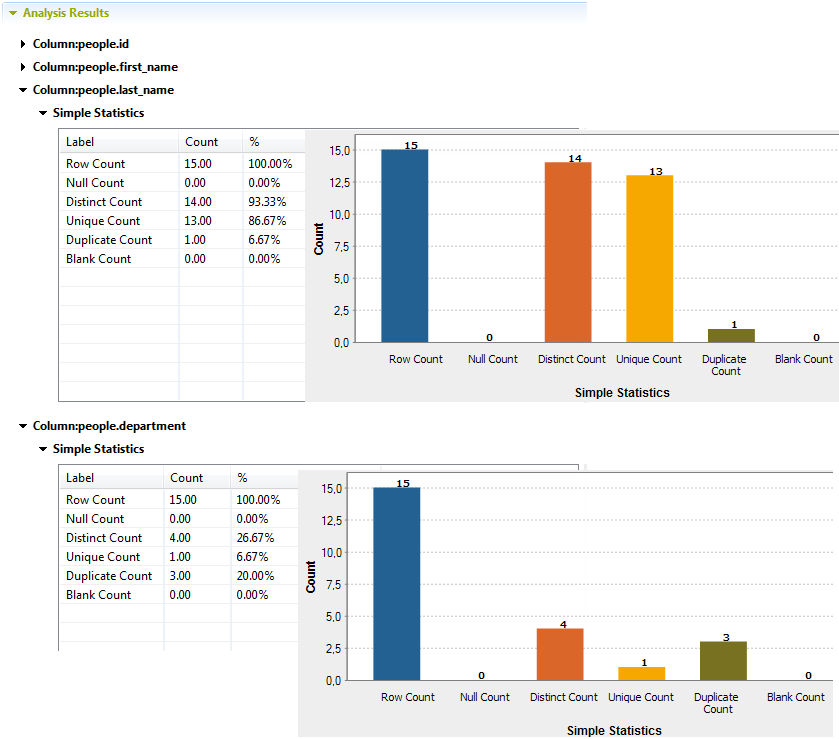

- Create a column analysis with simple indicators on the Hive table.

You can then modify the analysis settings and add other indicators as needed. You can also create other analyses on this HDFS file later by using the same Hive table.

The following file formats are supported:

- TXT

- CSV

- Parquet, with a flat structure
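The following Python sketch illustrates what the HDFS connection step produces. It builds the kind of HiveQL `CREATE EXTERNAL TABLE` statement that defines a Hive table over files in HDFS.

This is not the statement Talend Studio generates. The function `external_table_ddl`, the table name, the columns, and the HDFS directory are all hypothetical. In the sketch, TXT and CSV files are described with a delimited row format, and Parquet files are declared with `STORED AS PARQUET`.

```python
from typing import List, Tuple


def external_table_ddl(
    table: str,
    columns: List[Tuple[str, str]],
    hdfs_dir: str,
    file_format: str = "TEXTFILE",
    delimiter: str = ",",
) -> str:
    """Return a HiveQL statement defining an external table over HDFS files.

    Hive records only the schema in its metastore; the data stays in the
    files under `hdfs_dir` (Hive expects a directory here, not a single file).
    Hypothetical helper for illustration only.
    """
    col_defs = ",\n  ".join(f"`{name}` {hive_type}" for name, hive_type in columns)
    fmt = file_format.upper()
    lines = [f"CREATE EXTERNAL TABLE IF NOT EXISTS `{table}` (\n  {col_defs}\n)"]
    if fmt == "TEXTFILE":
        # TXT and CSV files: split each line into columns on the delimiter.
        lines.append(f"ROW FORMAT DELIMITED FIELDS TERMINATED BY '{delimiter}'")
    # Parquet files carry their own schema and encoding, so no ROW FORMAT clause.
    lines.append(f"STORED AS {fmt}")
    lines.append(f"LOCATION '{hdfs_dir}'")
    return "\n".join(lines)


if __name__ == "__main__":
    # Hypothetical table, columns and HDFS directory.
    print(external_table_ddl(
        "customers",
        [("id", "INT"), ("name", "STRING"), ("country", "STRING")],
        "/user/talend/customers",
    ))
```

Because the table is external, dropping it in Hive removes by default only the metastore definition. The files in HDFS are left in place.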

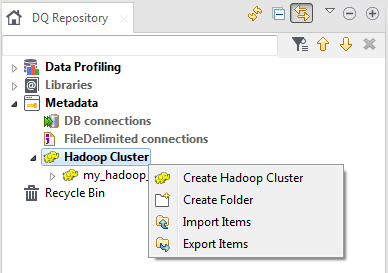

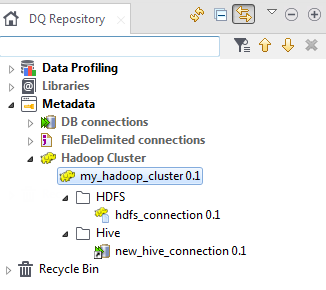

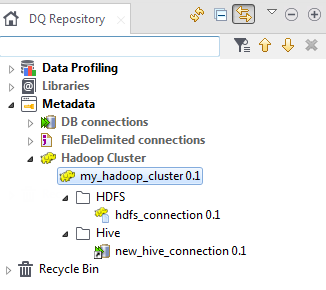

Creating a connection to a Hadoop cluster

Before you begin

- You have selected the Profiling perspective.

- You have the proper access permissions for the Hadoop distribution and its HDFS.

Procedure

Results

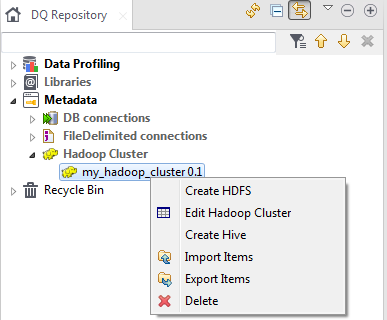

Creating a connection to Hive

Before you begin

You have created a connection to the Hadoop distribution.

Procedure

Results

For more information about creating Hive connections, see Centralizing Hive metadata.

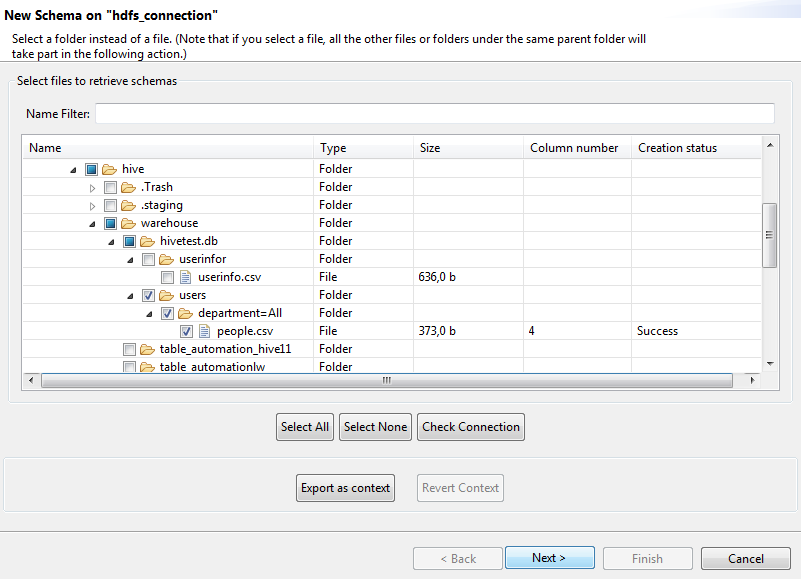

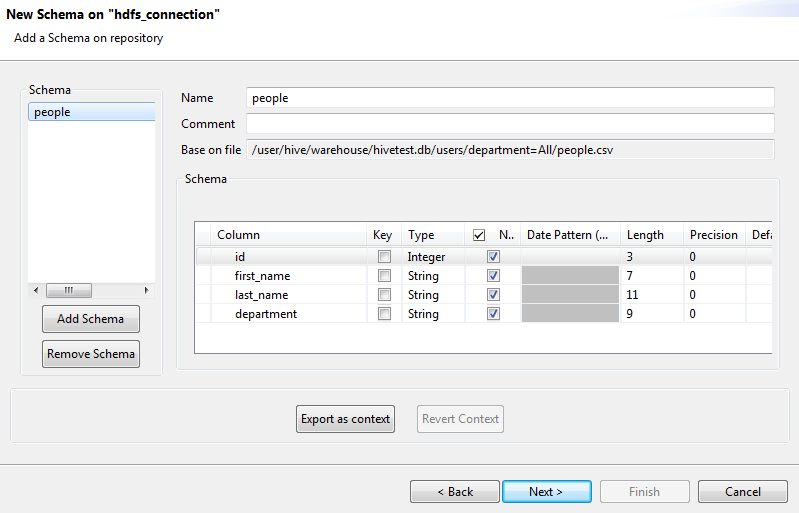

Creating a connection to an HDFS file

Before you begin

- You have selected the Profiling perspective.

- You have created a connection to the Hadoop distribution.

Procedure

Results

For more information about creating HDFS connections, see Centralizing HDFS metadata.

Creating a profiling analysis on the HDFS file via a Hive table

Before you begin

- You have selected the Profiling perspective.

- You have created a connection to the Hadoop distribution and the HDFS file.

About this task

The following file formats are supported:

- TXT

- CSV

- Parquet, with a flat structure
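The following Python sketch makes the indicators of a simple column analysis concrete. It computes counts of the same kind for a single column held in memory.

Talend Studio computes its indicators through SQL queries over the Hive connection. This sketch only illustrates what each count means, under assumed definitions:

- A null is represented by `None`.
- A blank is an empty or whitespace-only string.
- The duplicate count counts distinct values that occur more than once.

The function name `simple_statistics` and the sample column are hypothetical.

```python
from collections import Counter
from typing import Dict, Iterable, Optional


def simple_statistics(values: Iterable[Optional[str]]) -> Dict[str, int]:
    """Compute simple-statistics-style counts for one column (hypothetical helper).

    `None` stands for a SQL NULL; a blank is a non-null string that is empty
    or whitespace-only. Definitions are assumptions for illustration.
    """
    rows = list(values)
    non_null = [v for v in rows if v is not None]
    counts = Counter(non_null)
    return {
        "row_count": len(rows),
        "null_count": len(rows) - len(non_null),
        "blank_count": sum(1 for v in non_null if v.strip() == ""),
        # Number of different non-null values.
        "distinct_count": len(counts),
        # Values that occur exactly once.
        "unique_count": sum(1 for c in counts.values() if c == 1),
        # Values that occur more than once, so distinct = unique + duplicate.
        "duplicate_count": sum(1 for c in counts.values() if c > 1),
    }


if __name__ == "__main__":
    # Hypothetical "country" column read from the Hive table.
    print(simple_statistics(["FR", "US", "FR", None, "", "DE"]))
```

In SQL terms, `row_count` corresponds to `COUNT(*)`. `distinct_count` corresponds to `COUNT(DISTINCT col)`, which also ignores NULLs.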