Editing the converted Job

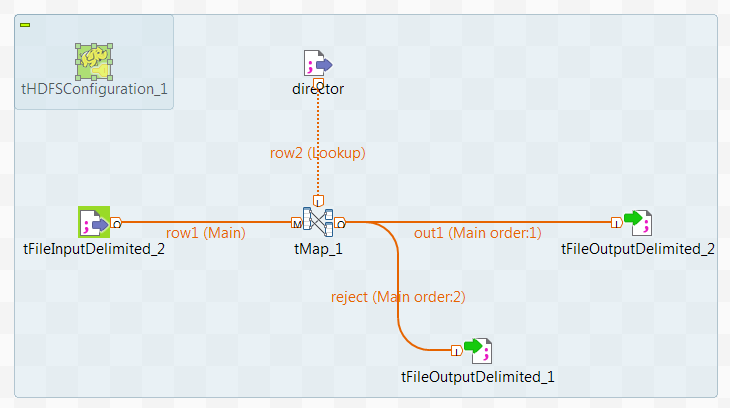

Update the components to finalize a data transformation process that runs in the Spark Streaming framework.

A Kafka cluster, rather than DBFS, provides the streaming movie data to the Job. The director data is still ingested from DBFS in the lookup flow.
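The relationship between the two flows can be sketched in plain Python. This is only an illustration of the idea, not the code the Job generates: a static lookup table is built once and each incoming micro-batch of movie records is joined against it. The field names (`title`, `director_id`, `id`, `name`), the sample records, and the choice to drop unmatched movies are all assumptions made for this sketch.

```python
from typing import Dict, Iterable, Iterator, List

# Hypothetical schemas, not taken from the Job itself:
# a movie record carries "title" and "director_id";
# a director record carries "id" and "name".
Movie = Dict[str, str]
Director = Dict[str, str]


def load_director_lookup(directors: Iterable[Director]) -> Dict[str, str]:
    """Build the static lookup table once (stands in for the DBFS lookup flow)."""
    return {d["id"]: d["name"] for d in directors}


def enrich_batches(
    batches: Iterable[List[Movie]], lookup: Dict[str, str]
) -> Iterator[List[Movie]]:
    """Join each micro-batch of movies (stands in for the Kafka main flow)
    against the static lookup. Unmatched movies are dropped, which is an
    assumption of this sketch (inner-join behavior)."""
    for batch in batches:
        yield [
            {**movie, "director_name": lookup[movie["director_id"]]}
            for movie in batch
            if movie["director_id"] in lookup
        ]


# Made-up sample data for illustration only.
directors = [{"id": "1", "name": "Director A"}, {"id": "2", "name": "Director B"}]
micro_batches = [
    [{"title": "Movie X", "director_id": "1"}],
    [{"title": "Movie Y", "director_id": "2"}, {"title": "Movie Z", "director_id": "9"}],
]

lookup = load_director_lookup(directors)
results = list(enrich_batches(micro_batches, lookup))
```

In the actual Job, the streaming input and the lookup are handled by the Studio components and run on the Databricks cluster; the sketch only mirrors the shape of the data flow.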

Before you begin

- The Databricks cluster to be used has been properly configured and is running.

- The administrator of the cluster has granted the username you will use read/write permissions on the related data and directories in DBFS and the Azure ADLS Gen2 storage system.

Procedure

Results

The Run view opens automatically in the lower part of the Studio and shows the execution progress of this Job.

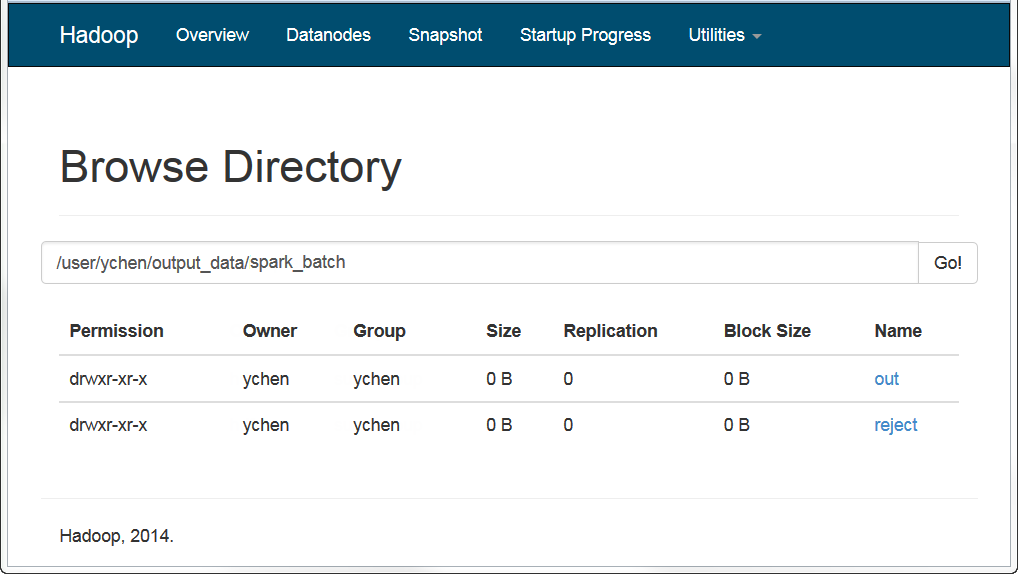

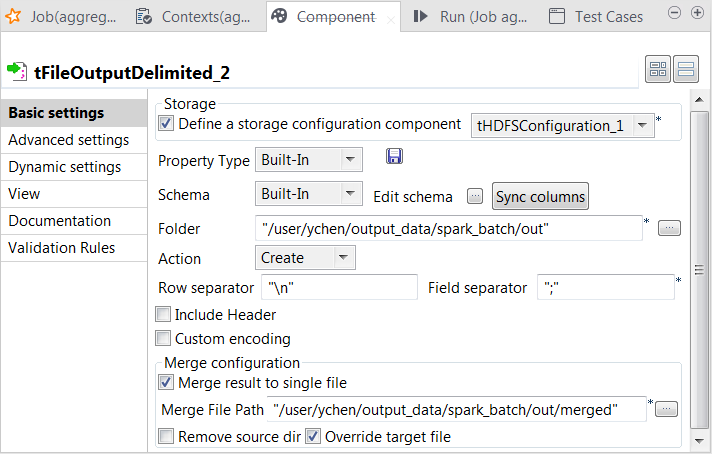

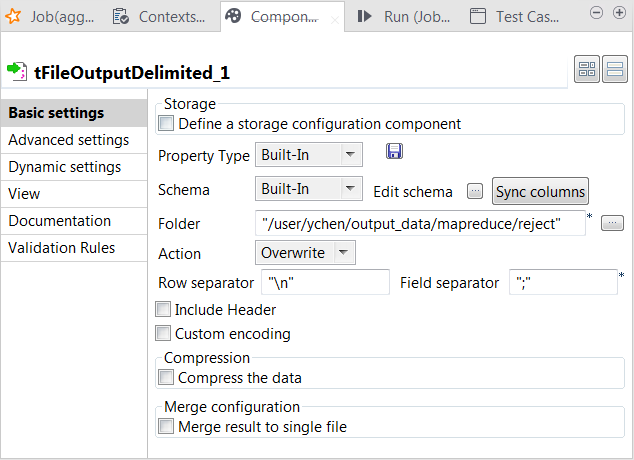

Once the Job has run, you can check, for example in the web console of your HDFS system, that the output has been written to HDFS.