OPENCONNECTOR: Secure copy (SCP/SSH) protocol

Use Case: A simple shell script can be invoked through the OPENCONNECTOR inbound protocol to Secure Copy (SCP) flat files into the HDFS LoadingDock for ingest. SCP is a protocol based on Secure Shell (SSH) that securely transfers files between a local host and a remote host, or between two remote hosts. OPENCONNECTOR lets users manage script arguments and metadata as properties in the application, so that environment setup and data loading execute like any other source.

entity.custom.script.args – core property

/root/custom/podium/put_file_hdfs.sh %prop.sfile %prop.starget %loadingDockLocation – core property value

/root/custom/podium/put_file_hdfs.sh – script to run

%prop.sfile – First argument the script takes: '%prop' tells the application to substitute the value of the property 'sfile', which specifies the input path.

%prop.starget – Second argument the script takes: '%prop' tells the application to substitute the value of the property 'starget', which specifies the target path.

%loadingDockLocation – Required argument that every OPENCONNECTOR script must take; the application generates this path value automatically. The script is expected to copy the data into this location (the loadingDock).
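The mapping from placeholders to positional parameters can be sketched as follows. This is a minimal illustration, assuming the application resolves each placeholder and passes the results to the script in the order shown in the property value; the function name `show_args` is hypothetical and not part of the product. Note that the example script in this section only uses the first two arguments.

```shell
#!/bin/sh
# Hypothetical helper: report how the three resolved placeholders
# arrive in the script as positional parameters.
show_args() {
    # Expect exactly three arguments: sfile, starget, loadingDockLocation.
    if [ "$#" -ne 3 ]; then
        echo "usage: show_args <sfile> <starget> <loadingDockLocation>" >&2
        return 2
    fi
    # $1 <- %prop.sfile, $2 <- %prop.starget, $3 <- %loadingDockLocation
    printf 'sfile=%s\nstarget=%s\nloadingDock=%s\n' "$1" "$2" "$3"
}
```

Calling `show_args /data2/in.csv /staging/out.csv /some/loadingdock/path` prints one line per argument, which can help confirm that the properties resolve as intended before the real script is wired in.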

The script in this example:

cat put_file_hdfs.sh

ssh root@ludwig.podiumdata.com hadoop fs -rm -skipTrash $2

ssh root@ludwig.podiumdata.com hadoop fs -copyFromLocal $1 $2

where $1 and $2 are positional arguments;

'-rm -skipTrash $2' deletes any file located at $2 (URI) immediately, bypassing the trash;

'-copyFromLocal $1 $2' works like the 'put' command except that the source is restricted to a local file reference: the first argument ($1=<localsrc>) is copied to the specified resource identifier (the second argument, $2=<target URI>). Because the command runs over ssh, 'local' here means local to the remote host.

[Usage: #hdfs dfs -copyFromLocal <localsrc> <target URI>]
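A slightly more defensive variant of the script can be sketched as below. This is not the shipped script, only an illustration under stated assumptions: `DRY_RUN` and the function names `run_remote` and `put_file_hdfs` are hypothetical, `REMOTE` defaults to the host used in the example above, and the `-f` flag on `-rm` is an addition so that the first run does not fail when the target does not exist yet.

```shell
#!/bin/sh
# Sketch of a more defensive put_file_hdfs.sh.
# REMOTE matches the host in the example; DRY_RUN is a hypothetical switch.
REMOTE="${REMOTE:-root@ludwig.podiumdata.com}"

# Run a command on the remote host, or only print it when DRY_RUN=1.
run_remote() {
    if [ "${DRY_RUN:-0}" = "1" ]; then
        printf '%s\n' "ssh $REMOTE $*"
    else
        # ssh joins the arguments and the remote shell re-parses them,
        # so a glob in the source path is expanded on the remote host.
        ssh "$REMOTE" "$@"
    fi
}

put_file_hdfs() {
    if [ "$#" -lt 2 ]; then
        echo "usage: put_file_hdfs <localsrc> <target URI> [loadingDockLocation]" >&2
        return 2
    fi
    # Remove any existing target, bypassing the trash; -f suppresses the
    # error when the target is not there yet.
    run_remote hadoop fs -rm -f -skipTrash "$2" || return 1
    # Copy the file (local to the remote host) to the target URI.
    run_remote hadoop fs -copyFromLocal "$1" "$2"
}
```

Setting `DRY_RUN=1` before calling `put_file_hdfs <localsrc> <target URI>` prints the two ssh commands instead of running them, which is a low-risk way to review what would be executed.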

Step 1. Create new Source

Do the following:

- From the Source module, open the Add data wizard

- On the first screen, select Add New Data To new Source.

- Create a connection for OPENCONNECTOR if one does not exist

[Connection Type: FILE, Protocol: OPENCONNECTOR, Connection String: “File:/”]

- Fill in the following fields:

- Source Connection: Add the Open Connector Connection just created

- Add New Source Name: User-defined

- Default Entity Level: Define the level of data management; the default level is MANAGED (See Administration: System Settings: Level Control)

- Source Hierarchy: User-defined

- Inbound Protocol: Pre-defined inbound protocol auto-populates for the selected source connection

- Base Directory: Configure a directory or accept the default (this is the directory where data will be stored in the file system)

- Groups: Select Group(s) requiring access from the dropdown options. At least one group must be added for the data to be discoverable.

Creating a new source

Step 2. Create a new Entity

Do the following:

- Fill in the following fields:

- Entity Name: User-defined

- Entity Custom Script: A script automatically populates; it can be edited to something like:

- /usr/local/custom/put_file_hdfs.sh %prop.sfile %prop.starget %loadingDockLocation

- Data Format Specification: Users can only select NONE or FDL. This example uses FDL.

- example: Choose File: ConsumerComplaints.gz.txt

- Groups: Associate at least one group with the entity

- Select Generate Record Layout

Creating a new entity

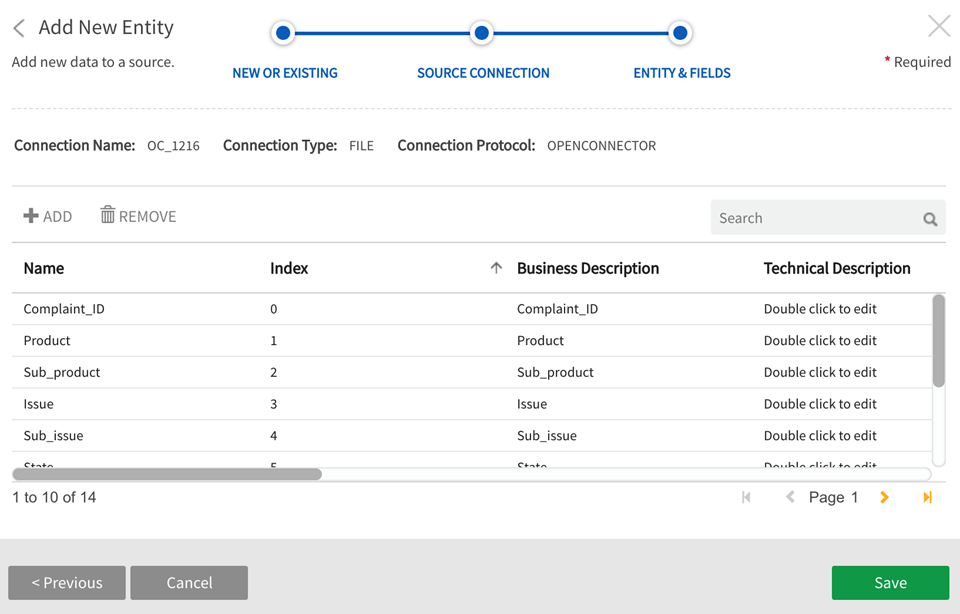

Step 3. Generate record layout, review file layout and field definition.

Do the following:

- Select Record Layout Preview. Layouts include field Name, Index, Business Description, and Technical Description.

- Make layout edits as required.

- Save the entity.

Generated record layout

Step 4. Modify properties adding “sfile” and “starget” property values

Before loading the data, locate the entity in source.

Add/modify the following properties:

- (add+) sfile:<path/to/sourcefile>

- (change) src.file.glob value to 'script'

- (add+) starget:<path/to/targetfile>

The 'sfile' and 'starget' properties can be created as entity properties. See Creating a property through API call: PUT /propDef/v1/save for details on creating these properties. See Sample Payloads 3 and 4 (OpenConnector properties).

Edit the new entity properties. Access properties by selecting the view details icon or View/Edit Properties through the More dropdown.

Input and target path examples:

sfile: /data2/consumer_complaints/*orig*

starget: /staging/Consumer_Complaints.csv.orig
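Because the example 'sfile' value is a glob pattern while 'starget' is a single file path, it can be useful to check how many files the pattern matches before loading. The helper below is a hypothetical pre-check, not part of the product; it assumes it is run on the host where the pattern will be expanded and that the pattern contains no spaces.

```shell
#!/bin/sh
# Hypothetical pre-check: count the files a glob pattern matches on this host.
count_matches() {
    count=0
    # $1 is left unquoted on purpose so the shell expands the glob.
    for f in $1; do
        # An unmatched pattern stays literal, so confirm the path exists.
        if [ -e "$f" ]; then
            count=$((count + 1))
        fi
    done
    echo "$count"
}
```

For example, `count_matches '/data2/consumer_complaints/*orig*'` prints the number of matching files. A result other than 1 suggests reviewing the pattern, since copying several sources to a single file target is likely to fail.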

Add values for input and target paths

Step 5. Load data on the entity

After saving ‘sfile’ and ‘starget’ property values, data can be loaded on the entity.

Loading data on the entity