New features

Application integration

| Feature | Description |

|---|---|

|

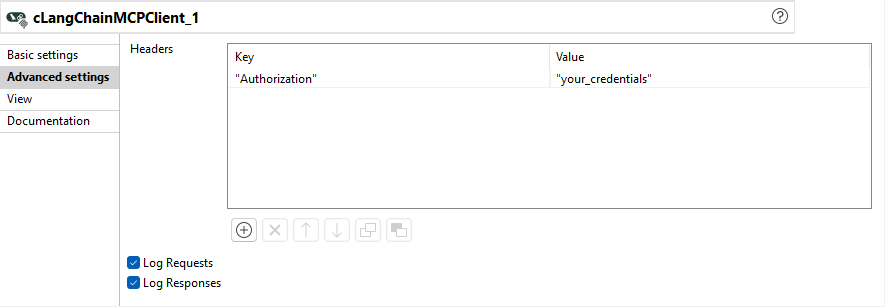

Support for Streamable HTTP transport and authentication to MCP servers in cLangChainMCPClient |

Streamable HTTP transport type is now supported in the cLangChainMCPClient

component. You can now specify your authentication information for MCP requests by adding

HTTP headers in the Headers table in the

Advanced settings view of cLangChainMCPClient. |

|

Support for OpenAI GPT 5 in cLangChainConnection |

OpenAI GPT 5 model is now supported in the cLangChainConnection component. |

| Improvements of cSQL | The cSQL component is improved with:

|
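
To illustrate what header-based authentication over the Streamable HTTP transport looks like on the wire, here is a minimal sketch in Python. It is not how cLangChainMCPClient is implemented; the endpoint URL, the token value, and the `build_mcp_request` helper are hypothetical. The sketch only builds the request object and does not send it.

```python
import json
import urllib.request


def build_mcp_request(endpoint, extra_headers, method="tools/list", request_id=1):
    """Build (but do not send) a JSON-RPC POST for an MCP endpoint.

    Hypothetical helper: extra_headers plays the role of the rows you would
    add to a headers table, for example an Authorization header.
    """
    body = json.dumps(
        {"jsonrpc": "2.0", "id": request_id, "method": method}
    ).encode("utf-8")
    headers = {
        "Content-Type": "application/json",
        # Streamable HTTP clients accept either a JSON reply or an SSE stream.
        "Accept": "application/json, text/event-stream",
    }
    headers.update(extra_headers)  # user-supplied headers take precedence
    return urllib.request.Request(endpoint, data=body, headers=headers, method="POST")


# Assumed values, for illustration only.
request = build_mcp_request(
    "https://mcp.example.com/mcp",
    {"Authorization": "Bearer <your-token>"},
)
```

A real MCP session starts with an `initialize` exchange before calls such as `tools/list`; that handshake is omitted here to keep the focus on the headers.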

Big Data

| Feature | Description |

|---|---|

| Support for OAuth token federation authentication for Databricks | M2M and U2M authentications are now unified into OAuth2 authentication.

|

| Support for CDP Public Cloud 7.3 in Standard Jobs in HDFS components (Knox) | Apache Knox authentication is now available in

WebHDFS connections for compatible HDFS components. When

Knox is enabled, additional gateway configuration fields appear and standard

authentication options are hidden where applicable.

|

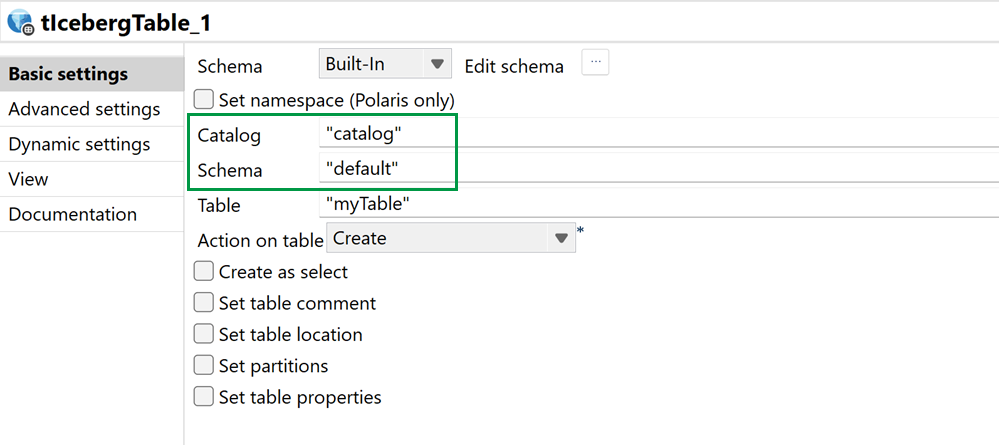

| Support for Iceberg managed tables with Unity Catalog | You can now use Unity Catalog to work with managed tables in Iceberg components.

|

Continuous Integration

| Feature | Description |
|---|---|
| Upgrade of Talend CI Builder to version 8.0.27 | Talend CI Builder is upgraded from version 8.0.26 to version 8.0.27. Use Talend CI Builder 8.0.27 in your CI commands or pipeline scripts from this monthly version onwards until a new version of Talend CI Builder is released. |

Data Integration

| Feature | Description |
|---|---|
| New Data Stewardship components to connect to Qlik Talend Cloud and use sprints in Standard components | The new tDSInput, tDSOutput, and tDSDelete components are now available and allow you to connect to Qlik Cloud Data Stewardship and use Qlik sprints. |
| New tBedrockClient component to support Amazon Bedrock capabilities in Standard components | The new tBedrockClient component allows integration with Amazon Bedrock models. |
| Support for Workload identity federation to access Google Cloud resources for Google Storage and BigQuery components in Standard components | The new Workload Identity Federation option has been added to Google Cloud services and BigQuery components as a new credential provider to access Google Cloud resources. |
| Support for multiple Qlik environments for tQlikOutput in Standard components | Multiple Qlik environments are now supported in Qlik Cloud. Environments are displayed next to the Space field in the tQlikOutput component as `<space_name> (<environment_name>)`, making it easier to distinguish spaces with identical names. |
| New pagination techniques for tHTTPClient in Standard components | Two new pagination techniques, Marker and Next link, have been added to the tHTTPClient component. |
| New option to disable chunked encoding for tHTTPClient in Standard components | The new Disable chunked encoding option has been added to the tHTTPClient component to disable Transfer-Encoding: chunked for outgoing HTTP requests with a body. |
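
As a generic illustration of the two pagination styles named above, the sketch below shows how marker-based and next-link-based pagination typically work. It does not reflect tHTTPClient internals; the field names `items`, `next_marker`, and `next`, the in-memory pages, and both helper functions are assumptions made for this example.

```python
from typing import Callable, Iterator, Optional


def paginate_by_marker(fetch: Callable[[Optional[str]], dict]) -> Iterator[object]:
    """Marker style: each response carries a token to send with the next request."""
    marker = None
    while True:
        page = fetch(marker)
        yield from page["items"]
        marker = page.get("next_marker")
        if not marker:  # no marker means this was the last page
            break


def paginate_by_next_link(fetch: Callable[[str], dict], first_url: str) -> Iterator[object]:
    """Next-link style: each response carries the full URL of the next page."""
    url = first_url
    while url:
        page = fetch(url)
        yield from page["items"]
        url = page.get("next")  # missing or empty link ends the loop


# In-memory stand-ins for an HTTP API (hypothetical data).
MARKER_PAGES = {
    None: {"items": [1, 2], "next_marker": "m1"},
    "m1": {"items": [3], "next_marker": None},
}
LINK_PAGES = {
    "/items?page=1": {"items": ["a"], "next": "/items?page=2"},
    "/items?page=2": {"items": ["b", "c"]},
}

marker_items = list(paginate_by_marker(MARKER_PAGES.get))
link_items = list(paginate_by_next_link(LINK_PAGES.__getitem__, "/items?page=1"))
```

In both styles the client keeps requesting pages until the response stops advertising a continuation; the difference is whether the continuation is an opaque token or a ready-made URL.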

Data Mapper

| Feature | Description |

|---|---|

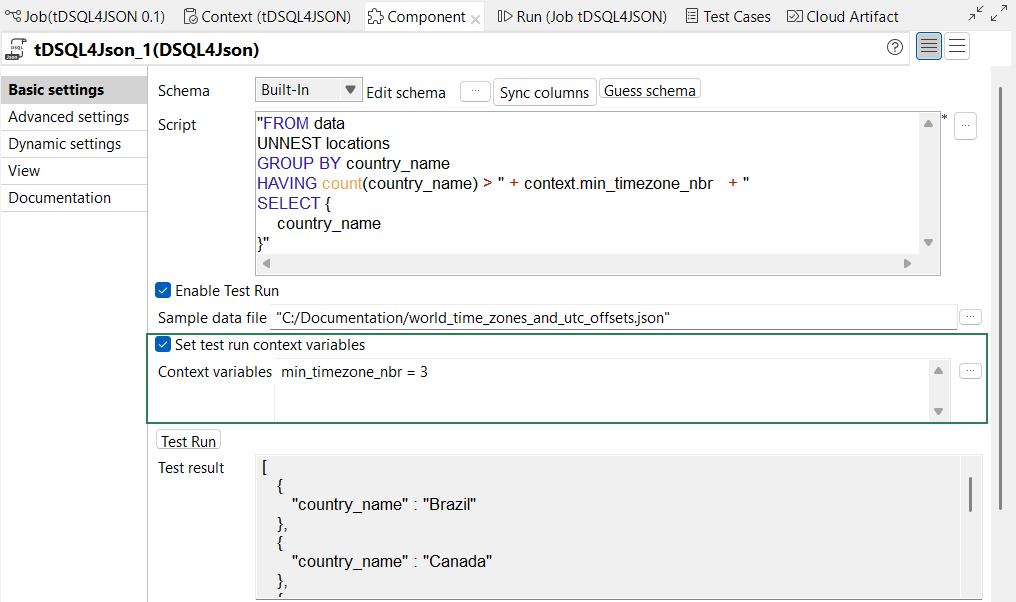

| Support for test run context variables in tDSQL4JSON | A new property, Set test run context variables, is

now available in the Basic settings view of tDSQL4JSON,

allowing you to define and use context variable values in your script for

Test Run executions.

|

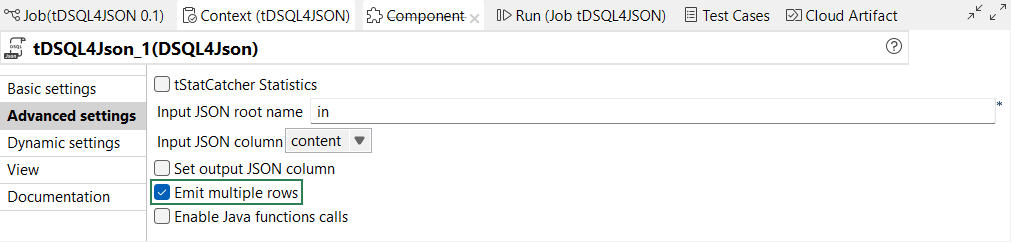

| Support for multiple output records in tDSQL4JSON | A new property, Emit multiple rows, is now available

in the Advanced settings view of tDSQL4JSON, allowing array

outputs to be split into separate rows during Job run.

|

| Support for multiple sources in cMap | You can now configure multiple sources in cMap, allowing a standard map to use inputs from one or more ReadURLs with sources from the Route exchange or file paths. |
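
The effect of splitting an array output into separate rows, as described for the Emit multiple rows property, can be pictured with the following sketch. It is only an illustration of the idea, not the tDSQL4JSON implementation; the `emit_rows` function and its flag are hypothetical.

```python
import json


def emit_rows(result_json: str, emit_multiple_rows: bool) -> list:
    """Return the output rows for a JSON result.

    With the flag on, a top-level array yields one row per element;
    otherwise the whole result is emitted as a single row.
    """
    value = json.loads(result_json)
    if emit_multiple_rows and isinstance(value, list):
        return [json.dumps(element) for element in value]
    return [json.dumps(value)]


result = '[{"id": 1}, {"id": 2}]'
single_row = emit_rows(result, emit_multiple_rows=False)
split_rows = emit_rows(result, emit_multiple_rows=True)
```

Here `single_row` holds one row containing the whole array, while `split_rows` holds one row per array element.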

Data Quality

| Feature | Description |
|---|---|
| Support for Snowflake to profile data in all analyses | You can now profile data in all types of analysis using a connection to Snowflake. |
| Support for semantic types in tDQRules | You can now use semantic types to validate your data in tDQRules. |