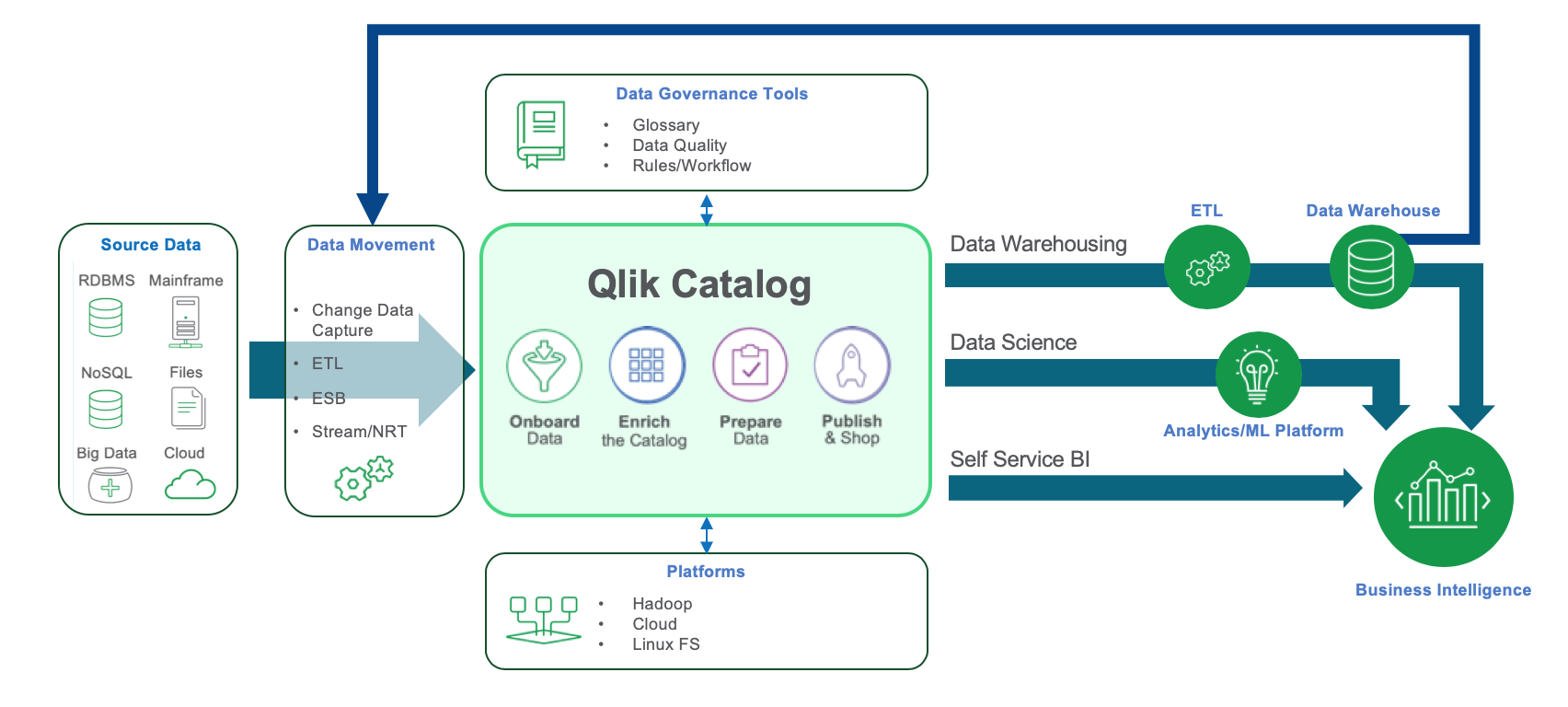

Architecture and data flow

Qlik Catalog empowers enterprises and users by instilling confidence in data. It does this by combining automated data ingestion, validation, and profiling with enterprise-level transformation capabilities, all from a centralized interface. Qlik Catalog maintains visibility throughout the data lifecycle, for example by tracking detailed ingest statistics and, through lineage, custom-built data transformations. The application has comprehensive and sophisticated data ingestion capabilities that support a variety of sources, including legacy structures such as mainframe COBOL copybooks and file formats such as XML and JSON. Qlik Catalog stores datasets in industry-standard formats, calculates profile statistics for every field, and creates a sample of each dataset. Data is also made available for querying through standard SQL engines; depending on the underlying platform, this can be Hive, Impala, or PostgreSQL.
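The exact statistics Qlik Catalog computes and the way it selects samples are not described here. As an illustration only, the sketch below assumes a small, hypothetical set of per-field statistics (value count, empty values, distinct values, minimum and maximum value length) and uses reservoir sampling (Algorithm R), one common way to build a fixed-size random sample in a single pass over a dataset. The function names `profile_fields` and `reservoir_sample` are hypothetical.

```python
import random
from typing import Dict, Iterable, List, Optional, Sequence


def profile_fields(header: Sequence[str], rows: List[Sequence[str]]) -> Dict[str, dict]:
    """Compute a few illustrative statistics for every field.

    Assumes every row has len(header) fields, e.g. only records that passed validation.
    """
    profile: Dict[str, dict] = {}
    for i, name in enumerate(header):
        values = [row[i] for row in rows]
        non_empty = [v for v in values if v != ""]
        lengths = [len(v) for v in non_empty]
        profile[name] = {
            "count": len(values),
            "empty": len(values) - len(non_empty),
            "distinct": len(set(non_empty)),
            "min_length": min(lengths) if lengths else None,
            "max_length": max(lengths) if lengths else None,
        }
    return profile


def reservoir_sample(rows: Iterable, k: int, seed: Optional[int] = None) -> list:
    """Keep a uniform random sample of up to k rows in one pass (Algorithm R)."""
    rng = random.Random(seed)
    sample: list = []
    for n, row in enumerate(rows):
        if n < k:
            sample.append(row)  # fill the reservoir with the first k rows
        else:
            # Row n replaces a random reservoir slot with probability k / (n + 1).
            j = rng.randint(0, n)
            if j < k:
                sample[j] = row
    return sample


if __name__ == "__main__":
    header = ["id", "name"]
    rows = [["1", "a"], ["2", ""], ["3", "a"]]
    print(profile_fields(header, rows)["name"])
    # {'count': 3, 'empty': 1, 'distinct': 1, 'min_length': 1, 'max_length': 1}
    print(reservoir_sample(rows, k=2, seed=42))
```

Reservoir sampling is used here because it needs only one pass and memory proportional to the sample size, which suits datasets too large to hold in memory; a production profiler would likely compute many more statistics per field.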

The solution validates and profiles ingested records, classifying each one as good, bad, or ugly. An ugly record is one in which some of the field data is problematic: a string may contain control characters, a value may have a mismatched data type, or an integer field may contain non-numeric characters. A bad record does not conform to the specified record layout. For example, it may have the wrong number of columns or wrong or missing delimiters, or an embedded control character may have caused a record break. Qlik Catalog ingest technology ensures data validity and integrity by preventing bad and ugly records from being presented as good data.
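The following is a simplified sketch of this classification, not Qlik Catalog's implementation. It assumes a hypothetical record layout given as a list of (field name, type) pairs, plain delimiter splitting with no CSV quoting, and only two field types (`int` and `string`). A record with the wrong column count is treated as bad; missing or wrong delimiters and records split by an embedded control character typically surface this way. A record with the right shape but a problematic field value is treated as ugly. The names `Quality`, `classify_record`, and `partition` are illustrative.

```python
import re
from enum import Enum
from typing import Dict, Iterable, List, Tuple


class Quality(Enum):
    GOOD = "good"
    BAD = "bad"
    UGLY = "ugly"


# Hypothetical record layout: (field name, expected type); type is "int" or "string".
Layout = List[Tuple[str, str]]

CONTROL_CHARS = re.compile(r"[\x00-\x1f\x7f]")  # C0 control characters and DEL
INTEGER = re.compile(r"[+-]?[0-9]+")


def classify_record(line: str, layout: Layout, delimiter: str = ",") -> Quality:
    """Classify one delimited record against the expected layout."""
    fields = line.split(delimiter)
    # Bad: the record does not conform to the layout. Wrong or missing delimiters,
    # and records broken apart by an embedded control character, show up here
    # as a wrong number of columns.
    if len(fields) != len(layout):
        return Quality.BAD
    for value, (_, field_type) in zip(fields, layout):
        # Ugly: the shape is right, but the field contents are problematic.
        if CONTROL_CHARS.search(value):
            return Quality.UGLY
        # Empty values are treated as nulls here (an assumption of this sketch).
        if field_type == "int" and value and not INTEGER.fullmatch(value):
            return Quality.UGLY
    return Quality.GOOD


def partition(lines: Iterable[str], layout: Layout) -> Dict[Quality, List[str]]:
    """Route every record to a good, bad, or ugly bucket so only good ones are served as good data."""
    buckets: Dict[Quality, List[str]] = {q: [] for q in Quality}
    for line in lines:
        buckets[classify_record(line, layout)].append(line)
    return buckets


if __name__ == "__main__":
    layout = [("id", "int"), ("name", "string"), ("qty", "int")]
    result = partition(["1,Widget,5", "2,Gadget", "3,Gizmo,five"], layout)
    for quality, records in result.items():
        print(quality.value, records)
```

Keeping bad and ugly records in separate buckets, rather than dropping them, leaves them available for inspection while guaranteeing that downstream consumers of the good bucket see only records that passed both checks.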

Qlik Catalog enriches technical and business metadata and stores it in a central catalog. From this logically ordered and secure environment, enterprise data is made available to the user community through a friendly interface that mimics the shopping experience of an e-commerce site. Users can search, browse, preview, understand, and compare datasets, find the most appropriate ones in the overall marketplace collection, and place them in a shopping cart. From there, they can act on the data, for example by publishing it to their favorite analytics tool or delivering it to an external environment, on premises or in the cloud, where other applications can use it.

Qlik Catalog offers two deployment options. Both are available on premises or in the cloud.

- On an edge node of a Hadoop cluster. In this configuration, Qlik Catalog stores data in HDFS (Hadoop Distributed File System) or, if deployed in the cloud, in cloud-native storage such as AWS S3. Data is exposed for querying through the Hive or Impala query engines and processed on the cluster using standard parallel processing frameworks such as MapReduce, Tez, and Spark.

  This deployment option fully leverages the scalability of the underlying parallel infrastructure and is suitable for high data volumes and heavy user workloads.

- On a single Linux server. In this configuration, data is stored as files in the Linux file system and exposed for querying through PostgreSQL using its foreign tables feature.

  This deployment option is suitable for more modest data volumes and workloads. It can be especially attractive to organizations that do not need to handle high data volumes or that lack the expertise to manage a Hadoop environment. This option also provides the architectural foundation for the streamlined Qlik Catalog for QVDs offering, which focuses on making QVDs easier to discover, understand, and consume.
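To illustrate how a file on the Linux file system can be queried through PostgreSQL, the sketch below generates DDL for a foreign table. It assumes PostgreSQL's bundled `file_fdw` foreign data wrapper and a CSV file with a header row; which wrapper and options Qlik Catalog actually uses is not stated here. The server name `catalog_files`, the table, and the file path are hypothetical, and column types are passed through unquoted, so they must come from a trusted source.

```python
from typing import List, Tuple


def quote_ident(name: str) -> str:
    """Quote a SQL identifier, doubling any embedded double quotes."""
    return '"' + name.replace('"', '""') + '"'


def quote_literal(value: str) -> str:
    """Quote a SQL string literal, doubling any embedded single quotes."""
    return "'" + value.replace("'", "''") + "'"


def foreign_table_ddl(
    table: str,
    columns: List[Tuple[str, str]],  # (column name, trusted PostgreSQL type)
    path: str,
    server: str = "catalog_files",  # hypothetical foreign server name
    delimiter: str = ",",
) -> str:
    """Build DDL that exposes a delimited file as a foreign table via file_fdw."""
    column_list = ",\n    ".join(f"{quote_ident(n)} {t}" for n, t in columns)
    return "\n".join([
        "CREATE EXTENSION IF NOT EXISTS file_fdw;",
        f"CREATE SERVER IF NOT EXISTS {quote_ident(server)} FOREIGN DATA WRAPPER file_fdw;",
        f"CREATE FOREIGN TABLE IF NOT EXISTS {quote_ident(table)} (",
        f"    {column_list}",
        f") SERVER {quote_ident(server)}",
        f"  OPTIONS (filename {quote_literal(path)}, format 'csv', "
        f"header 'true', delimiter {quote_literal(delimiter)});",
    ])


if __name__ == "__main__":
    print(foreign_table_ddl(
        "orders",
        [("id", "integer"), ("name", "text")],
        "/data/catalog/orders.csv",  # hypothetical path on the database server
    ))
```

Because `file_fdw` reads the file on the database server at query time, the data stays in the file system rather than being loaded into PostgreSQL tables, and the path must be readable by the PostgreSQL server process.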

Architecture and workflow