Using context variables with Cloudera

In this scenario, you want to choose whether Spark Jobs run on Cloudera on-premises Runtime 7.1.7 with Spark 3.2.x or on Runtime 7.1.9 with Spark 3.3.x.

The same approach applies to a mix of Cloudera distributions, on-premises (7.1.x) and Cloud (7.2.x).

This capability relies on the Talend Studio context variable feature and on the Qlik Spark Universal 3.3.x distribution mode (the latest for Cloudera distributions).

Before you begin

- Check in the Cloudera documentation whether your target distributions are compatible with Spark 2, Spark 3, or both at the same time.

- From Cloudera Manager, download the client configuration for each Hadoop service used (HDFS, Hive, HBase, and so on). For more information, see Downloading Client Configuration Files in the Cloudera documentation.

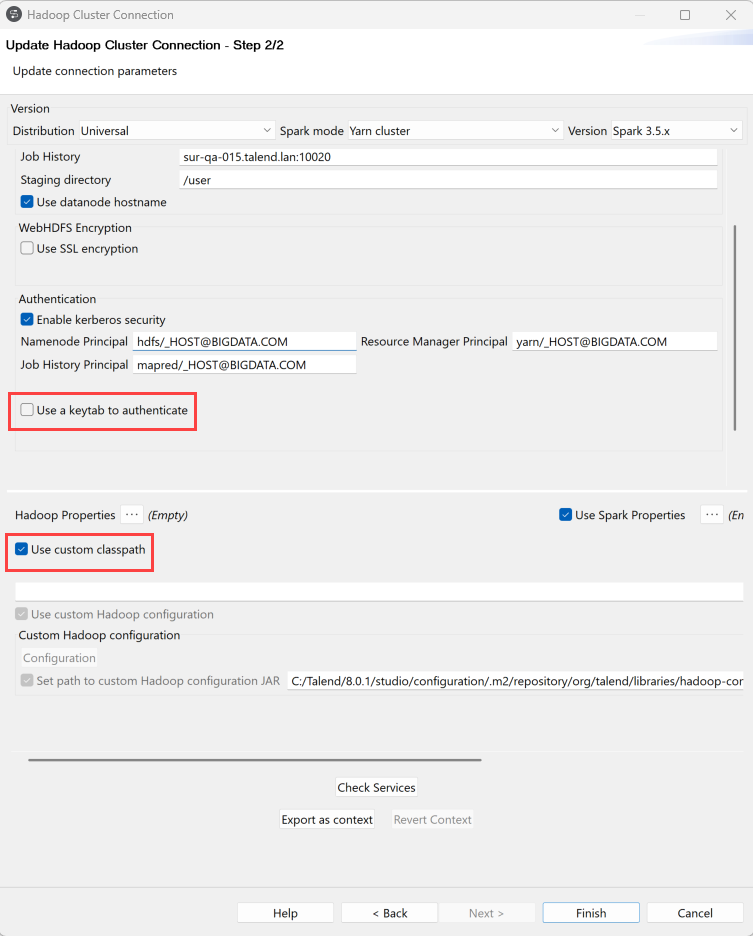
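Once the client configuration archives are downloaded, it can help to confirm which cluster each one points to before importing it. The following is a minimal sketch, not part of Talend Studio or Cloudera Manager: it assumes the standard Hadoop `*-site.xml` layout (`<property>` elements with `<name>` and `<value>` children) and reads the entries into a map. The class name `ClientConfigReader` and its methods are hypothetical.

```java
import java.io.StringReader;
import java.util.LinkedHashMap;
import java.util.Map;
import javax.xml.parsers.DocumentBuilderFactory;
import org.w3c.dom.Document;
import org.w3c.dom.Element;
import org.w3c.dom.NodeList;
import org.xml.sax.InputSource;

// Hypothetical helper: not provided by Talend Studio or Cloudera.
public class ClientConfigReader {

    // Parses the text of a Hadoop-style *-site.xml file into name/value pairs,
    // keeping the order in which the properties appear in the file.
    public static Map<String, String> readProperties(String xml) {
        try {
            Document doc = DocumentBuilderFactory.newInstance()
                    .newDocumentBuilder()
                    .parse(new InputSource(new StringReader(xml)));
            Map<String, String> props = new LinkedHashMap<>();
            NodeList nodes = doc.getElementsByTagName("property");
            for (int i = 0; i < nodes.getLength(); i++) {
                Element property = (Element) nodes.item(i);
                String name = childText(property, "name");
                if (name != null) {
                    props.put(name, childText(property, "value"));
                }
            }
            return props;
        } catch (Exception e) {
            throw new IllegalArgumentException("Not a valid client configuration file", e);
        }
    }

    // Returns the trimmed text of the first child element with the given tag, or null.
    private static String childText(Element parent, String tag) {
        NodeList children = parent.getElementsByTagName(tag);
        return children.getLength() == 0 ? null : children.item(0).getTextContent().trim();
    }
}
```

For example, passing the content of a downloaded `core-site.xml` and looking up `fs.defaultFS` in the returned map shows the HDFS address that the configuration targets, which makes it easier to tell the 7.1.7 and 7.1.9 archives apart.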

Creating a Metadata Connection to a Hadoop cluster

Procedure

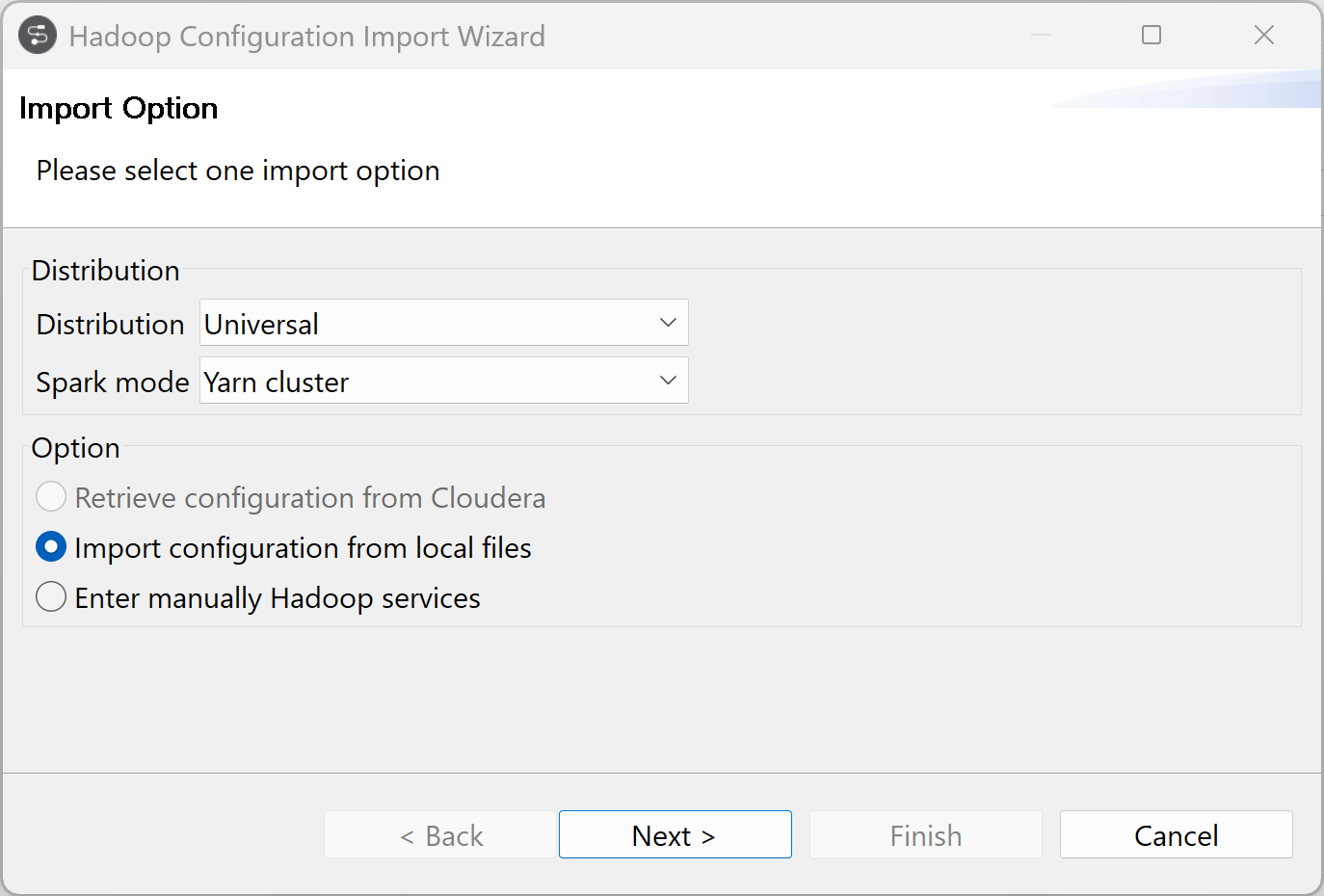

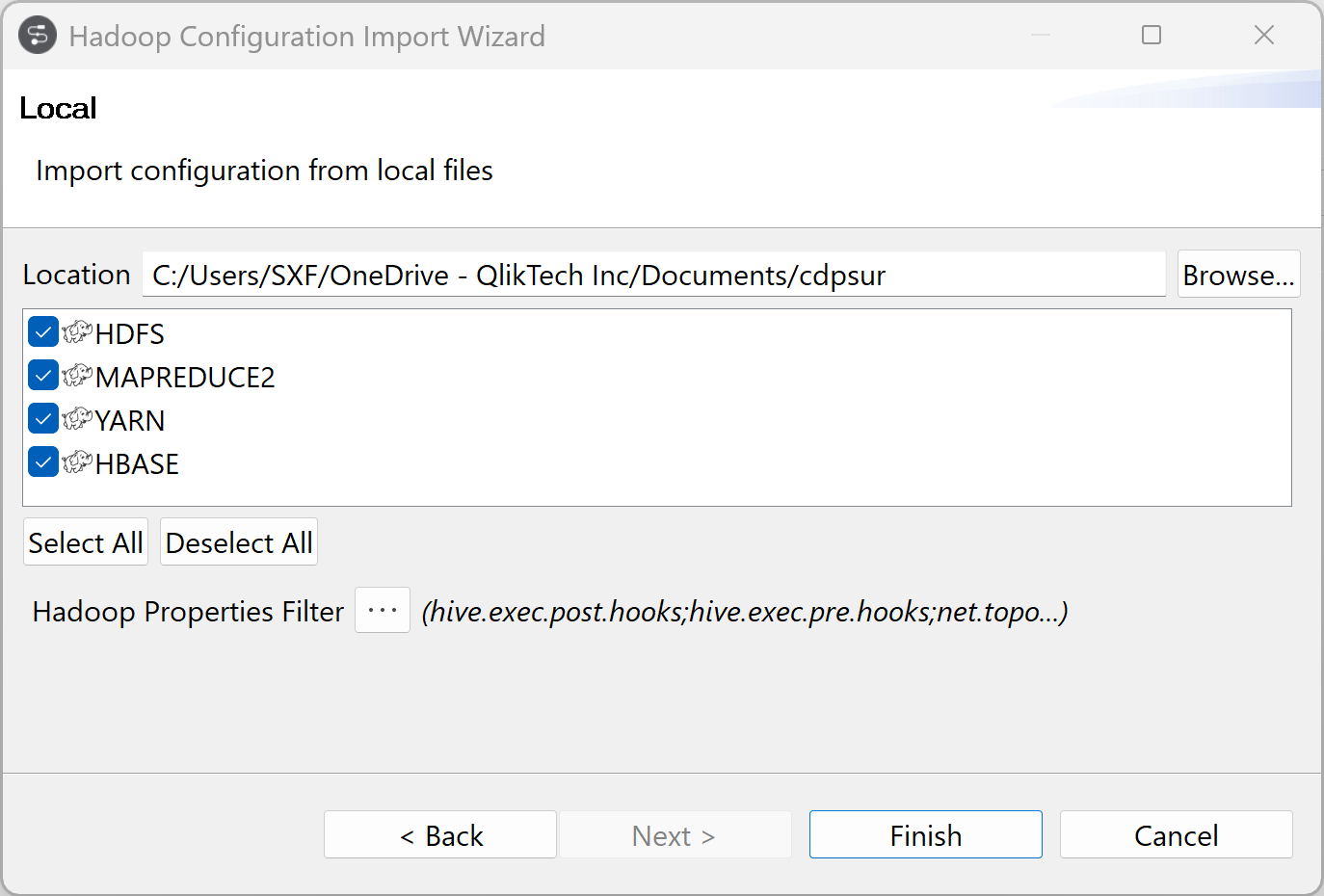

Importing Hadoop configuration

Procedure

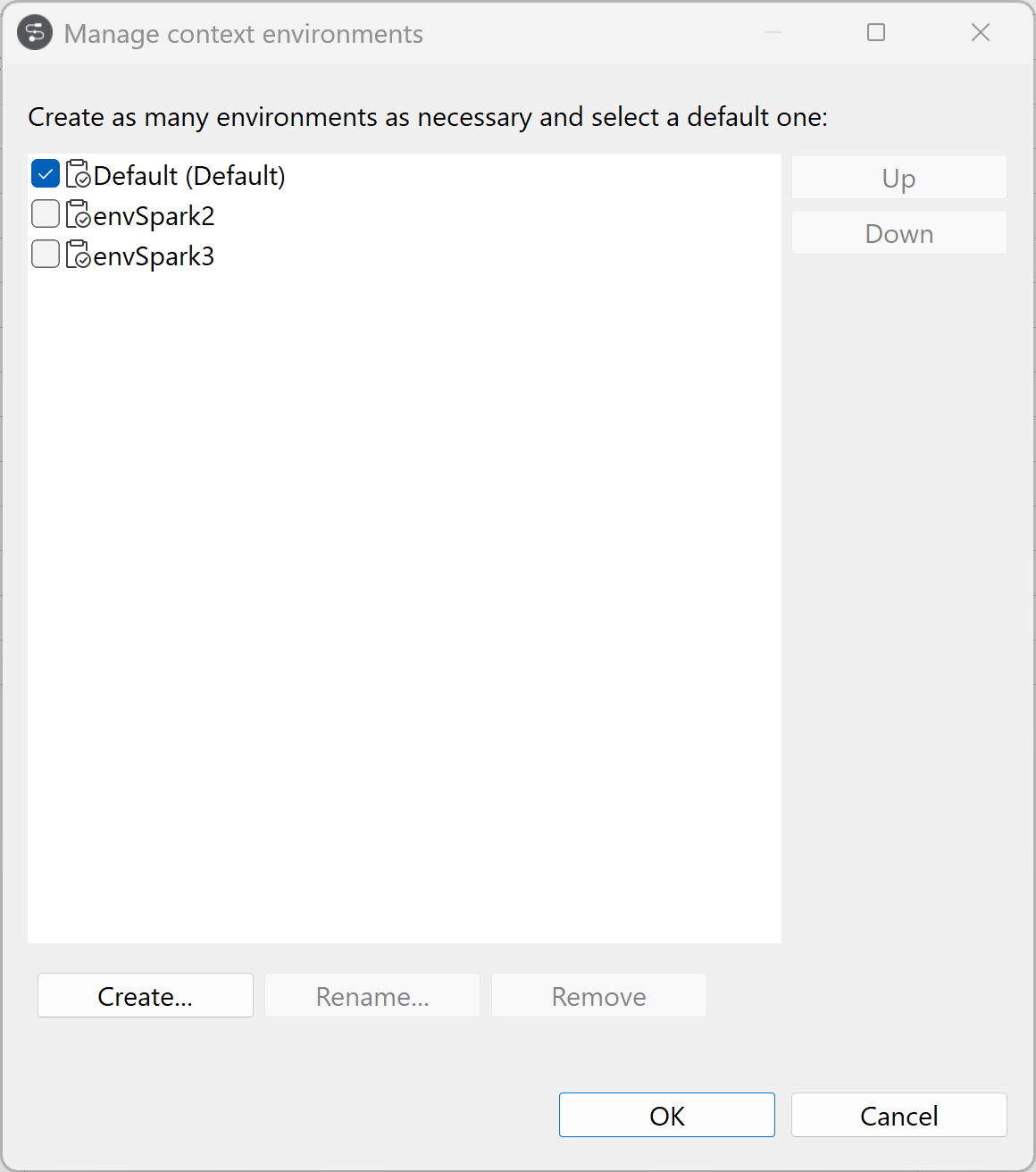

Contextualizing the Metadata connection

You can use a single cluster with different parameters thanks to context values.
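The idea can be illustrated outside Talend Studio with a minimal Java sketch: one connection definition whose parameters are resolved from the selected context. The Runtime and Spark versions come from the scenario above; the context names (`cdp_717`, `cdp_719`), the configuration directory paths, and the `ClusterContexts` class are assumptions made for illustration only and do not reflect how Talend Studio stores contexts internally.

```java
import java.util.LinkedHashMap;
import java.util.Map;

// Hypothetical model of context values: each context name maps to one set of cluster parameters.
public class ClusterContexts {

    // Parameters that vary from one context to another.
    public static final class ClusterParams {
        public final String runtime;       // Cloudera Runtime version
        public final String sparkVersion;  // Spark version shipped with that Runtime
        public final String confDir;       // assumed location of the imported client configuration

        ClusterParams(String runtime, String sparkVersion, String confDir) {
            this.runtime = runtime;
            this.sparkVersion = sparkVersion;
            this.confDir = confDir;
        }
    }

    private static final Map<String, ClusterParams> CONTEXTS = new LinkedHashMap<>();
    static {
        // Context names and paths are placeholders, not values from the product.
        CONTEXTS.put("cdp_717", new ClusterParams("7.1.7", "3.2.x", "/opt/conf/cdp_717"));
        CONTEXTS.put("cdp_719", new ClusterParams("7.1.9", "3.3.x", "/opt/conf/cdp_719"));
    }

    // Returns the parameters for the selected context, or fails fast on an unknown name.
    public static ClusterParams resolve(String contextName) {
        ClusterParams params = CONTEXTS.get(contextName);
        if (params == null) {
            throw new IllegalArgumentException("Unknown context: " + contextName);
        }
        return params;
    }
}
```

Calling `ClusterContexts.resolve("cdp_719")` returns the 7.1.9 parameters while the calling code stays unchanged, which is the same separation a contextualized Metadata connection aims for: the connection is defined once and only the context values differ.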