Uploading files to DBFS (Databricks File System)

Uploading a file to DBFS makes it available for your Big Data Jobs to read and process. DBFS is the Big Data file system used in this example.

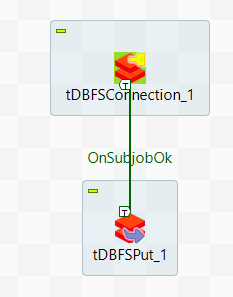

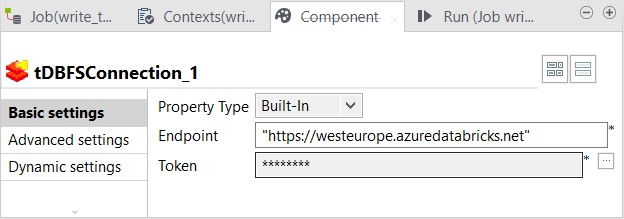

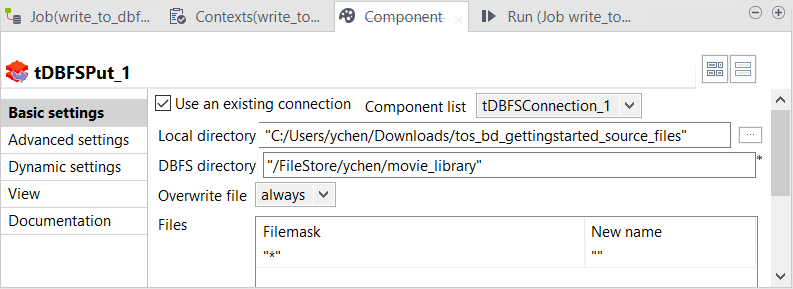

In this procedure, you will create a Job that writes data to DBFS. For the files needed for this use case, download tbd_gettingstarted_source_files.zip from the Downloads tab in the left panel of this page.
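For orientation only, the following is a minimal Python sketch of what writing a file to DBFS involves at the API level. It is not how the Job in this procedure works internally. It assumes the Databricks DBFS REST endpoint `/api/2.0/dbfs/put` and a personal access token; the function name `build_dbfs_put_request` and the host, token, and path values are hypothetical placeholders. The sketch only builds the request and does not send it.

```python
import base64
import json
import urllib.request


def build_dbfs_put_request(host, token, dbfs_path, data, overwrite=True):
    """Build (but do not send) a request for the DBFS `put` endpoint.

    host, token and dbfs_path are placeholders supplied by the caller;
    nothing here is taken from the Job configuration.
    """
    body = {
        "path": dbfs_path,
        # The endpoint expects the file contents as a base64 string.
        "contents": base64.b64encode(data).decode("ascii"),
        "overwrite": overwrite,
    }
    return urllib.request.Request(
        url=f"{host.rstrip('/')}/api/2.0/dbfs/put",
        data=json.dumps(body).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {token}",
            "Content-Type": "application/json",
        },
        method="POST",
    )
```

Passing the returned object to `urllib.request.urlopen` would send the upload. Inline `contents` uploads are limited in size (about 1 MB, to the best of our knowledge), so larger files would need the streaming endpoints or a different tool.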

Procedure

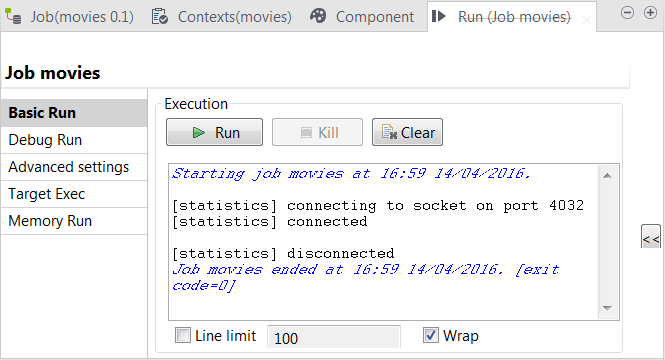

Results

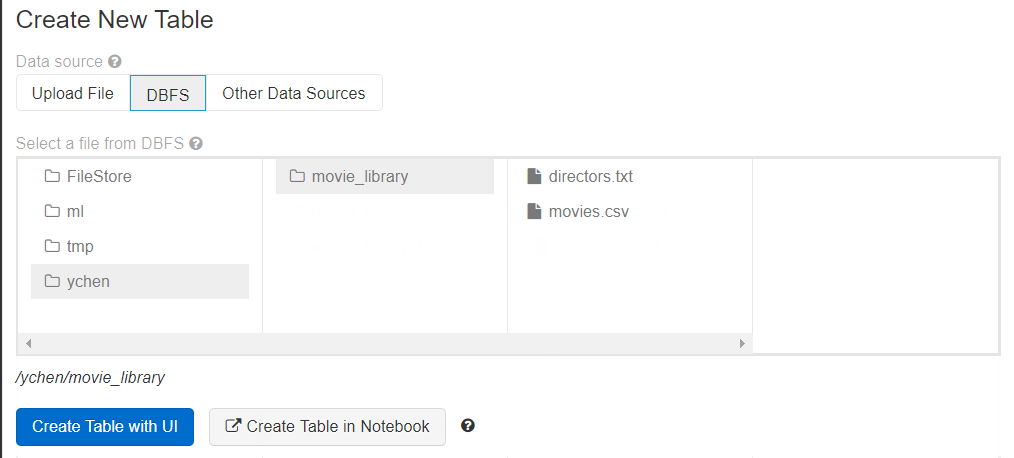

When the Job is done, the files you uploaded can be found in DBFS in the directory you have specified.
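If you prefer to check the result from a script rather than the Databricks UI, the sketch below shows one possible way to list a DBFS directory. It assumes the Databricks DBFS REST endpoint `/api/2.0/dbfs/list` and a personal access token; the function names and the host, token, and directory values are hypothetical placeholders, not values from this procedure.

```python
import json
import urllib.parse
import urllib.request


def build_dbfs_list_request(host, token, dbfs_dir):
    """Build (but do not send) a request that lists a DBFS directory."""
    query = urllib.parse.urlencode({"path": dbfs_dir})
    return urllib.request.Request(
        url=f"{host.rstrip('/')}/api/2.0/dbfs/list?{query}",
        headers={"Authorization": f"Bearer {token}"},
        method="GET",
    )


def file_names(list_response_text):
    """Return the paths found in the JSON body of a list response.

    An empty directory yields a body without a "files" key, so an
    empty list is returned in that case.
    """
    payload = json.loads(list_response_text)
    return [entry["path"] for entry in payload.get("files", [])]
```

To use it, send the request with `urllib.request.urlopen`, read and decode the response body, and pass the text to `file_names`. The files you uploaded should appear among the returned paths for the directory you specified in the Job.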