Using files instead of lists for iterating through lists of datasets or data inputs

The use case where a set of SQL queries need to be executed as part of a Talend Data Integration Job requires iterating through the list of SQL queries, either listed manually, for example using tFixedFlowInput component, or using an external template. This could break lineage as Talend Data Catalog cannot access SQL queries. It is a similar problem with a list of datasets.

The recommended approach is to use the tFileOutputXXX component for saving individual SQL statements in separate SQL script files. Then you can harvest the SQL scripts to trace lineage or do impact analysis.

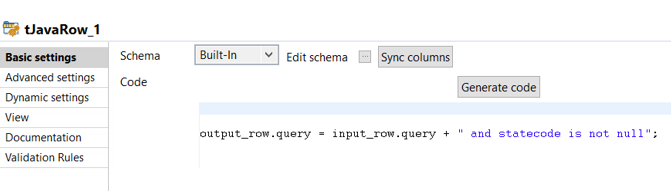

Take the example of a set of SQL update statements defined in tFixedFlowInput that are executed using tDBRow. Notice the flow of the Job in the screenshot below. There is no data input/output to this Talend Data Integration Job. It is intended to execute a set of SQL statements. For this kind of scenario, it is recommended to store the SQL statement into a .sql file, and harvest the formed SQL script for lineage.

Note that the tDBRow component is deactivated in the Job. It is not necessary to have an activated component while harvesting the Job in Talend Data Catalog, although it is needed for successful Job execution.

There are different bridges supported by Talend Data Catalog to harvest SQL scripts written for a specific database like Teradata, Microsoft SQL Server and so on. The Oracle bridge for the MySQL database is used in this example. The import settings are shown below:

Although a Talend Data Integration Job without the .sql file would execute the SQL statements (and implement a use case), it would be difficult to trace lineage for it.