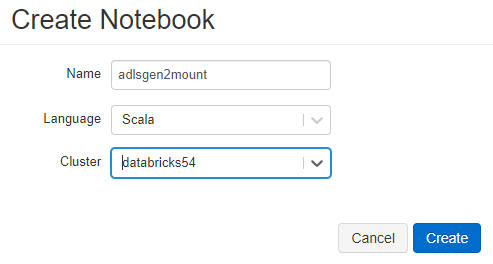

Mount the Azure Data Lake Storage Gen2 filesystem to DBFS

Before you begin

- Ensure that you have granted your application read and write permissions to your ADLS Gen2 filesystem.

Procedure

Results

If the ADLS Gen2 filesystem to be mounted contains files, run this notebook. You should then see the data stored in the file specified by <file-location>, which is read by the lines appended in the last step.
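
As a rough illustration of what such a notebook might contain, here is a minimal Python sketch. It assumes the application authenticates as an Azure AD service principal using OAuth client credentials, which may not match your setup. The helper names (`build_oauth_configs`, `abfss_source`, `mount_adls_gen2`) are hypothetical, and every placeholder other than `<file-location>` is an assumption to replace with your own values. Only `dbutils.fs.mounts`, `dbutils.fs.mount`, `dbutils.fs.head`, and `dbutils.secrets.get` are Databricks utilities.

```python
from typing import Any, Dict


def build_oauth_configs(application_id: str, client_secret: str, directory_id: str) -> Dict[str, str]:
    """Build the Hadoop ABFS settings for OAuth client-credentials access (assumed auth method)."""
    return {
        "fs.azure.account.auth.type": "OAuth",
        "fs.azure.account.oauth.provider.type":
            "org.apache.hadoop.fs.azurebfs.oauth2.ClientCredsTokenProvider",
        "fs.azure.account.oauth2.client.id": application_id,
        "fs.azure.account.oauth2.client.secret": client_secret,
        "fs.azure.account.oauth2.client.endpoint":
            f"https://login.microsoftonline.com/{directory_id}/oauth2/token",
    }


def abfss_source(file_system: str, storage_account: str) -> str:
    """Return the abfss:// URI of the root of an ADLS Gen2 filesystem."""
    return f"abfss://{file_system}@{storage_account}.dfs.core.windows.net/"


def mount_adls_gen2(dbutils: Any, file_system: str, storage_account: str,
                    mount_name: str, configs: Dict[str, str]) -> str:
    """Mount the filesystem under /mnt/<mount_name> unless it is already mounted."""
    mount_point = f"/mnt/{mount_name}"
    # dbutils.fs.mounts() lists existing mounts; skip mounting if ours is present.
    if any(m.mountPoint == mount_point for m in dbutils.fs.mounts()):
        return mount_point
    dbutils.fs.mount(
        source=abfss_source(file_system, storage_account),
        mount_point=mount_point,
        extra_configs=configs,
    )
    return mount_point


# In a Databricks notebook, where `dbutils` is predefined (placeholders are assumptions):
# configs = build_oauth_configs(
#     "<application-id>",
#     dbutils.secrets.get(scope="<scope-name>", key="<key-name>"),
#     "<directory-id>",
# )
# mount_point = mount_adls_gen2(
#     dbutils, "<file-system-name>", "<storage-account-name>", "<mount-name>", configs
# )
# print(dbutils.fs.head(f"{mount_point}/<file-location>"))  # preview the file contents
```

Passing `dbutils` in as a parameter keeps the helpers importable outside a notebook, and checking `dbutils.fs.mounts()` first lets the cell be rerun without failing on an existing mount point.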