Ingest from file system: Profile data

Rich and detailed profile data is captured by Qlik Catalog during ingestion—while the application summarizes this data in the UI, a lot of detail exists in the file system that can be ingested and also profiled. The following example describes the process when the data resides in the file system.

Administrators can leverage this data for reports and apply transforms like any other entity in the cluster.

Ingest of profile data from HDFS is setup using the Add Data wizard in the following example.

Step 1: Setup FILE_HDFS source connection

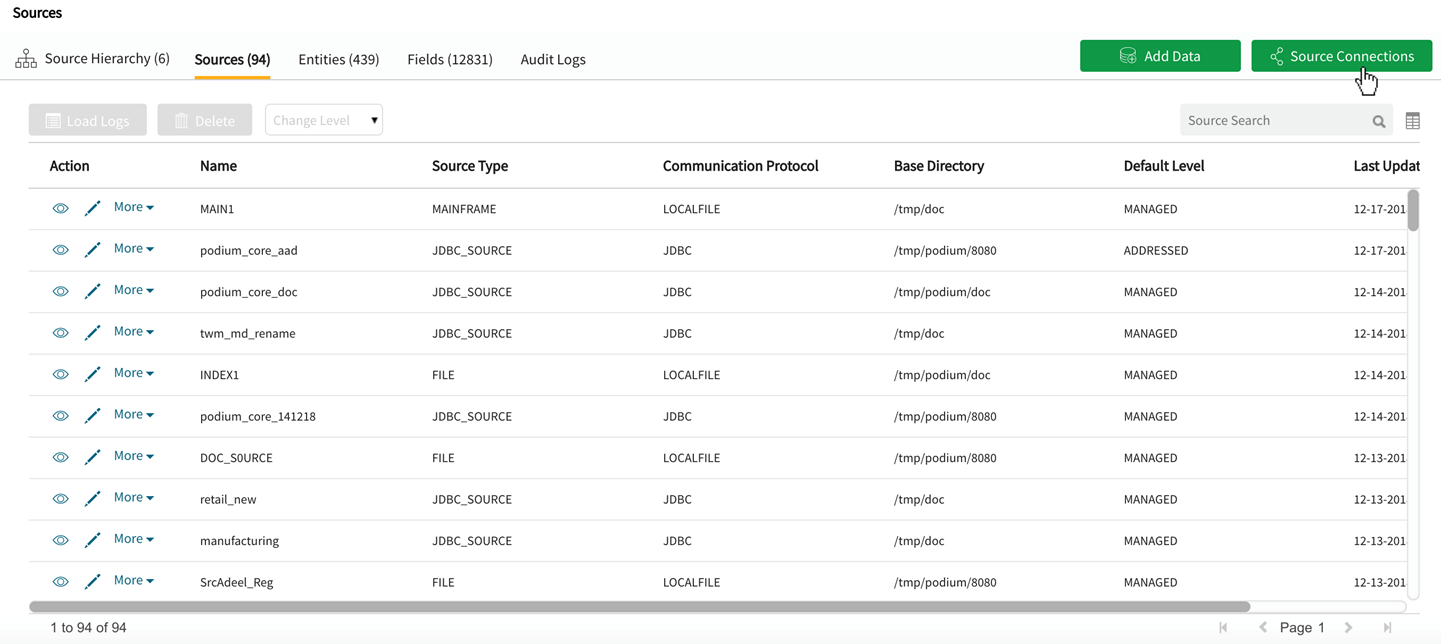

Create a new source connection by selecting Source Connections from sources grid in source module

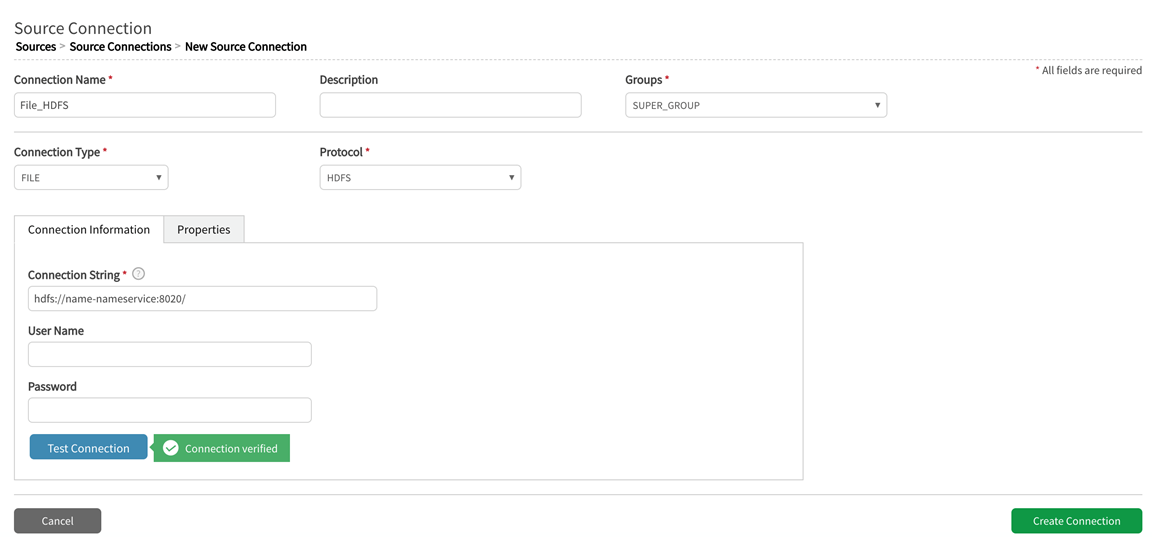

In source connection wizard specify the following fields:

Connection Name: User-defined

Description: User-defined

Connection Type: FILE

Protocol: HDFS

Connection String: Find connection URI value by selecting About link from Support dropdown menu in main navigation bar; locate the Settings tab and copy value for receiving.uri (example: hdfs://host-nameservice:8020/)

Upon creation of HDFS FILE Source Connection, initiate Add Data wizard

Step 2. Source Connection

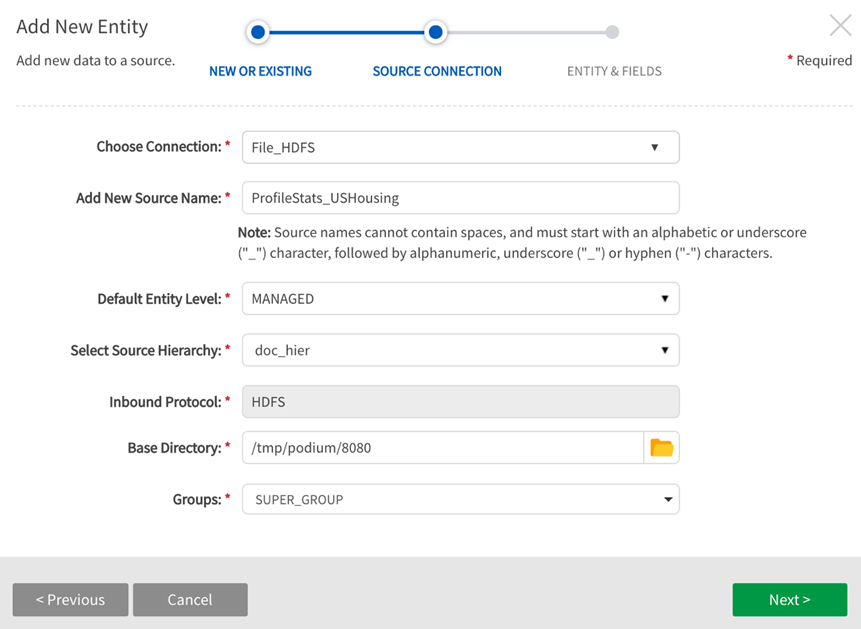

Select Add New Data: To new source

Choose Connection: Select the connection created in Step 1 where Connection Type: FILE Protocol: HDFS

Add New Source Name: Configurable. This is the source that the new entity will be added to.

Default Entity Level: Select Managed (see System Settings: Level Control)

Select Source Hierarchy: Configurable. Choose from dropdown.

Inbound Protocol: Pre-defined inbound protocol auto-populates for the selected source connection (in this case it is HDFS)

Base Directory: Configurable. (This is the directory where data will be stored in file system). Select Folder icon to browse HDFS directories. If creating a new folder, type in a new directory filepath and then select Ok, or select a pre-existing base folder.

Groups: Select group(s) requiring access from the dropdown options. At least one group must be added for the data to be discoverable.

Click Next.

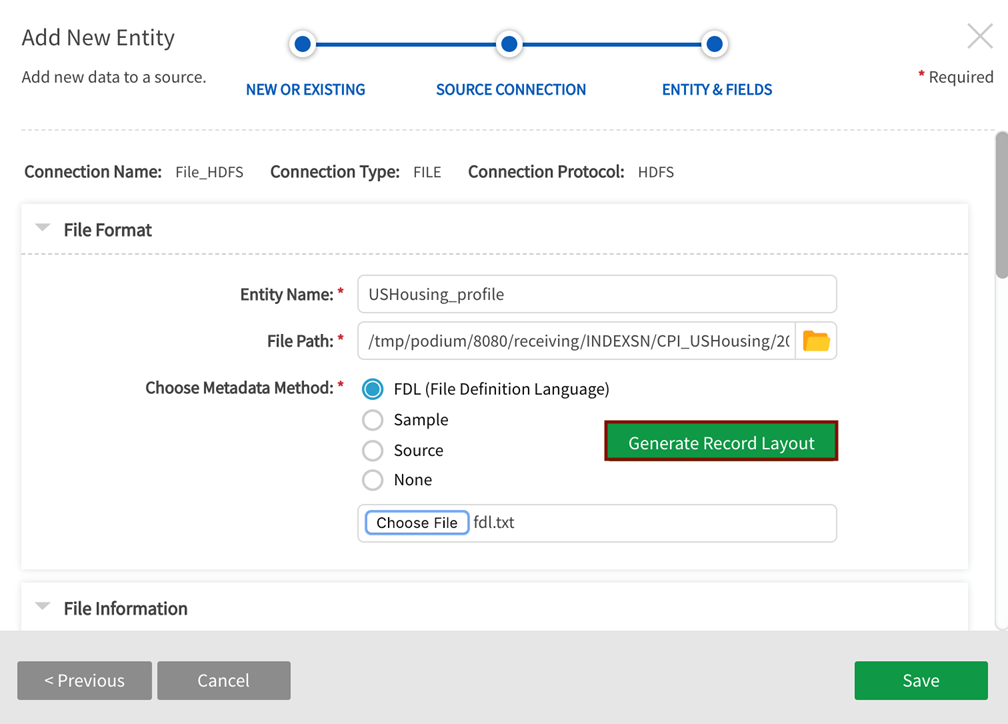

Step 3: Entity & Fields

Entity Name: Name the entity

File Path: Location of the profile data on HDFS. The file path follows the following path format: /<podiumbase_directory>/receiving/SOURCE_NAME/ENTITY_NAME/partition/profile/profile.txt

Example: HDFS discovery

[podium@nname-podium ~]$ hadoop fs -ls /tmp/podium/8080/receiving/INDEXSN/CPI_USHousing

Found 3 items

drwxr-xr-x - podium superuser 0 2018-12-08 02:44 /tmp/podium/8080/receiving/INDEXSN/CPI_USHousing/20181208024306

drwxr-xr-x - podium superuser 0 2018-12-10 06:49 /tmp/podium/8080/receiving/INDEXSN/CPI_USHousing/20181210064726

drwxr-xr-x - podium superuser 0 2018-12-10 06:53 /tmp/podium/8080/receiving/INDEXSN/CPI_USHousing/20181210065143

[podium@nname-podium ~]$ hadoop fs -ls /tmp/podium/8080/receiving/INDEXSN/CPI_USHousing/20181208024306

Found 6 items

-rw-r--r-- 3 podium superuser 0 2018-12-08 02:43 /tmp/podium/8080/receiving/INDEXSN/CPI_USHousing/20181208024306/_SUCCESS

drwxr-xr-x - podium superuser 0 2018-12-08 02:43 /tmp/podium/8080/receiving/INDEXSN/CPI_USHousing/20181208024306/good

drwxr-xr-x - podium superuser 0 2018-12-08 02:43 /tmp/podium/8080/receiving/INDEXSN/CPI_USHousing/20181208024306/log

drwxr-xr-x - podium superuser 0 2018-12-08 02:44 /tmp/podium/8080/receiving/INDEXSN/CPI_USHousing/20181208024306/profile

drwxr-xr-x - podium superuser 0 2018-12-08 02:43 /tmp/podium/8080/receiving/INDEXSN/CPI_USHousing/20181208024306/sample

drwxr-xr-x - podium superuser 0 2018-12-08 02:43 /tmp/podium/8080/receiving/INDEXSN/CPI_USHousing/20181208024306/splitprofile

[podium@name-podium ~]$ hadoop fs -ls /tmp/podium/8080/receiving/INDEXSN/CPI_USHousing/20181208024306/profile

Found 2 items

-rw-r--r-- 3 podium superuser 0 2018-12-08 02:44 /tmp/podium/8080/receiving/INDEXSN/CPI_USHousing/20181208024306/profile/_SUCCESS

-rw-r--r-- 3 podium superuser 32876 2018-12-08 02:44 /tmp/podium/8080/receiving/INDEXSN/CPI_USHousing/20181208024306/profile/profile.txt

Data Format Specification: Select radio button for Source to extract metadata from the entity specified in Entity File Path or use the FDL text located at the end of this topic (copy and paste into a .txt file) and chose FDL Metadata Method.

Once Metadata Method is specified, select Generate Record Layout

Generate Record Layout with FDL Metadata Method

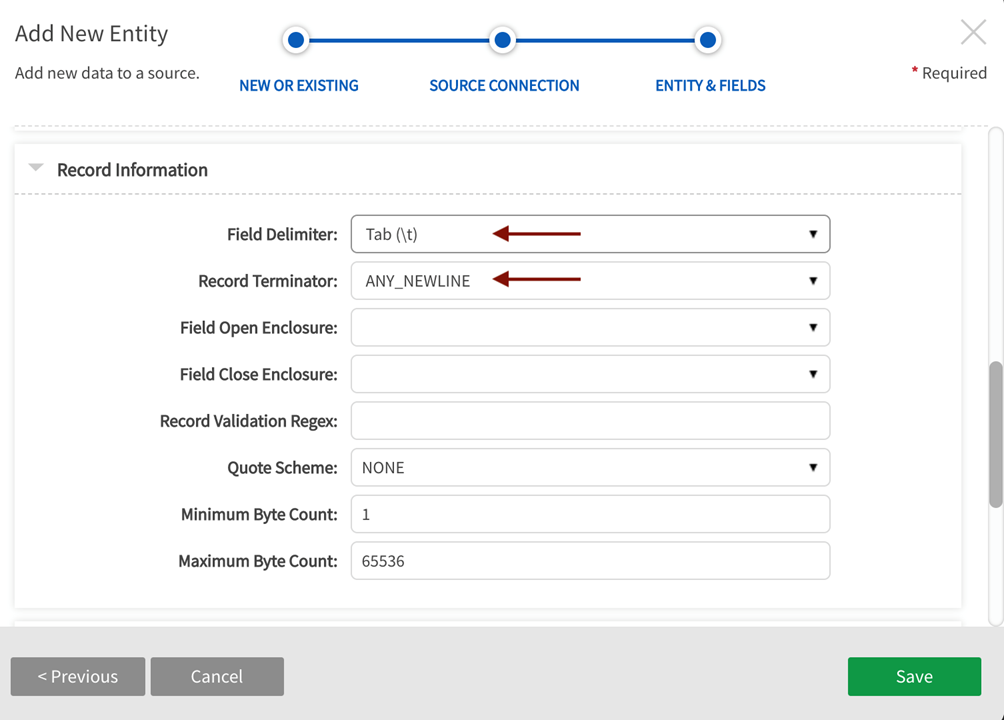

Step 4: Modify record layout

Scroll to Record Information and select Field Delimiter value <Tab (\t)> if it is not selected by default. Select Record Terminator value <ANY_NEWLINE> if it is not selected by default.

Save the Entity.

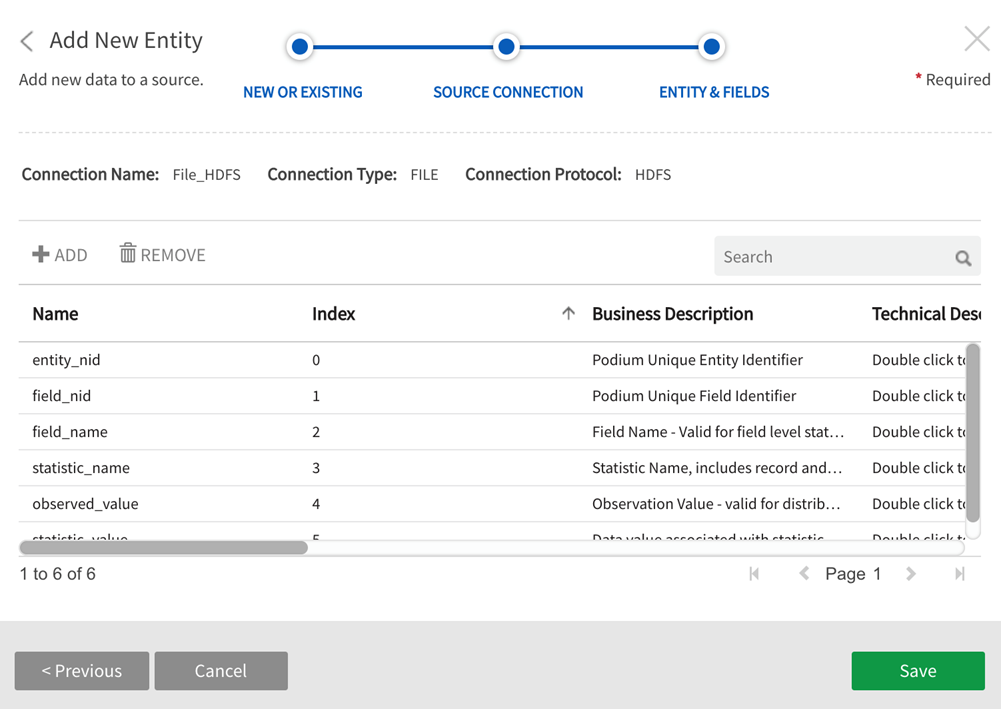

Step 5: Record layout preview

Scroll to the bottom of File Format Specification sections and select Record Layout Preview.

Field metadata displays in preview (field names may not display).Connection Information includes Connection Name, Connection Type, Connection Protocol. Entity metadata columns include: Name, Index, Business Description, Technical Description, Data Type, Required, Encrypted at Source, Encrypt, Key, Validation Regex, Foreign Key.

Savethe entity.

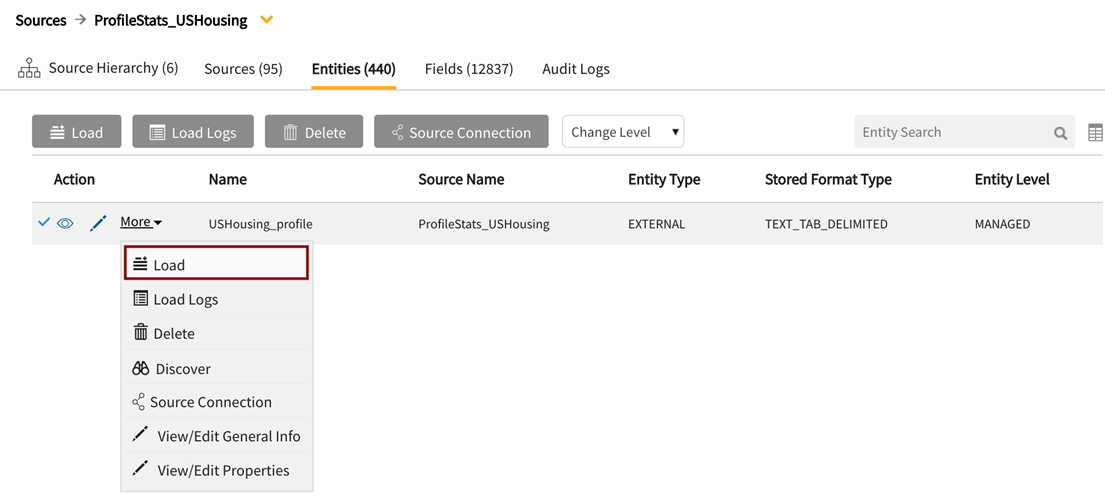

Step 6: Load data to the entity

Select Load from More dropdown to load data to the entity.

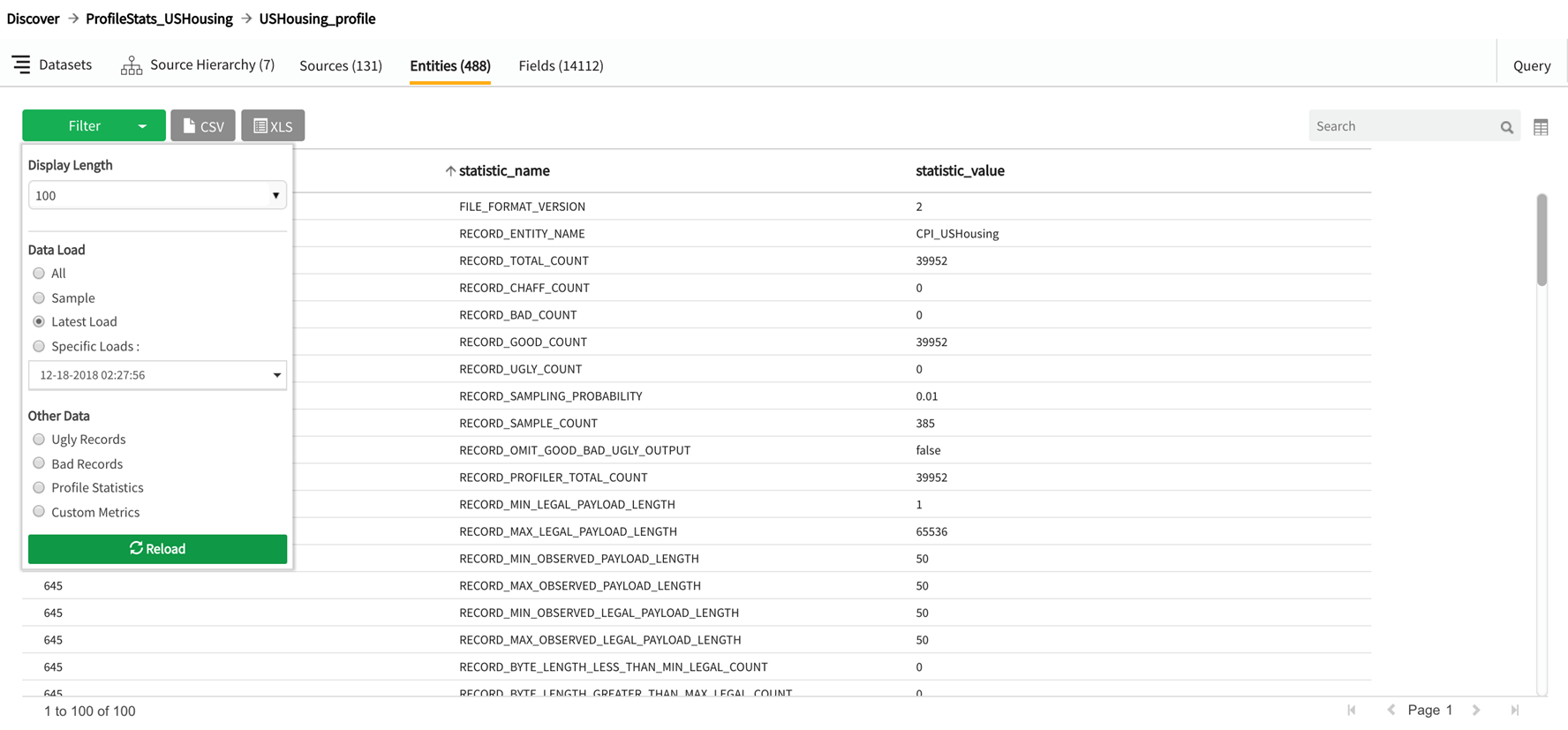

Results for this example are shown below (From discover grid on the entity row, select  Sample Data, then view Data Load: Latest Load).

Sample Data, then view Data Load: Latest Load).

FDL Text for Save/Upload (example)

If preferred, users can use FDL to define metadata for profile data as an alternative to using the entity filepath. Profile data FDL can be found below: Copy and paste and save on local machine as something like FDL.txt file. Select and upload this file on Step 2 of the Add Data wizard (Data Format Specification).

####################################

############ FIELD INFO #############

####################################

# Enter information about each field in following format - one field description per line

# field.n=FIELD_NAME | INDEX | BUSINESS_DESCRIPTION | DATA_TYPE | LENGTH | SCALE | PRECISION | NOT_NULL | IS_ENCRYPTED_AT_SRC | DO_ENCRYPT | IS_KEY | DO_NOT_PROCESS | TRIM | VALIDATION_REGEX | OPEN_QUOTE | CLOSE_QUOTE

# where n=index i.e.1,2,3…..

# FIELD_NAME: Name of the column; column can be part of a group in which case the multiple parts of name are separated by dot ‘.’ - Required attribute and a value must be provided.

# INDEX: Position or index of field in record - Required attribute and a value must be provided.

# BUSINESS_DESCRIPTION: Business description

# DATA_TYPE: Data type of field. Valid values are: INTEGER, DECIMAL, DATE, SYMBOL, STRING, CLOB, BOOLEAN

# LENGTH: Length of field. Required attribute if the value of RECORD.FIELD_DELIMITER IS NULL.

# PRECISION: Precision

# SCALE: Scale

# NOT_NULL: If the field is required or not. Valid values are TRUE, FALSE. Default value is FALSE.

# IS_ENCRYPTED_AT_SRC: If the field data is encrypted at source or not. Valid values are TRUE, FALSE. Default value is FALSE.

# DO_ENCRYPT: Is the field data to be encrypted by the system or not. Valid values are TRUE, FALSE. Default value is FALSE.

# IS_KEY: Is the field part of the record key. Valid values are TRUE, FALSE. Default value is FALSE.

# DO_NOT_PROCESS: Ignore the field during processing. Valid values are TRUE, FALSE. Default value is FALSE.

# NO_TRIM: No not trim leading & trailing whitespace from the field. Valid values are TRUE, FALSE. Default value is FALSE.

# VALIDATION_REGEX: Regular expression to be used for field value validation.Default value is empty String

# NULL_PROXY: Control value that evaluates and converts qualifying values to null

field.entity_nid =entity_nid | 0 |Podium Unique Entity Identifier | INTEGER | 6 | 6 | 0 | FALSE | FALSE | FALSE | FALSE | FALSE | FALSE |

field.field_nid =field_nid | 1 |Podium Unique Field Identifier | INTEGER | 6 | 6 | 0 | FALSE | FALSE | FALSE | FALSE | FALSE | FALSE |

field.field_name =field_name | 2 |Field Name - Valid for field level statistics | STRING | 40| | | FALSE | FALSE | FALSE | FALSE | FALSE | FALSE |

field.statistic_name =statistic_name | 3 |Statistic Name, includes record and field level stats | STRING | 80| | | FALSE | FALSE | FALSE | FALSE | FALSE | FALSE |

field.observed_value =observed_value | 4 |Observation Value - valid for distribution of values only | STRING | 40| | | FALSE | FALSE | FALSE | FALSE | FALSE | FALSE |

field.statistic_value =statistic_value | 5 |Data value associated with statistic name | STRING | 90| | | FALSE | FALSE | FALSE | FALSE | FALSE | FALSE |